What is Crawlability?

Crawlability is the ability of a search engine crawler, such as Googlebot, to access a website’s pages and resources. Crawlability issues can negatively affect a website’s organic search rankings. Crawlability is distinct from indexability, which is the ability of a search engine to analyze a page and add it to its index. As a rule, only pages that are both crawlable and indexable can be properly indexed by Google and appear in search engine results.

Why is Crawlability Important?

Crawlability is vital for any website intended to receive organic search traffic. It allows search engines to read and analyze a page’s content so that the page can be added to the search index. A page cannot be properly indexed without being crawled. Google can sometimes index a URL without crawling it, based on the URL text and the anchor text of its backlinks, but in that case the page title and description won’t show up on the SERP.

Crawlability is not only important for Google. Other crawlers also need to access website pages for various reasons. For example, Ranktracker’s Site Audit bot crawls website pages to check their SEO health and report any SEO issues.

What Affects a Website’s Crawlability?

1. Page Discoverability

Before crawling a web page, a crawler must first discover it. Pages that are not listed in the sitemap and have no internal links pointing to them are unlikely to be found by the crawler and, therefore, are unlikely to be crawled or indexed. (Pages with no internal links are known as orphan pages.) If you want a page to be indexed, it should be included in the sitemap or linked internally, ideally both.
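The check described above can be sketched as a comparison between the URLs listed in a sitemap and the URLs that are actually linked from other pages. This is a minimal illustration, not how any particular crawler works: `find_orphans`, `LinkCollector`, and the `example.com` URLs are hypothetical, and the page HTML is assumed to have been fetched already.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkCollector(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links and drop any #fragment.
            absolute, _fragment = urldefrag(urljoin(self.base_url, href))
            self.links.add(absolute)


def find_orphans(sitemap_urls, pages):
    """Return sitemap URLs that no other page links to.

    `pages` maps each page URL to its HTML source (assumed already fetched).
    """
    linked = set()
    for url, html in pages.items():
        collector = LinkCollector(url)
        collector.feed(html)
        linked |= collector.links - {url}  # a page linking to itself does not count
    return sorted(set(sitemap_urls) - linked)


# Hypothetical three-page site: nothing links to /old-promo.
pages = {
    "https://example.com/": '<a href="/about">About</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/old-promo": '<a href="/">Home</a>',
}
print(find_orphans(list(pages), pages))  # ['https://example.com/old-promo']
```

A real audit would also need to normalize trailing slashes, follow redirects, and handle URLs that appear in links but not in the sitemap.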

2. Nofollow Links

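Googlebot generally does not follow links with the “rel=nofollow” attribute (Google treats the attribute as a hint rather than a strict rule). If the only link pointing to a page is a nofollow link, then in terms of crawling it is roughly equivalent to the page having no links at all.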
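To see which links on a page carry the attribute, the HTML can be parsed and the links split by their `rel` value. The sketch below uses only Python's standard library; `split_links` and `NofollowAudit` are illustrative names, and the sample markup is made up.

```python
from html.parser import HTMLParser


class NofollowAudit(HTMLParser):
    """Sorts <a href> links into followed and nofollowed lists."""

    def __init__(self):
        super().__init__()
        self.followed = []
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if not href:
            return
        # rel can hold several space-separated tokens, e.g. "noopener nofollow".
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        target = self.nofollowed if "nofollow" in rel_tokens else self.followed
        target.append(href)


def split_links(html):
    """Return (followed_links, nofollowed_links) for one HTML document."""
    audit = NofollowAudit()
    audit.feed(html)
    return audit.followed, audit.nofollowed


sample = '<a href="/pricing">Pricing</a> <a rel="noopener nofollow" href="/login">Log in</a>'
print(split_links(sample))  # (['/pricing'], ['/login'])
```

This only inspects link-level attributes; a page-level nofollow directive in a robots meta tag would need a separate check.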

3. Robots.txt File

A robots.txt file tells web crawlers which parts of your site they can and cannot access. If you want a page to be crawlable, it must not be disallowed in robots.txt.
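As a rough way to test whether a URL is disallowed, Python's standard `urllib.robotparser` module can evaluate robots.txt rules. The rules and URLs below are invented for illustration, and `is_allowed` is a hypothetical helper. Python's parser may resolve conflicting Allow/Disallow rules differently from Googlebot, so treat the result as an approximation.

```python
from urllib.robotparser import RobotFileParser

# Example rules, assumed for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if `user_agent` may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/admin/settings"))  # False
print(is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/blog/post"))       # True
```

To check a live site instead, `RobotFileParser` also offers `set_url()` and `read()` to download the file before calling `can_fetch()`.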

4. Access Restrictions

Web pages can have specific restrictions that keep crawlers from reaching them, such as:

- A login requirement

- User-agent blacklisting

- IP address blacklisting
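One way to spot restrictions like these is to request a page while identifying as a given crawler and look at the HTTP status code that comes back. The sketch below is a simplified assumption about how such responses usually look: `fetch_status` and `classify_status` are hypothetical helpers, and the mapping from status codes to causes is a heuristic, not a definitive diagnosis.

```python
import urllib.error
import urllib.request


def fetch_status(url, user_agent):
    """Request `url` with the given User-Agent header and return the HTTP status."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses are raised as exceptions; keep the code.
        return error.code


def classify_status(status):
    """Map a status code to a likely crawlability verdict (heuristic only)."""
    if 200 <= status < 300:
        return "accessible"
    if status == 401:
        return "login required"
    if status == 403:
        return "forbidden (possible user-agent or IP block)"
    if status == 429:
        return "rate limited"
    return "other"


print(classify_status(403))  # forbidden (possible user-agent or IP block)
```

Comparing `fetch_status(url, ...)` for a browser-like user agent and a crawler-like one can hint at user-agent blocking. Sites that redirect to a login page may still return 200, so the status code alone is not conclusive.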

How to Find Crawlability Issues on Your Website

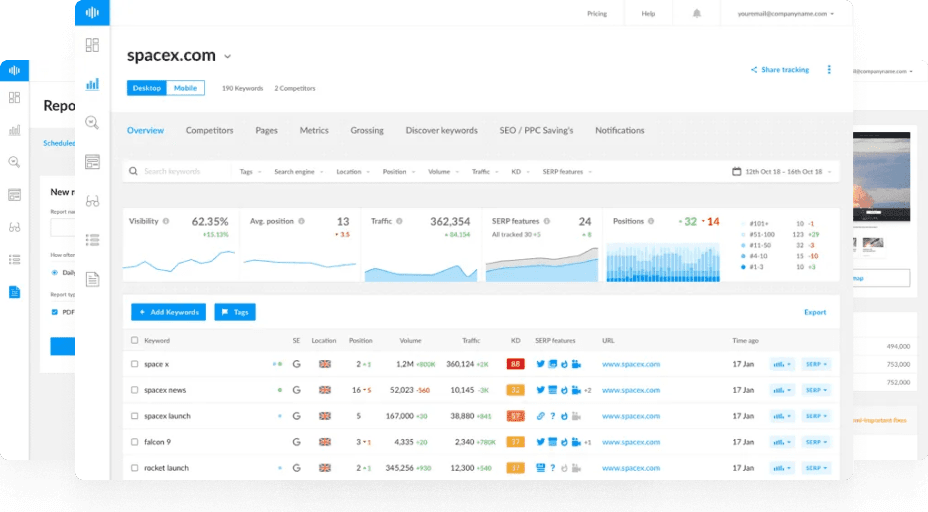

The easiest way to detect crawlability issues on a website is to use an SEO tool such as Ranktracker’s Site Audit or the free Ranktracker Webmaster Tools.

Ranktracker Webmaster Tools can crawl the whole website, keeping tabs on new or recurring issues over time. Additionally, it will break down issues into different categories, helping you better understand your site’s overall SEO performance and why your site cannot be crawled.

FAQs

What’s the Difference Between Crawlability and Indexability?

Crawlability is the ability of a search engine to access a web page and crawl its content. Indexability is the ability of a search engine to analyze the content it crawls to add it to its index. A page can be crawlable but not indexable.
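A common example of a crawlable but non-indexable page is one that crawlers can fetch but that carries a `noindex` directive in its robots meta tag. The following sketch checks for that directive; `has_noindex` and `RobotsMetaParser` are illustrative names, and the check ignores other signals such as the `X-Robots-Tag` HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        if (attr_map.get("name") or "").lower() != "robots":
            return
        content = attr_map.get("content") or ""
        # Directives are comma-separated, e.g. "noindex, follow".
        self.directives |= {
            token.strip().lower() for token in content.split(",") if token.strip()
        }


def has_noindex(html):
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))  # True
print(has_noindex("<head><title>Pricing</title></head>"))                          # False
```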

Can a Webpage be Indexed in Google Without Crawling?

Surprisingly, Google can index a URL without crawling it, allowing the URL to appear in search results. However, this is a rare occurrence. When it happens, Google uses the anchor text of links to the page and the URL text to determine the purpose and content focus of the page. Note that Google won’t show the page’s title in this case. This behavior is briefly explained in Google’s Introduction to robots.txt.

For more insights on improving your website's SEO and ensuring proper crawlability, visit the Ranktracker Blog and explore our comprehensive SEO Guide. Additionally, familiarize yourself with key SEO terms and concepts in our SEO Glossary.