Intro

Generative search has changed how information appears online — and regulators around the world are responding. As AI becomes the interface to the web, new transparency requirements are emerging that dictate:

- how AI systems must disclose their influence
- when users must be informed of AI generation
- what brands must reveal about AI usage in their content
- how companies must communicate AI involvement in decisions, recommendations, or summaries
- how generative engines must categorize and attribute information

These requirements impact everyone:

- marketers
- publishers
- SEO and GEO professionals
- SaaS companies
- media organizations
- regulated industries (finance, health, law)

This article explains the transparency landscape, outlines the disclosure requirements, and shows how to build GEO content that complies with AI transparency laws without sacrificing visibility.

Part 1: Why Transparency Matters in Generative Search

Generative engines have shifted authority from static pages to dynamic synthesis. This creates three challenges:

1. Users may not realize answers are AI-generated

This can lead to misplaced trust.

2. AI-generated content may obscure the original sources

Transparency becomes essential for fairness and attribution.

3. AI systems can influence user decisions

Regulators increasingly treat this as a form of automated decision-making.

Transparency protects:

- user autonomy
- content creators
- brand integrity
- legal compliance
- fair competition

In GEO, transparency is not just mandatory — it builds trust with both users and AI engines.

Part 2: Global Transparency Requirements You Must Understand

Across 2023–2025, governments introduced new AI transparency frameworks. The most influential include:

The EU AI Act

Requires disclosure when:

- AI generates substantive content
- AI influences decisions
- users interact with AI-generated recommendations
- synthetic media is involved
- AI summarization affects user understanding

Companies must inform users that content was shaped or influenced by AI.

US Executive Orders on AI

Emphasize:

- watermarking
- provenance
- content disclosure
- safety classification for high-risk AI outputs

Brands must be able to show which content involved AI.

UK AI Governance Principles

Require:

- transparency
- explainability
- identity disclosure
- “meaningful human oversight”

Content influenced by AI must be clearly disclosed.

OECD AI Principles

Establish guidelines for:

- transparency
- accountability
- safe AI deployment
- data provenance

These principles are non-binding, but they strongly influence national and international AI policy.

Platform-Specific Transparency (Google, OpenAI, Meta, TikTok, YouTube)

Platforms may require:

- AI labels
- automated content disclosures
- origin tags
- training influence tags

For publishers, this affects distribution and ranking.

Transparency is no longer voluntary — it is a formal compliance requirement.

Part 3: What “AI Influence” Means in a GEO Context

Transparency laws don’t just apply to content created by AI. They apply to content shaped, edited, or informed by AI systems.

AI influence includes:

- generative writing
- grammar correction
- style rewriting
- keyword drafting
- outlining
- summarization
- content clustering
- fact suggestion
- metadata generation
- image creation
- data extraction
- competitor analysis
- passage optimization

If AI meaningfully changes the substance, framing, or structure of your content, that counts as influence, and disclosure becomes required.

Part 4: Types of AI Influence That Must Be Disclosed

Here are the categories most regulators require disclosure for.

1. AI-Generated Text

Content written fully or partially by generative models.

2. AI-Assisted Writing or Editing

Text that AI rewrites, expands, or restructures.

3. AI-Generated Summaries

Summaries, meta descriptions, or snippets influenced by AI.

4. AI-Generated Images

Graphics, thumbnails, or illustrations made with GenAI tools.

5. AI-Powered Decision Support

AI-optimized recommendations or rankings (e.g., “best X tools”).

6. AI-Influenced Metadata

Titles, H1s, schema, alt text, and keyword structures.

7. AI-Influenced Research

When AI extracts, clusters, or rewrites research insights.

8. Synthetic Personas or Fabricated Experts

Fake authors or fabricated credentials, which most frameworks prohibit.

9. Automated Product Reviews or Comparisons

AI-generated user opinion content — highly regulated.

10. AI Influence on Regulated Advice

Finance, health, law, insurance, or safety content must reveal AI participation.

Transparency ensures users know when content is shaped by non-human intelligence.

Part 5: Where Transparency Is Required

Depending on jurisdiction and platform, disclosure may be required in:

- article headers
- footnotes
- About pages
- modal tooltips
- structured data
- authorship fields
- user-facing disclaimers
- image metadata
- sitemap entries
- model cards (for developers)

The correct placement depends on the degree of influence.

Part 6: How Generative Engines Handle Transparency

Generative engines themselves must comply with transparency rules. This affects GEO visibility.

Google SGE

Labels “AI-generated” sections with info boxes.

Bing Copilot

Displays citations, provenance notes, and source indicators.

ChatGPT Browse

Announces a “summary generated by ChatGPT.”

Perplexity

Shows detailed source lists for transparency.

Claude

Includes disclaimers for uncertain or speculative content.

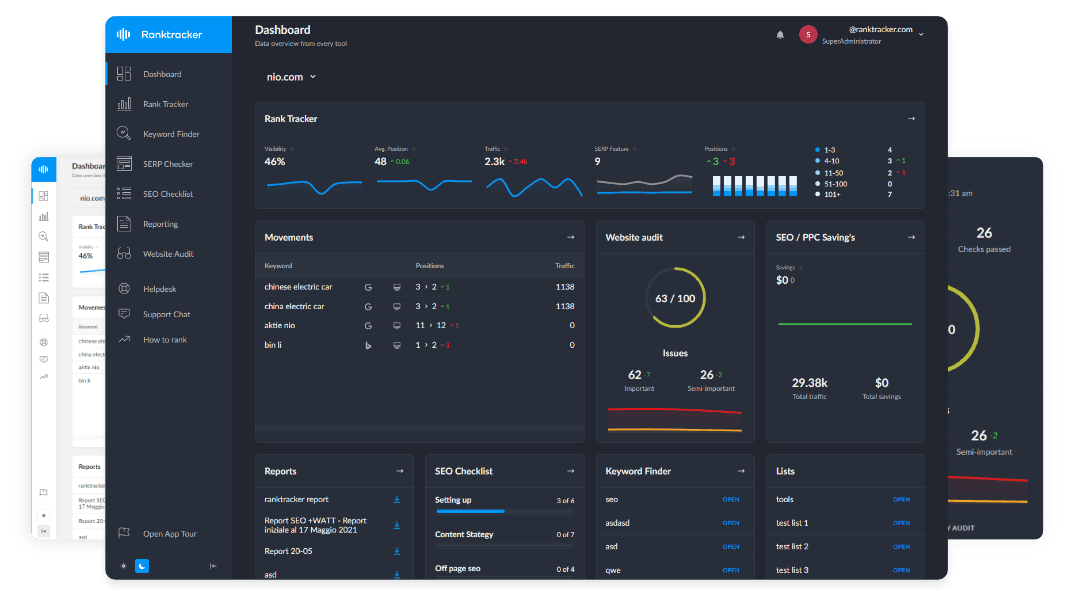

The All-in-One Platform for Effective SEO

Behind every successful business is a strong SEO campaign. But with countless optimization tools and techniques out there to choose from, it can be hard to know where to start. Well, fear no more, cause I've got just the thing to help. Presenting the Ranktracker all-in-one platform for effective SEO

We have finally opened registration to Ranktracker absolutely free!

Create a free accountOr Sign in using your credentials

Knowing this helps you align your GEO strategy with platform expectations.

Part 7: How To Comply With Transparency Requirements Without Hurting GEO

Brands need a balance:

- too much disclosure → reduced authority
- too little disclosure → regulatory risk

The strategies below aim for that balance.

Strategy 1: Use Human-AI Hybrid Disclosure

Example:

This article was created with the assistance of AI tools and reviewed by human editors.

Short, simple, compliant.

Strategy 2: Place Disclosures in Non-Disruptive Locations

Use:

- footer
- end of article
- About page
- global site notice

Avoid disrupting the reading flow.

Strategy 3: Use Schema.org for Transparency

Add:

- isBasedOn
- creator
- reviewedBy
- citation
- maintainer
- dateModified

Schema helps AI engines understand human involvement.
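As a rough illustration, the properties listed above could be emitted as JSON-LD along the following lines. This is a minimal sketch, not an official template: the function name, argument names, and sample values are hypothetical, and the page is typed as WebPage on the assumption that your pages fit that type, since schema.org defines reviewedBy on WebPage.

```python
import json
from datetime import date


def build_transparency_jsonld(headline, author_name, reviewer_name,
                              source_urls, modified):
    """Return a JSON-LD string describing human involvement in a page.

    Hypothetical helper: the argument names are illustrative only.
    """
    data = {
        "@context": "https://schema.org",
        # WebPage is used here because reviewedBy is a WebPage property.
        "@type": "WebPage",
        "headline": headline,
        # The human who owns the content.
        "creator": {"@type": "Person", "name": author_name},
        # The human editor who checked it.
        "reviewedBy": {"@type": "Person", "name": reviewer_name},
        # Assumption: the author also maintains the page.
        "maintainer": {"@type": "Person", "name": author_name},
        # Sources the content was derived from and cites.
        "isBasedOn": source_urls,
        "citation": source_urls,
        # ISO 8601 date of the last substantive change.
        "dateModified": modified.isoformat(),
    }
    return json.dumps(data, indent=2)


# Example with made-up values.
print(build_transparency_jsonld(
    "AI Transparency in GEO",
    "Jane Example",
    "John Example",
    ["https://example.com/source-report"],
    date(2025, 1, 2),
))
```

The output would typically be placed in a script tag of type application/ld+json. Whether any given engine actually reads these fields is not something this sketch can guarantee.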

Strategy 4: Maintain Verified Human Authors

AI can assist — but humans must own:

- expertise
- experience
- oversight
- accountability

This increases GEO trust.

Strategy 5: Add Versioning & Timestamps

Show when each page was published and last updated, and keep a record of what changed. Engines prefer transparent updates.
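One way to keep versions and timestamps consistent is a small revision log per page. The sketch below is illustrative only: the Revision and PageHistory names, and the ai_assisted flag, are assumptions made for this example rather than any standard.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Revision:
    version: int
    modified: str       # ISO 8601 timestamp in UTC
    summary: str        # short description of what changed
    ai_assisted: bool   # hypothetical flag: was AI involved in this edit?


@dataclass
class PageHistory:
    revisions: list = field(default_factory=list)

    def record(self, summary, ai_assisted, when=None):
        """Append a new revision and return it."""
        when = when or datetime.now(timezone.utc)
        rev = Revision(
            version=len(self.revisions) + 1,
            modified=when.isoformat(timespec="seconds"),
            summary=summary,
            ai_assisted=ai_assisted,
        )
        self.revisions.append(rev)
        return rev

    def date_modified(self):
        """Timestamp of the latest revision, or None if there are none."""
        return self.revisions[-1].modified if self.revisions else None


history = PageHistory()
history.record("Initial human-written draft", ai_assisted=False)
history.record("AI-assisted copyedit, reviewed by editor", ai_assisted=True)
print(history.date_modified())
```

The value returned by date_modified() can feed both the visible "last updated" line and the dateModified field in structured data, so the two do not drift apart.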

Strategy 6: Document Your AI Usage Policy

Publish a page that clearly explains:

- where AI is used
- how content is reviewed
- what teams oversee it
- how accuracy is ensured

This improves trust and reduces risk.

Strategy 7: Maintain a High Fraction of Human-Created Value

Even with AI:

- human insights
- proprietary data
- expert commentary
- original analysis

are essential for GEO visibility.

Part 8: Transparency for Regulated Content (Health, Finance, Legal)

AI usage in these categories MUST be disclosed. Recommended practices:

- add disclaimers
- cite human experts
- provide sources
- include risk statements
- avoid AI-generated recommendations
- enforce strict human review

These signals protect both users and brands.

Part 9: Transparency Pitfalls to Avoid

1. Fake human authors

Illegal under most frameworks.

2. AI-generated images without disclosure

Especially problematic in journalism.

3. Not updating metadata after AI editing

Engines check timestamps.

4. Ambiguous disclaimers

Transparency must be clear and concise.

5. Overreliance on AI for sensitive topics

High regulatory exposure.

6. Using AI summaries as authoritative sources

Always verify accuracy.

Part 10: The Transparency Compliance Checklist (Copy/Paste)

General

- Disclose AI-assisted content
- Add global AI usage policy
- Maintain human oversight documentation
- Timestamp all updates

Content

- Human-reviewed
- Accurate and verified
- No fabricated experts
- Clear authorship

Metadata

- Use Schema transparency fields
- Structured data matches human author
- Accurate modification dates

AI Tools

- Document where AI is used
- Validate outputs
- Track version changes

Images

- Disclose AI-generated illustrations
- Verify there are no copyright issues with the tool's training data

Regulated Industries

- Add disclaimers
- Human expert verification
- Clear non-advisory statement

This checklist ensures compliance with global transparency frameworks.
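Parts of the checklist above can be automated as a pre-publish check. The following is a minimal sketch under stated assumptions: the page record is a plain dictionary, and its keys (ai_disclosure, human_reviewed, author_name, date_modified, regulated, non_advisory_statement) are hypothetical names chosen for this example, not fields of any particular CMS.

```python
# Map hypothetical page-record keys to checklist items from this article.
REQUIRED_CHECKS = {
    "ai_disclosure": "Disclose AI-assisted content",
    "human_reviewed": "Human-reviewed",
    "author_name": "Clear authorship",
    "date_modified": "Accurate modification dates",
}


def audit_page(page):
    """Return the checklist items that a page record fails.

    A check fails when its key is missing or holds an empty/false value.
    This only tests presence; it cannot judge whether a date or a
    disclosure is actually accurate.
    """
    missing = [label for key, label in REQUIRED_CHECKS.items()
               if not page.get(key)]
    # Regulated content additionally needs a non-advisory statement.
    if page.get("regulated") and not page.get("non_advisory_statement"):
        missing.append("Clear non-advisory statement")
    return missing


page = {
    "ai_disclosure": "Created with AI assistance and reviewed by human editors.",
    "human_reviewed": True,
    "author_name": "Jane Example",
    "date_modified": "2025-01-02",
    "regulated": True,
}
print(audit_page(page))
```

A check like this only catches missing fields; items such as "Accurate and verified" or "No fabricated experts" still need human review.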

Conclusion: Transparency Is Now Part of GEO Governance

Generative search has made transparency essential — not optional. Users deserve to know when AI influences the content they read. Regulators require disclosure to protect fairness and safety. Generative engines reward transparency with trust and citation stability.

By disclosing AI influence clearly, consistently, and intelligently, brands:

- build credibility
- protect themselves legally
- maintain visibility
- strengthen entity authority
- ensure compliance
- support user trust
- future-proof their GEO strategy

Transparency isn’t just a rule. It’s a competitive advantage in the AI-first web.