Intro

XML sitemaps and robots.txt files are core components of technical SEO. A sitemap tells search engines which URLs you want them to find, and robots.txt tells crawlers which URLs they may request. Configured correctly, they help search engines crawl and index the pages you care about, which supports your visibility in search results.

1. What is an XML Sitemap?

An XML sitemap is a structured file that lists the important URLs on your website, helping search engines discover and crawl your content.
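To make the format concrete, here is a minimal Python sketch that builds a sitemap with the standard library’s xml.etree.ElementTree. It follows the sitemaps.org protocol (a urlset root in the http://www.sitemaps.org/schemas/sitemap/0.9 namespace, one url entry per page with a loc and an optional lastmod); the build_sitemap function and the example.com URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML as a string.

    `pages` is a list of (absolute_url, lastmod) tuples, where lastmod is a
    W3C date such as "2024-05-01", or None if the date is unknown.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & and < in the text for us.
        ET.SubElement(url, "loc").text = loc
        if lastmod is not None:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2024-05-01"),
        ("https://example.com/blog/", None),
    ]))
```

Sitemap tools and plugins produce this file for you; the sketch is only meant to show what the structure looks like.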

Benefits of XML Sitemaps:

- Helps search engines find key pages, including orphan pages that have no internal links pointing to them.

- Can speed up the discovery of new or updated content, which may lead to faster indexing.

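- Provides metadata about each page, such as the last modified date (lastmod). Google uses lastmod when it is consistently accurate, but it ignores the priority and changefreq values.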

How to Create an XML Sitemap:

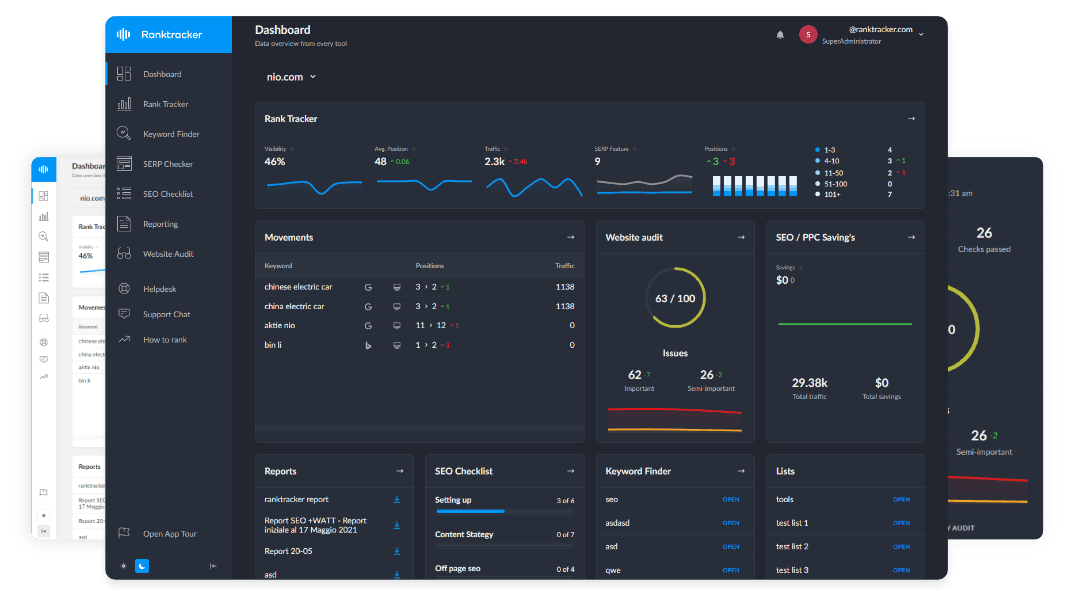

- Use tools like Ranktracker’s Web Audit or Yoast SEO (for WordPress users).

- Include only indexable, canonical pages; leave out duplicate and thin content.

- Submit the sitemap to Google Search Console and Bing Webmaster Tools.
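If you generate the sitemap yourself rather than with a plugin, the rule of including only indexable, canonical pages can be expressed as a small filter. This Python sketch assumes you already have crawl data for each page; the Page record and its fields (status, noindex, canonical) are hypothetical names, and thin content is left out because judging it needs editorial review rather than a mechanical check.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Page:
    """Hypothetical crawl record for one page; field names are illustrative."""
    url: str
    status: int               # HTTP status code returned for the URL
    noindex: bool             # True if the page carries a noindex directive
    canonical: Optional[str]  # rel="canonical" target, or None if absent


def sitemap_candidates(pages):
    """Keep URLs that return 200, are indexable, and are their own canonical."""
    seen = set()
    keep = []
    for page in pages:
        # Skip redirects, errors, and pages explicitly marked noindex.
        if page.status != 200 or page.noindex:
            continue
        # A page whose canonical points elsewhere is a duplicate; list the canonical instead.
        if page.canonical is not None and page.canonical != page.url:
            continue
        # Drop exact duplicates while preserving the original order.
        if page.url not in seen:
            seen.add(page.url)
            keep.append(page.url)
    return keep
```

The resulting list is what you would pass to whatever step writes the sitemap file.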

2. What is a Robots.txt File?

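The robots.txt file tells search engine crawlers which URLs on your site they may or may not crawl. It controls crawling, not indexing: a disallowed URL can still appear in search results if other pages link to it.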

Key Functions of Robots.txt:

- Keeps compliant crawlers away from private or low-value pages (e.g., admin areas, login pages).

- Reduces server load by restricting unnecessary crawling.

- Can reduce crawling of duplicate, parameterized URLs (e.g., sort or filter variants of the same page).
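A robots.txt file is plain text made of User-agent groups followed by Allow and Disallow rules whose values are URL path prefixes. The sketch below uses Python’s standard urllib.robotparser module to show how a compliant crawler would read some example rules; the paths and example.com URLs are placeholders. This parser applies the first matching rule in file order and does not support the * and $ wildcards, so it only approximates how Google evaluates more complex files.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt: block the admin area and the login page for every crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /login
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in (
    "https://example.com/admin/settings",
    "https://example.com/login",
    "https://example.com/blog/xml-sitemaps",
):
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{verdict}: {url}")
# blocked: https://example.com/admin/settings
# blocked: https://example.com/login
# allowed: https://example.com/blog/xml-sitemaps
```

Because rule values are prefixes, Disallow: /login also blocks a page such as /login-help, so choose paths carefully.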

Best Practices for Robots.txt:

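- Use Disallow directives to keep crawlers out of areas they don’t need to visit. Because robots.txt is publicly readable and only honored by well-behaved bots, protect truly sensitive pages with authentication instead.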

- Keep it accessible at the root of your domain: yourdomain.com/robots.txt.

- Make sure important pages are not disallowed, so crawlers can reach and index them.
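Crawlers look for robots.txt only at the root of each protocol and host combination, so a file placed in a subfolder is ignored and every subdomain needs its own file. This small Python sketch, built on urllib.parse from the standard library, derives the robots.txt location that governs any page URL; the function name robots_url is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Return the robots.txt URL that governs page_url.

    Only scheme, host, and port matter; the page's path, query string,
    and fragment are discarded.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.example.com/blog/post?id=3"))
# https://www.example.com/robots.txt
print(robots_url("https://shop.example.com:8443/cart"))
# https://shop.example.com:8443/robots.txt
```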

3. How XML Sitemaps & Robots.txt Work Together

Both files serve different purposes but complement each other in technical SEO.

How They Work Together:

- XML Sitemap: Lists all essential pages for indexing.

- Robots.txt: Tells search engines which pages to crawl or ignore.

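- Make sure robots.txt does not block the sitemap file or the URLs listed in it; a disallowed URL cannot be crawled even when the sitemap lists it. You can also point crawlers to your sitemap with a Sitemap: line in robots.txt.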
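The two files can be cross-checked automatically. This Python sketch uses the standard urllib.robotparser module (Python 3.8 or later, for site_maps()) to read any Sitemap: lines from a robots.txt file and report which declared sitemap files or sitemap entries the rules would block. The check_robots function, the placeholder rules, and the Googlebot default are assumptions for illustration, and because the standard-library parser does not support wildcards, results can differ from Google’s for complex files.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt that also advertises the sitemap location.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""


def check_robots(robots_txt, sitemap_urls, user_agent="Googlebot"):
    """Return (declared_sitemaps, blocked_urls) for a robots.txt body.

    blocked_urls lists every declared sitemap file and every sitemap entry
    that the rules would stop `user_agent` from crawling.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file has no Sitemap: lines.
    declared = parser.site_maps() or []
    candidates = list(declared) + list(sitemap_urls)
    blocked = [url for url in candidates if not parser.can_fetch(user_agent, url)]
    return list(declared), blocked


declared, blocked = check_robots(
    ROBOTS_TXT,
    ["https://example.com/", "https://example.com/admin/report"],
)
print(declared)  # ['https://example.com/sitemap.xml']
print(blocked)   # ['https://example.com/admin/report']
```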

4. Common Mistakes to Avoid

Misconfigurations can negatively impact SEO, so avoid these mistakes:

Common Errors:

- Blocking essential pages in robots.txt (e.g., Disallow: /blog/).

- Not updating XML sitemaps when adding or removing pages.

- Listing non-canonical or duplicate pages in the XML sitemap.

- Using Disallow on resources (CSS, JS) that impact page rendering.
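An outdated sitemap is easier to catch with a check than to avoid by memory. This Python sketch compares the URLs listed in a sitemap with the URLs that are actually live, reporting stale entries (listed but removed) and missing ones (live but not listed). Where the list of live URLs comes from, such as your CMS or a site crawl, is an assumption left to you, and sitemap_drift is a hypothetical name.

```python
import xml.etree.ElementTree as ET

# Prefix mapping for the sitemaps.org namespace, used in findall() paths.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_drift(sitemap_xml, live_urls):
    """Return (stale, missing) as sorted lists.

    stale:   URLs listed in the sitemap that are no longer live.
    missing: live URLs that the sitemap does not list.
    """
    root = ET.fromstring(sitemap_xml)
    listed = {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", NS)
        if loc.text  # ignore empty <loc> elements
    }
    live = set(live_urls)
    return sorted(listed - live), sorted(live - listed)
```

Running a check like this whenever pages are published or deleted keeps the sitemap aligned with the site.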

Final Thoughts

A properly configured XML sitemap and robots.txt file ensure efficient crawling and indexing of your website. By following best practices, you can enhance your site’s SEO performance and improve search visibility.