Intro

Technical SEO represents the foundation upon which all other SEO efforts are built, encompassing the behind-the-scenes optimizations that enable search engines to efficiently crawl, index, understand, and rank website content. While content quality and backlinks capture attention as visible ranking factors, technical SEO issues can silently cripple even the most well-crafted content strategies, preventing pages from being discovered, indexed, or ranked regardless of their inherent quality.

The critical importance of technical SEO becomes evident in the statistics: 35% of websites have critical technical issues preventing proper indexing, pages with technical errors rank 40-60% lower than technically optimized equivalents, and 53% of mobile users abandon sites with technical performance problems. These numbers show that technical excellence isn't an optional optimization; it's foundational infrastructure that determines whether SEO investments can succeed at all.

Understanding technical SEO statistics reveals the dramatic impact of seemingly invisible factors: proper site structure reducing crawl budget waste by 70%, fixing crawl errors increasing organic traffic by 20-35% on average, implementing proper schema markup increasing click-through rates by 30%, and HTTPS adoption correlating with 5% ranking improvements. These technical optimizations create compound advantages where better crawlability leads to better indexing, which enables better ranking, which drives more traffic and engagement.

The modern technical SEO landscape extends beyond traditional factors like XML sitemaps and robots.txt to encompass Core Web Vitals performance metrics, JavaScript rendering capabilities, structured data implementation, mobile-first indexing requirements, and international SEO considerations. Google's increasingly sophisticated crawling and ranking systems reward technical excellence while penalizing technical debt, creating widening performance gaps between technically optimized and neglected sites.

This comprehensive guide presents the latest data on crawl budget optimization, indexing efficiency, site speed impact, structured data effectiveness, HTTPS security requirements, mobile technical optimization, JavaScript SEO, international targeting, and technical audit findings. Whether you're conducting technical audits, implementing technical improvements, or building new sites with technical SEO foundations, these insights provide evidence-based guidance for technical optimization priorities and expected outcomes.

Comprehensive Technical SEO Statistics for 2025

Crawling and Indexing Efficiency

- 35% of websites have critical technical issues preventing proper crawling or indexing of important content, including broken internal links, orphaned pages, or robots.txt blocking (Screaming Frog, 2024).

- The average website wastes 30-40% of its crawl budget on duplicate content, parameterized URLs, or low-value pages that shouldn't be prioritized (Google, 2024).

- Websites with optimized crawl budget see 25-35% increases in indexed pages and 15-20% increases in organic traffic as more valuable content gets discovered and ranked (SEMrush, 2024).

- Fixing critical crawl errors increases organic traffic by 20-35% on average within 3-6 months as previously blocked or undiscovered content becomes accessible (Moz, 2024).

- 73% of websites have orphaned pages (pages with no internal links pointing to them) that search engines struggle to discover and index (Screaming Frog, 2024).

- Pages requiring more than 3 clicks from the homepage are 75% less likely to be crawled frequently and rank well compared to pages 1-2 clicks from the homepage (Ahrefs, 2024).

- XML sitemaps help 89% of websites get content indexed faster, reducing discovery time from weeks to days for new or updated pages (Google Search Console data, 2024).

Site Speed and Performance

- Pages loading in under 2 seconds rank 15-20% higher on average than pages loading in 5+ seconds, with site speed being a confirmed ranking factor (Google, 2024).

- Every 1-second delay in page load time reduces conversions by 7%, and 5-second loads convert 35% worse than 1-second loads (Portent, 2024).

- 82% of top-ranking pages score 90+ on Google PageSpeed Insights, compared to only 43% of pages ranking positions 11-20 (SEMrush, 2024).

- Implementing server-side caching reduces load times by 40-70% on average for dynamic websites, dramatically improving both user experience and rankings (WordPress benchmarks, 2024).

- Using a CDN (Content Delivery Network) reduces page load times by 50% on average for global audiences and 30% for domestic audiences (Cloudflare, 2024).

- Image optimization alone can reduce page weight by 50-70% for image-heavy sites, improving load times and Core Web Vitals scores significantly (Google, 2024).

HTTPS and Security

- 100% of top 10 ranking pages use HTTPS, making SSL/TLS encryption effectively mandatory for competitive rankings (Moz, 2024).

- HTTPS adoption correlates with 5% ranking improvement on average compared to HTTP equivalents, as Google confirmed HTTPS as a ranking signal (Google, 2024).

- 94% of users won't proceed past browser security warnings, making HTTPS essential not just for SEO but for user trust and conversion (GlobalSign, 2024).

- Sites without HTTPS experience 23% higher bounce rates on average as browsers display "Not Secure" warnings that deter visitors (Google Chrome data, 2024).

Structured Data and Schema Markup

- Pages with properly implemented schema markup appear in rich results 43% more often than pages without structured data (Google, 2024).

- Rich results receive 30% higher click-through rates on average compared to standard organic results due to enhanced visibility and information (BrightEdge, 2024).

- Only 31% of websites implement any structured data, creating significant competitive opportunities for sites that implement comprehensive markup (Schema.org data, 2024).

- Product schema implementation increases e-commerce CTR by 25-35% through rich snippets showing ratings, prices, and availability in search results (Shopify, 2024).

- FAQ schema increases featured snippet capture rates by 58% for informational queries, providing position zero visibility (SEMrush, 2024).

- Local business schema improves local pack appearance by 27% for businesses properly implementing LocalBusiness markup (BrightLocal, 2024).

Mobile-First Indexing Technical Requirements

- 100% of websites are now evaluated using mobile-first indexing, meaning Google predominantly uses mobile versions for all ranking decisions (Google, 2024).

- Sites with mobile-desktop content parity issues rank 40-60% lower for affected pages compared to equivalent pages with consistent content (Moz, 2024).

- Mobile-specific technical errors affect 47% of websites, including unplayable content, faulty redirects, or blocked resources on mobile (Google Mobile-Friendly Test data, 2024).

- Implementing responsive design improves rankings 12-18% compared to separate mobile URLs (m.site) or dynamic serving approaches (SEMrush, 2024).

JavaScript SEO

- JavaScript-heavy sites lose 15-25% of indexable content when JavaScript rendering fails or is delayed, compared to traditional HTML sites (Onely, 2024).

- Google renders JavaScript for most sites but with delays: rendering can take hours or days rather than minutes for HTML, delaying content discovery (Google, 2024).

- Client-side rendered SPAs (Single Page Applications) face 30% indexing challenges compared to server-side rendered or hybrid approaches (Search Engine Journal, 2024).

- Implementing server-side rendering (SSR) or static generation improves indexing by 40-60% for JavaScript frameworks compared to pure client-side rendering (Next.js benchmarks, 2024).

- Critical content loaded via JavaScript that takes >5 seconds to render may not be indexed, as Googlebot has rendering time limits (Google, 2024).

International SEO and Hreflang

- 58% of international websites have hreflang implementation errors, causing search engines to show wrong language/region versions to users (Ahrefs, 2024).

- Proper hreflang implementation increases international organic traffic by 20-40% by ensuring users see appropriate language/region versions (SEMrush, 2024).

- Self-referencing hreflang errors affect 34% of international sites, where pages don't include themselves in hreflang annotations (Screaming Frog, 2024).

- Country-code top-level domains (ccTLDs) perform 15% better for geo-targeting than subdirectories or subdomains in most cases (Moz, 2024).

URL Structure and Site Architecture

- Short, descriptive URLs rank 15% better on average than long, parameter-heavy URLs due to better crawlability and user experience (Backlinko, 2024).

- Clean URL structures improve CTR by 25% as users trust readable URLs more than cryptic ones with session IDs or parameters (BrightEdge, 2024).

- Canonical tag implementation errors affect 40% of websites, causing duplicate content issues and link equity dilution (Screaming Frog, 2024).

- Fixing duplicate content through canonicalization increases rankings 8-15% by consolidating ranking signals to preferred versions (Moz, 2024).

XML Sitemaps and Robots.txt

- Websites with properly optimized XML sitemaps get new content indexed 3-5x faster than sites without sitemaps or with bloated sitemaps (Google, 2024).

- 45% of XML sitemaps contain errors including broken URLs, blocked URLs, or non-canonical URLs that reduce sitemap effectiveness (Screaming Frog, 2024).

- Robots.txt misconfiguration blocks critical resources on 23% of websites, preventing proper crawling or rendering of pages (Google Search Console data, 2024).

- Cleaning up XML sitemaps by removing low-quality URLs improves crawl efficiency by 35-50%, allowing Googlebot to focus on valuable content (SEMrush, 2024).

Core Web Vitals and Page Experience

- Pages passing all three Core Web Vitals thresholds rank 12% higher on average than pages failing one or more metrics (Google, 2024).

- Only 39% of websites achieve "Good" Core Web Vitals scores across all three metrics (LCP, CLS, and FID, which Google replaced with INP in March 2024), creating opportunities for optimized sites (Google CrUX Report, 2024).

- Improving Core Web Vitals from "Poor" to "Good" increases conversions by 20-40% through better user experience (Google, 2024).

- Cumulative Layout Shift (CLS) issues affect 62% of websites, primarily due to ads, embeds, or images without dimensions (Screaming Frog, 2024).

Log File Analysis and Crawl Monitoring

- Log file analysis reveals that 40-50% of crawl budget is typically wasted on low-value pages, pagination, or duplicate content (Botify, 2024).

- Websites monitoring crawl behavior see 25% better indexing efficiency by identifying and fixing crawl waste issues (Oncrawl, 2024).

- 4xx and 5xx server errors affect 18% of all crawled pages on average, wasting crawl budget and preventing indexing (Google Search Console data, 2024).

- Implementing crawl optimization based on log analysis increases indexed pages by 30-45% by removing crawl barriers (Botify, 2024).

Technical Audit Findings

- The average website has 127 technical SEO issues ranging from minor to critical, with Fortune 500 companies averaging 85 issues (Screaming Frog, 2024).

- 67% of websites have broken internal links, creating poor user experience and wasting link equity (Screaming Frog, 2024).

- Title tag issues (missing, duplicate, or too long) affect 52% of pages across all websites, impacting both rankings and CTR (Moz, 2024).

- Meta description problems affect 61% of pages, including missing descriptions, duplicates, or improperly formatted descriptions (SEMrush, 2024).

Detailed Key Insights and Analysis

Crawl Budget as Critical Resource Requiring Strategic Management

The finding that average websites waste 30-40% of crawl budget on low-value pages reveals a fundamental inefficiency that limits most sites' SEO potential. Crawl budget—the number of pages Googlebot crawls on your site within a given timeframe—is finite and must be allocated strategically to maximize indexing of valuable content.

Google doesn't crawl every page on every site daily or even weekly. For small sites (under 1,000 pages), this typically isn't problematic as Google crawls comprehensively. However, sites with thousands or millions of pages face crawl budget constraints where poor allocation prevents important pages from being crawled, indexed, and ranked.

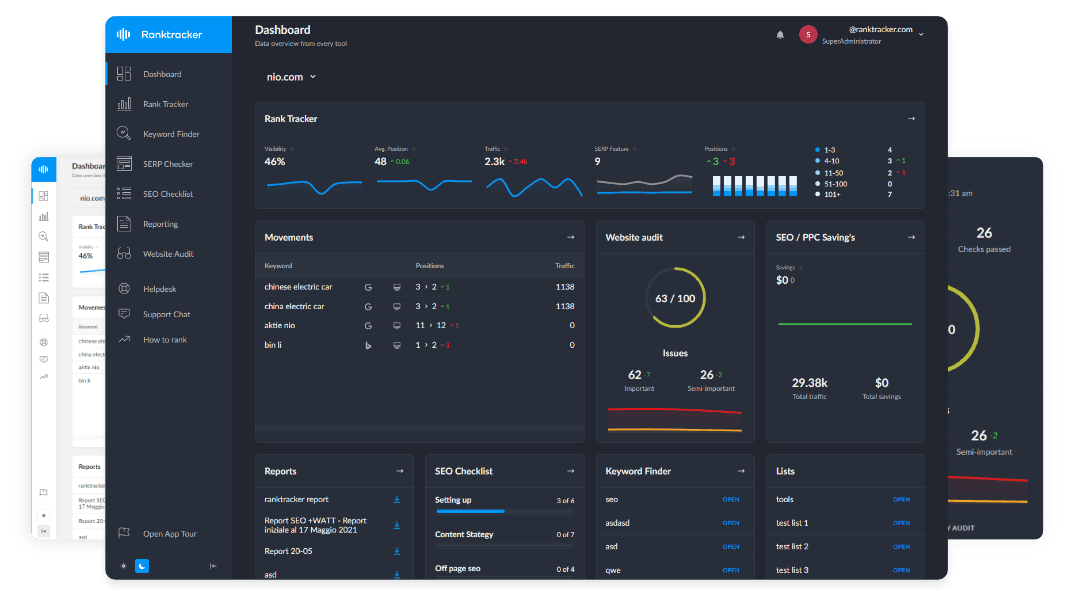

The All-in-One Platform for Effective SEO

Behind every successful business is a strong SEO campaign. But with countless optimization tools and techniques out there to choose from, it can be hard to know where to start. Well, fear no more, cause I've got just the thing to help. Presenting the Ranktracker all-in-one platform for effective SEO

We have finally opened registration to Ranktracker absolutely free!

Create a free accountOr Sign in using your credentials

The 25-35% increase in indexed pages and 15-20% traffic gains from crawl budget optimization demonstrate significant untapped potential on most sites. These improvements occur because optimization directs Googlebot toward high-value content that wasn't being crawled regularly, enabling those pages to be indexed, ranked, and to drive traffic.

Common crawl budget waste sources include:

Duplicate content: Product variations, filter/sort pages, printer-friendly versions, and URL parameters creating infinite duplicate content consume massive crawl resources without adding value.

Low-value pages: Tag pages with minimal content, empty category pages, outdated archives, and similar pages that shouldn't rank competitively waste crawl budget.

Redirect chains: Multi-hop redirects (A→B→C→D), where Googlebot must follow several redirects to reach the final content, waste crawl budget on intermediate URLs.

Infinite spaces: Calendar pages, pagination without limits, faceted navigation creating millions of combinations—these can trap crawlers in infinite content discovery loops.

Session IDs and parameters: URLs with session identifiers or tracking parameters create duplicate URL variations that waste crawl resources.
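One quick way to measure this kind of waste is to normalize a list of crawled URLs and group the ones that collapse to the same address. The Python sketch below is a minimal illustration, not a production tool. IGNORED_PARAMS is a hypothetical list of parameters assumed not to change page content, and every site needs its own audited list before acting on the results.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters assumed NOT to change page content on this site.
IGNORED_PARAMS = {
    "sessionid", "sid", "gclid", "fbclid",
    "utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content",
}


def normalize_url(url: str) -> str:
    """Strip ignored parameters, sort the rest, and drop the fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()  # ?a=1&b=2 and ?b=2&a=1 should not count as different pages
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", urlencode(kept), "")
    )


def group_variants(urls: list[str]) -> dict[str, list[str]]:
    """Map each normalized URL to the crawled variants that collapse into it."""
    groups: dict[str, list[str]] = {}
    for url in urls:
        groups.setdefault(normalize_url(url), []).append(url)
    # Only groups with more than one variant represent duplicate crawling.
    return {clean: variants for clean, variants in groups.items() if len(variants) > 1}


crawled = [
    "https://example.com/shoes?utm_source=news&color=red",
    "https://example.com/shoes?color=red&sessionid=abc123",
    "https://example.com/shoes?color=red",
    "https://example.com/bags",
]
print(group_variants(crawled))
```

Each group with more than one entry is a candidate for canonical tags, redirects, or linking only to the clean URL. Sorting the remaining parameters also catches variants that differ only in parameter order.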

73% of websites having orphaned pages demonstrates widespread site architecture problems. Orphaned pages—pages with no internal links—can only be discovered through XML sitemaps, external links, or if previously crawled. They receive minimal crawl frequency and struggle to rank because lack of internal links signals low importance.

Pages requiring more than 3 clicks from the homepage being 75% less likely to be crawled frequently reflects both crawl budget allocation and ranking signal distribution. Google interprets click depth as an importance indicator: pages many clicks from the homepage appear less important than those 1-2 clicks away. Additionally, deeper pages receive less PageRank flow through internal links.
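Click depth and orphaned pages can both be computed from a crawl's internal link graph. The sketch below assumes the links have already been extracted into a dictionary that maps each URL to the URLs it links to. The helper names and sample paths are hypothetical. A breadth-first search from the homepage gives each page's minimum click depth, and sitemap URLs with no inbound internal links are reported as orphans.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from the homepage."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def crawl_audit(links, home, sitemap_urls, max_depth=3):
    """Return (orphaned sitemap URLs, pages deeper than max_depth clicks)."""
    # Inbound links from other pages only; a self-link does not rescue an orphan.
    linked_to = {t for src, targets in links.items() for t in targets if t != src}
    orphans = sorted(u for u in sitemap_urls if u != home and u not in linked_to)
    depths = click_depths(links, home)
    too_deep = sorted(u for u, depth in depths.items() if depth > max_depth)
    return orphans, too_deep


# Hypothetical internal link graph extracted from a crawl.
links = {
    "/": ["/shoes", "/blog"],
    "/shoes": ["/shoes/running"],
    "/shoes/running": ["/shoes/running/trail"],
    "/shoes/running/trail": ["/shoes/running/trail/model-x"],
}
sitemap = ["/", "/shoes", "/blog", "/shoes/running", "/shoes/running/trail",
           "/shoes/running/trail/model-x", "/old-landing-page"]
orphans, too_deep = crawl_audit(links, "/", sitemap)
print("Orphaned:", orphans)
print("More than 3 clicks deep:", too_deep)
```

Here /old-landing-page is only discoverable through the sitemap, and the product page sits four clicks deep. Both are candidates for new internal links from hub pages.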

Strategic crawl budget optimization:

Eliminate duplicate content: Implement canonical tags and consolidate variations through redirects or noindex directives. (Search Console's URL Parameters tool, once used for this, was retired in 2022.)

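Block low-value crawling: Use robots.txt to prevent crawling of admin areas, filtered/sorted variations, and other non-indexable content, and keep development environments behind authentication. Note that robots.txt controls crawling, not indexing: a blocked URL can still be indexed if other pages link to it, and Google cannot see a noindex directive on a page it is not allowed to crawl.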

Optimize internal linking: Ensure important pages are 2-3 clicks from homepage maximum, create hub pages that distribute links efficiently, eliminate orphaned pages.

Fix technical errors: Resolve 404 errors, redirect chains, server errors—each wastes crawl budget when encountered.

Prioritize fresh content: When publishing new content, update XML sitemaps immediately, request indexing for key URLs through Search Console's URL Inspection tool, and ensure prominent internal linking to new pages.

Monitor crawl behavior: Use Search Console crawl stats and log file analysis to understand what Google crawls, identify waste, and adjust strategy accordingly.
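For log file analysis, a useful first pass is counting which sections and status codes Googlebot requests. The sketch below assumes access logs in the common Apache/Nginx "combined" format. It groups hits by top-level path segment and lumps all parameterized URLs together, which is an illustrative grouping rule, not a standard. It matches on the user-agent string only, which can be spoofed, so confirm genuine Googlebot traffic (for example, with a reverse DNS lookup) before drawing conclusions.

```python
import re
from collections import Counter

# Assumed "combined" log format:
# IP ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_summary(lines):
    """Count Googlebot hits per site section and per HTTP status code."""
    sections, statuses = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # unparseable line or another user agent
        path = match.group("path")
        if "?" in path:
            section = "(parameterized URLs)"
        else:
            section = "/" + path.lstrip("/").split("/", 1)[0]
        sections[section] += 1
        statuses[match.group("status")] += 1
    return sections, statuses


UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [10/Mar/2025:10:00:00 +0000] "GET /shop?sort=price HTTP/1.1" 200 5123 "-" "{UA}"',
    f'66.249.66.1 - - [10/Mar/2025:10:00:05 +0000] "GET /blog/post-1 HTTP/1.1" 200 8001 "-" "{UA}"',
    f'66.249.66.1 - - [10/Mar/2025:10:00:09 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{UA}"',
    '203.0.113.7 - - [10/Mar/2025:10:01:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 8001 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
sections, statuses = googlebot_summary(sample)
print(sections.most_common())
print(statuses)
```

A high share of parameterized URLs or 4xx/5xx responses in these counts points at the crawl waste described above.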

The compound effects of crawl optimization extend beyond immediate indexing improvements. Better crawl efficiency means Google discovers content updates faster, recognizes site freshness signals more readily, and allocates more crawl resources to important pages—creating positive feedback loops that accelerate SEO progress.

Site Speed as Foundational Technical Factor Affecting All Outcomes

Pages loading under 2 seconds ranking 15-20% higher than 5+ second pages demonstrates that site speed is a confirmed ranking factor with measurable impact. Google has explicitly stated that speed influences rankings, and empirical data supports this with a clear correlation between fast loads and better positions.

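The 7% conversion decrease per second of delay shows how quickly slow pages lose business. The 35% gap between 5-second and 1-second loads matches 5 × 7%, while the four extra seconds between them give 28% at a flat 7% per second, or about 25% if each second compounds. These figures therefore point to a steep, roughly linear penalty rather than an exponential one. Either way, users don't just become slightly less likely to convert with slow sites; they abandon in large numbers, and this conversion impact often exceeds the ranking impact in business value.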
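To check the arithmetic, the sketch below compares two readings of "7% per second": a flat 7 percentage points lost per extra second, and a compounding 7% of the remaining conversions lost per extra second. Both are assumptions about how the statistic applies, because the source doesn't say which it means.

```python
def conversion_loss(extra_seconds: int, per_second: float = 0.07) -> tuple[float, float]:
    """Return (linear loss, compounded loss) as fractions of baseline conversions."""
    linear = per_second * extra_seconds
    compounded = 1 - (1 - per_second) ** extra_seconds
    return linear, compounded


for seconds in range(1, 5):
    lin, comp = conversion_loss(seconds)
    print(f"{seconds} s slower: linear {lin:.1%}, compounded {comp:.1%}")
```

Going from a 1-second to a 5-second load adds four seconds, which gives 28.0% under the linear reading and 25.2% under the compounding one. Compounding grows slightly more slowly than the linear case, not faster.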

82% of top-ranking pages scoring 90+ on PageSpeed Insights versus only 43% for positions 11-20 demonstrates that successful SEO systematically prioritizes speed. This correlation suggests both direct ranking benefits and indirect advantages through better user engagement metrics when sites load quickly.

The dramatic improvements from specific optimizations—server-side caching reducing loads 40-70%, CDN reducing loads 30-50%, image optimization reducing page weight 50-70%—reveal that speed optimization isn't mysterious art but systematic engineering with predictable returns.

Site speed affects SEO through multiple mechanisms:

Direct ranking factor: Google confirmed speed influences rankings, particularly on mobile where speed weighs 2.3x more heavily than desktop.

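Core Web Vitals: Speed directly determines LCP (Largest Contentful Paint) and influences INP (Interaction to Next Paint), which replaced FID (First Input Delay) as a Core Web Vital in March 2024. Both are part of Google's page experience signals.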

User behavior signals: Fast sites have lower bounce rates, longer session durations, and higher engagement—positive signals that indirectly influence rankings.

Crawl efficiency: Faster server responses allow Googlebot to crawl more pages within crawl budget, improving indexing coverage.

Mobile experience: Speed is critical for mobile users on variable networks, and mobile-first indexing makes mobile experience primary.

Speed optimization priority framework:

High-impact, moderate-effort optimizations (do first):

- Image compression and lazy loading

- Enable server-side caching

- Implement CDN for static assets

- Minify CSS and JavaScript

- Enable compression (Gzip/Brotli)

High-impact, high-effort optimizations (second phase):

- Optimize database queries

- Implement advanced caching strategies

- Upgrade hosting infrastructure

- Refactor inefficient code

- Implement critical CSS extraction

Moderate-impact optimizations (ongoing):

- Browser caching configuration

- Font loading optimization

- Third-party script management

- Reduce HTTP requests

- Optimize resource loading order
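Of these, compression is the easiest to see in numbers. In practice the web server or CDN compresses responses. The Python sketch below only illustrates the size reduction on repetitive markup, using the standard-library gzip module. Brotli, which usually compresses text further, needs a third-party package and is omitted. The sample HTML is made up.

```python
import gzip


def gzip_savings(body: str, level: int = 6) -> tuple[int, int, float]:
    """Return (original bytes, gzipped bytes, fraction saved) for a text response."""
    raw = body.encode("utf-8")
    packed = gzip.compress(raw, compresslevel=level)
    return len(raw), len(packed), 1 - len(packed) / len(raw)


# Made-up, repetitive markup; real HTML, CSS, and JavaScript are also highly repetitive.
html = "<li class='product-card'><a href='/p/123'>Trail shoe</a></li>\n" * 200
raw_size, gz_size, saved = gzip_savings(html)
print(f"{raw_size} bytes -> {gz_size} bytes ({saved:.0%} smaller)")
```

Images are already compressed formats, so they gain little from gzip. That is why image optimization appears as a separate item.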

The business case for speed optimization extends beyond SEO: faster sites convert better (7% per second), reduce bounce rates (15-30% improvement typical), improve user satisfaction, and decrease infrastructure costs through reduced server load. Speed optimization ROI often exceeds pure SEO benefits.

HTTPS as Non-Negotiable Security and Ranking Requirement

100% of top 10 ranking pages using HTTPS demonstrates that HTTPS has evolved from optional enhancement to mandatory baseline. Sites without HTTPS face both ranking disadvantages and user trust issues that compound to make HTTP obsolete for any site pursuing competitive rankings.

The 5% ranking improvement from HTTPS adoption might seem modest, but in competitive niches, 5% determines whether pages rank #2 or #5, #8 or #12—differences that dramatically affect traffic. Combined with user trust and conversion benefits, HTTPS adoption ROI is unquestionable.

94% of users not proceeding past security warnings reveals HTTPS as a conversion factor, not just a ranking factor. Chrome and other browsers prominently warn users about HTTP sites, particularly those with form fields. These warnings create massive friction that prevents users from engaging with HTTP sites.

Sites without HTTPS experiencing 23% higher bounce rates quantifies user trust impact. When browsers display "Not Secure" in the address bar, users instinctively avoid interacting, particularly for transactions, form submissions, or any sensitive activity.

HTTPS implementation considerations:

Certificate selection: Use reputable certificate authorities (Let's Encrypt offers free certificates, while paid options provide additional features like extended validation).

Proper implementation: Ensure entire site uses HTTPS (no mixed content warnings), implement HSTS headers, update internal links to HTTPS, and redirect HTTP to HTTPS with 301 redirects.

Search engine notification: Update Google Search Console and Bing Webmaster Tools with HTTPS version, submit updated XML sitemap, monitor for any indexing issues during migration.

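Performance optimization: HTTPS adds minimal overhead on modern servers. Because browsers only support HTTP/2 over encrypted connections, HTTPS is also the route to HTTP/2, which is often faster than HTTP/1.1. Ensure proper caching headers and use a CDN with HTTPS support.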

Ongoing maintenance: Monitor certificate expiration (automate renewal), check for mixed content issues periodically, ensure new content uses HTTPS URLs.
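The redirect and HSTS points can be sketched as a small piece of WSGI middleware in Python. This is a minimal illustration under stated assumptions. It assumes TLS terminates at the application server; behind a proxy or load balancer you would check a forwarded-protocol header instead. It also assumes hosts are plain hostnames and HTTPS runs on the default port. Most sites configure this in the web server or CDN rather than in application code.

```python
from urllib.parse import quote

ONE_YEAR = 31536000  # HSTS max-age in seconds


def https_location(environ) -> str:
    """Rebuild the requested URL with an https scheme."""
    host = environ.get("HTTP_HOST") or environ.get("SERVER_NAME", "")
    host = host.split(":", 1)[0]  # assumes a plain hostname; drops a port like :80
    path = environ.get("SCRIPT_NAME", "") + environ.get("PATH_INFO", "")
    url = f"https://{host}{quote(path, safe='/;=,@:') or '/'}"
    query = environ.get("QUERY_STRING", "")
    return f"{url}?{query}" if query else url


def https_middleware(app, max_age: int = ONE_YEAR):
    """WSGI middleware: 301 plain-HTTP requests to HTTPS, add HSTS to HTTPS responses."""
    def wrapped(environ, start_response):
        if environ.get("wsgi.url_scheme") != "https":
            start_response("301 Moved Permanently",
                           [("Location", https_location(environ)), ("Content-Length", "0")])
            return [b""]

        def start_with_hsts(status, headers, exc_info=None):
            # Add includeSubDomains only once every subdomain serves HTTPS.
            headers = list(headers) + [("Strict-Transport-Security", f"max-age={max_age}")]
            return start_response(status, headers, exc_info)

        return app(environ, start_with_hsts)
    return wrapped
```

Wrapping an existing application (app = https_middleware(app)) redirects every URL path-for-path, which is what preserves rankings during migration. HSTS is only sent over HTTPS because browsers ignore it on plain HTTP.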

The migration from HTTP to HTTPS requires careful execution to prevent ranking losses:

- Implement HTTPS on entire site

- Test thoroughly (no mixed content warnings, all resources load properly)

- Implement 301 redirects from HTTP to HTTPS for all URLs

- Update internal links to use HTTPS

- Update XML sitemaps to HTTPS URLs

- Resubmit sitemaps and notify search engines

- Monitor Search Console for crawl errors or indexing issues

- Update canonical tags to HTTPS versions

- Update structured data to reference HTTPS URLs

- Monitor rankings during transition (temporary fluctuations normal)
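The sitemap step is easy to verify automatically. The sketch below parses a standard sitemap with Python's xml.etree.ElementTree and lists any loc entries that still use http://. It assumes a plain urlset file rather than a sitemap index, and the sample URLs are made up.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def non_https_urls(sitemap_bytes: bytes) -> list[str]:
    """List <loc> entries in a urlset sitemap that do not use https://."""
    root = ET.fromstring(sitemap_bytes)
    locs = [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]
    return [url for url in locs if not url.lower().startswith("https://")]


sitemap = b"""<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>http://example.com/old-page</loc></url>
</urlset>"""
print(non_https_urls(sitemap))
```

The same pattern extends to canonical tags and structured data: collect every self-referencing URL and flag anything that still starts with http://.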

Most sites complete HTTPS migrations within 2-4 weeks with full ranking recovery in 4-8 weeks. Proper implementation prevents ranking losses and delivers 5% improvement plus trust and conversion benefits.

Structured Data as Competitive Visibility Advantage

Pages with schema markup appearing in rich results 43% more often demonstrates that structured data is a high-leverage optimization. Rich results (enhanced SERP displays showing ratings, images, prices, FAQs, or other structured information) command attention and drive higher click-through rates than standard blue links.

Rich results receiving 30% higher CTR quantifies the visibility advantage. Rich results occupy more SERP real estate, provide more information, and appear more authoritative than standard results, driving higher clicks even at equivalent ranking positions.

Only 31% of websites implementing any structured data creates massive competitive opportunity. Most sites don't implement schema markup despite clear benefits, meaning sites that do implement comprehensive markup gain advantages over 69% of competition.

The specific improvements from targeted schema types validate implementation prioritization:

Product schema (25-35% CTR increase): Shows ratings, prices, availability in search results, critical for e-commerce visibility.

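FAQ schema (58% featured snippet increase): Associated with higher featured snippet capture for question queries, providing position zero visibility. Since August 2023, Google shows FAQ rich results only for well-known, authoritative government and health websites.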

Local business schema (27% local pack improvement): Strengthens local search visibility through enhanced business information.

Schema markup implementation framework:

Priority schema types by site type:

E-commerce sites:

- Product schema (required for rich results)

- Review/Rating schema

- Offer schema (pricing, availability)

- BreadcrumbList schema

- Organization schema

Local businesses:

- LocalBusiness schema

- Review schema

- Opening hours specification

- GeoCoordinates

- Service schema

Content sites:

- Article schema

- Person schema for authors (via the Article author property)

- Organization schema

- BreadcrumbList schema

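- FAQPage schema (FAQ rich results are now limited to authoritative government and health sites)

- HowTo schema (Google no longer shows How-To rich results)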

Service businesses:

- Service schema

- LocalBusiness schema

- AggregateRating schema

- Review schema

- FAQPage schema

Implementation best practices:

JSON-LD format: Google recommends JSON-LD over Microdata or RDFa for easier implementation and maintenance.

Comprehensive markup: Implement all applicable properties, not just minimum requirements—more complete markup provides more opportunities for rich results.

Accuracy: Ensure markup accurately reflects page content. Misleading markup can result in a manual action and loss of rich result eligibility.

Validation: Use Google's Rich Results Test tool to verify implementation, identify errors, and preview rich result appearance.

Monitoring: Track rich result appearance in Search Console, monitor CTR improvements, and identify opportunities for additional markup types.
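As an illustration of JSON-LD output, the hypothetical helper below builds Product markup with a nested Offer and an optional AggregateRating. The property names are standard schema.org properties. The function, its parameters, and the sample values are assumptions, and any values you emit must match what is visible on the page. Run the output through the Rich Results Test before deploying.

```python
import json


def product_jsonld(name, sku, price, currency, in_stock, rating=None, review_count=None):
    """Hypothetical helper: build a Product JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": "https://schema.org/InStock" if in_stock
                            else "https://schema.org/OutOfStock",
        },
    }
    # Only include ratings that are actually shown on the page.
    if rating is not None and review_count:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        }
    # Escape "</" so a value can never close the <script> element early.
    payload = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(product_jsonld("Trail Runner X", "TRX-42", 89.5, "USD", True, 4.6, 212))
```

Generating the markup from the same data that renders the visible page is a simple way to keep the two in sync.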

Common schema implementation mistakes:

Marking up invisible content: Schema must describe content actually visible to users on the page.

Incorrect nesting: Improper parent-child relationships between schema types confuse search engines.

Missing required properties: Incomplete markup prevents rich result eligibility.

Multiple competing markups: Different schema types on same page without proper relationships create confusion.

Outdated markup: Using deprecated schema types instead of current recommendations.
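Missing required properties can be caught before deployment with a simple check. The sketch below encodes one rule as we understand Google's product snippet documentation: a Product needs a name plus at least one of offers, review, or aggregateRating. Treat that rule as an assumption and confirm it against Google's current documentation, since requirements change. The sketch also assumes a single top-level JSON-LD object rather than an @graph array.

```python
import json


def product_markup_problems(jsonld_text: str) -> list[str]:
    """Flag missing properties on a single top-level Product JSON-LD object.

    Assumed rule (check Google's current product snippet docs): a Product needs
    a name plus at least one of offers, review, or aggregateRating.
    """
    data = json.loads(jsonld_text)
    if data.get("@type") != "Product":
        return ["@type is not Product"]
    problems = []
    if not data.get("name"):
        problems.append("missing name")
    if not any(key in data for key in ("offers", "review", "aggregateRating")):
        problems.append("missing offers, review, or aggregateRating")
    return problems


print(product_markup_problems('{"@type": "Product", "name": "Trail Runner X"}'))
```

A check like this fits into a build or CI step. The Rich Results Test remains the authoritative validator.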

The ROI of structured data implementation is exceptional: relatively low implementation cost (few hours to few days depending on site complexity), zero ongoing costs, and measurable traffic improvements of 15-30% for pages achieving rich results. Combined with 43% increased rich result appearance rates, schema markup represents one of the highest-ROI technical optimizations available.

Mobile-First Indexing Creating Technical Parity Requirements

100% of websites being on mobile-first indexing fundamentally changed technical SEO priorities. Sites must ensure technical excellence on mobile versions because Google predominantly uses mobile for all indexing and ranking decisions—desktop technical optimization is secondary.

Sites with mobile-desktop content parity issues ranking 40-60% lower for affected pages reveals the severe penalty for mobile content gaps. Under mobile-first indexing, content present on desktop but missing from mobile effectively doesn't exist for ranking purposes because Google doesn't see it in the mobile version used for indexing.

47% of websites having mobile-specific technical errors demonstrates widespread mobile technical debt. Common issues include:

Blocked resources: CSS, JavaScript, or images blocked by robots.txt only on mobile versions.

Unplayable content: Flash or other plugins that don't work on mobile devices.

Faulty redirects: Incorrect mobile redirects sending users or crawlers to wrong pages.

Mobile usability issues: Text too small, clickable elements too close, content wider than screen.

Slow mobile performance: Desktop optimized but mobile neglected, creating poor mobile experience.

Responsive design improving rankings 12-18% versus separate mobile URLs validates Google's recommendation for responsive over m.subdomain approaches. Responsive design simplifies technical implementation, prevents content parity issues, consolidates link signals, and aligns perfectly with mobile-first indexing.

Mobile-first technical requirements:

Content equivalence: All content on desktop must appear on mobile, using expandable sections or tabs if necessary for UX.

Resource accessibility: Googlebot must access all CSS, JavaScript, and images on mobile—no mobile-specific blocking.

Structured data parity: All schema markup on desktop must also appear on mobile versions.

Meta tag consistency: Title tags, meta descriptions, canonical tags, robots meta tags must match across devices.

Mobile usability: Pass Lighthouse mobile audits, with proper viewport configuration, readable fonts, and adequate tap targets.

Mobile performance: Optimize mobile page speed, achieve good Core Web Vitals on mobile, minimize mobile-specific performance issues.

Testing mobile-first readiness:

Lighthouse mobile audit: Verify mobile usability and identify mobile-specific issues (Google retired its Mobile-Friendly Test in December 2023).

Mobile SERP preview: Check how pages appear in mobile search results.

Mobile rendering: Test mobile Googlebot rendering in Search Console URL Inspection tool.

Content comparison: Manually compare mobile and desktop versions for content parity.

Performance testing: Run PageSpeed Insights on mobile, check Core Web Vitals mobile scores.
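Part of the content comparison can be automated for sites that serve different HTML to mobile and desktop (separate mobile URLs or dynamic serving; responsive sites serve the same HTML to both). The sketch below uses Python's built-in html.parser to pull the title, meta description, meta robots, and canonical from two HTML documents and report any differences. It covers metadata only, not body content. Fetching the pages with mobile and desktop user agents is left out, and the sample markup is made up.

```python
from html.parser import HTMLParser


class HeadMetadata(HTMLParser):
    """Collect title, meta description, meta robots, and canonical from HTML."""

    def __init__(self):
        super().__init__()
        self.found = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and name in ("description", "robots"):
            self.found[name] = (attrs.get("content") or "").strip()
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.found["canonical"] = attrs.get("href") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:  # accumulate raw text; stripped once parsing ends
            self.found["title"] = self.found.get("title", "") + data


def metadata(html: str) -> dict:
    parser = HeadMetadata()
    parser.feed(html)
    parser.close()
    if "title" in parser.found:
        parser.found["title"] = parser.found["title"].strip()
    return parser.found


def parity_differences(desktop_html: str, mobile_html: str) -> dict:
    """Return {field: (desktop value, mobile value)} for every mismatch."""
    desktop, mobile = metadata(desktop_html), metadata(mobile_html)
    fields = sorted(set(desktop) | set(mobile))
    return {f: (desktop.get(f), mobile.get(f)) for f in fields if desktop.get(f) != mobile.get(f)}


desktop = ('<html><head><title>Red Shoes</title>'
           '<meta name="description" content="Buy red shoes online">'
           '<link rel="canonical" href="https://example.com/shoes"></head></html>')
mobile = ('<html><head><title>Red Shoes</title>'
          '<link rel="canonical" href="https://m.example.com/shoes"></head></html>')
print(parity_differences(desktop, mobile))
```

In this sample, the mobile page is missing its meta description and points its canonical at itself. Under mobile-first indexing, Google would index these mobile values.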

The strategic imperative is clear: design and optimize for mobile first, then enhance for desktop. The inverse approach (desktop first, mobile later) creates technical debt that harms rankings regardless of desktop quality. Mobile-first indexing isn't coming; Google announced in 2023 that the switch was complete, making mobile technical excellence mandatory for SEO success.

JavaScript SEO as Critical Modern Technical Challenge

JavaScript-heavy sites losing 15-25% of indexable content when rendering fails reveals the significant risk of JavaScript-dependent content. While Google has improved JavaScript rendering capabilities, delays and failures still occur, particularly for complex JavaScript applications.

Google rendering JavaScript with hours or days of delay versus minutes for HTML creates indexing speed disadvantage for JavaScript sites. New content or updates on HTML sites get indexed quickly, while JavaScript-rendered content may wait days for rendering and indexing, delaying ranking improvements.

Client-side rendered SPAs facing 30% indexing challenges demonstrates particular risk for single-page applications using React, Vue, or Angular with pure client-side rendering. These applications generate all content via JavaScript, creating dependency on Googlebot's JavaScript rendering pipeline that introduces failure points.

Server-side rendering (SSR) or static generation improving indexing 40-60% validates hybrid approaches that render initial HTML server-side while maintaining JavaScript interactivity. Frameworks like Next.js, Nuxt.js, and SvelteKit enable this hybrid approach, delivering JavaScript app benefits without sacrificing SEO.

Critical content loaded via JavaScript taking >5 seconds potentially not being indexed reflects Googlebot's rendering timeout limits. Google allocates limited resources to JavaScript rendering—extremely slow renders may timeout, preventing content indexing.

JavaScript SEO best practices:

For new sites/apps:

- Use server-side rendering (SSR) or static site generation (SSG) for critical content

- Implement progressive enhancement (core content works without JavaScript)

- Avoid pure client-side rendering for content-heavy sites

- Consider hybrid approaches that render server-side initially, then enhance with JavaScript

For existing JavaScript sites:

- Implement dynamic rendering (serve pre-rendered HTML to bots, JavaScript to users) only as a stopgap, since Google describes it as a workaround rather than a long-term solution

- Ensure critical content renders quickly (<5 seconds)

- Test rendering in Search Console URL Inspection tool

- Monitor indexing coverage for JavaScript-dependent pages

- Consider gradual migration to SSR/SSG for critical sections

Universal JavaScript SEO requirements:

- Don't use JavaScript for critical links (use standard <a> elements with href attributes)

- Include metadata (titles, meta descriptions, canonical) in initial HTML

- Implement structured data in initial HTML, not dynamically

- Ensure fast time-to-interactive (TTI) for rendering completion

- Test extensively with JavaScript disabled to identify rendering dependencies

Testing JavaScript rendering:

Search Console URL Inspection: Shows how Googlebot renders the page and identifies rendering errors.

Rich Results Test: Validates structured data visibility after JavaScript rendering.

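Mobile-Friendly Test: Checks whether JavaScript-generated content passes mobile usability checks (Google retired this tool in December 2023; Lighthouse in Chrome DevTools covers similar checks).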

Fetch and Render tools: Third-party tools simulate Googlebot JavaScript rendering.

Manual testing: Disable JavaScript in browser to see what renders without it.
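The "JavaScript disabled" check can also be automated by inspecting the raw HTML a server returns before any script runs. The Python sketch below uses only the standard library's html.parser. The function and class names are hypothetical, and the list of checks is drawn from the universal requirements above. It expects you to supply the raw HTML yourself, for example the body of a plain HTTP fetch made without a browser.

```python
from html.parser import HTMLParser


class InitialHTMLAudit(HTMLParser):
    """Record SEO-critical elements present in raw, unrendered HTML."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.canonical = None
        self.has_json_ld = False
        self.crawlable_links = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (a.get("name") or "").lower() == "description":
            self.meta_description = a.get("content")
        elif tag == "link" and "canonical" in (a.get("rel") or "").lower().split():
            self.canonical = a.get("href")
        elif tag == "script" and a.get("type") == "application/ld+json":
            self.has_json_ld = True
        elif tag == "a" and a.get("href"):
            self.crawlable_links += 1  # a real <a href>, not a JS click handler

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def missing_in_initial_html(html):
    """List which requirements the HTML fails before any JavaScript runs."""
    audit = InitialHTMLAudit()
    audit.feed(html)
    audit.close()
    checks = [
        ("title", bool(audit.title.strip())),
        ("meta description", bool(audit.meta_description)),
        ("canonical", bool(audit.canonical)),
        ("structured data", audit.has_json_ld),
        ("<a href> links", audit.crawlable_links > 0),
    ]
    return [name for name, present in checks if not present]


spa_shell = ('<html><head><title>Shop</title></head><body>'
             '<div id="root"></div><script src="/bundle.js"></script></body></html>')
print(missing_in_initial_html(spa_shell))
# ['meta description', 'canonical', 'structured data', '<a href> links']
```

A typical client-side rendered shell fails most checks because everything except the title is injected later. Comparing this output with the rendered HTML in URL Inspection shows which elements depend on JavaScript.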

The strategic decision for JavaScript implementation depends on site type:

Favor server-side rendering:

- Content-heavy sites (blogs, news, e-commerce)

- Sites requiring maximum SEO performance

- Sites with limited engineering resources for JavaScript SEO complexity

Client-side rendering acceptable (with precautions):

- App-like experiences (dashboards, tools, logged-in areas)

- Sites with minimal SEO content (authentication, private data)

- Sites with engineering resources for proper implementation

The JavaScript SEO landscape continues to evolve. Google improves its rendering capabilities every year, but server-side rendering or static generation still provides the safest approach for critical content that needs reliable indexing.

International SEO Technical Complexity and Hreflang Implementation

Hreflang errors on 58% of international websites demonstrate the technical complexity of international SEO. Hreflang annotations are HTML tags, HTTP headers, or XML sitemap entries that specify the language and region targeting of alternate page versions. They are crucial for international sites but notoriously difficult to implement correctly.

Proper hreflang implementation increases international traffic by 20-40%, which quantifies the opportunity cost of errors. When hreflang works correctly, users see the appropriate language/region version in search results, improving CTR, user experience, and rankings in targeted regions.

Self-referencing errors, which affect 34% of international sites, are the most common mistake: pages fail to include themselves in their own hreflang annotations. Proper implementation requires each page to reference itself plus all alternate versions.

Country-code top-level domains (ccTLDs) perform 15% better for geo-targeting than subdirectories or subdomains, which supports treating ccTLDs as the strongest geo-targeting signal. Example.de signals German targeting more clearly than example.com/de/ or de.example.com.

International SEO technical approaches:

ccTLDs (country-code top-level domains: example.de, example.fr):

- Strongest geo-targeting signal

- Separate hosting possible (optimal server location per region)

- Highest cost (multiple domains, SSL certificates, maintenance)

- Best for: Large international operations with regional teams

Subdirectories (example.com/de/, example.com/fr/):

- Consolidates domain authority

- Simpler management (single domain)

- Lower cost than ccTLDs

- Requires hreflang for proper targeting

- Best for: Most international sites, medium to large operations

Subdomains (de.example.com, fr.example.com):

- Separate hosting possible

- Google may treat subdomains somewhat separately from the main domain

- More complex management than subdirectories

- Best for: Sites with distinct regional operations or different hosting needs

Hreflang implementation requirements:

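Proper syntax: Specify the language (required, ISO 639-1 format) and, optionally, the region (ISO 3166-1 Alpha 2 format), for example hreflang="de" or hreflang="de-AT".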

Self-referencing: Each page must include hreflang to itself plus all alternates.

Bidirectional confirmation: If page A links to page B as alternate, page B must link to page A.

Consistent URLs: Use absolute URLs, maintain consistency across all implementations.

X-default: Include x-default for pages targeting multiple regions or as fallback.
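Every page in a language cluster needs the same complete set of annotations. Generating one shared list and emitting it on every page therefore satisfies the self-referencing, bidirectional, absolute-URL, and x-default rules at once. Below is a minimal Python sketch of that idea. It assumes you already know each version's hreflang code and URL, and the example.com URLs and function name are hypothetical.

```python
from typing import Dict, List, Optional
from urllib.parse import urlparse


def hreflang_links(alternates: Dict[str, str], x_default: Optional[str] = None) -> List[str]:
    """Build the complete hreflang <link> set for one cluster of equivalent pages.

    Emitting this same list on every page in the cluster gives each page its
    self-reference and makes every annotation bidirectional.
    """
    urls = list(alternates.values()) + ([x_default] if x_default else [])
    for url in urls:
        if urlparse(url).scheme not in ("http", "https"):
            raise ValueError(f"hreflang URLs must be absolute: {url!r}")
    tags = [
        f'<link rel="alternate" hreflang="{code}" href="{url}" />'
        for code, url in sorted(alternates.items())
    ]
    if x_default:
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{x_default}" />')
    return tags


cluster = {
    "en-us": "https://example.com/en-us/",
    "de": "https://example.com/de/",
    "de-at": "https://example.com/de-at/",
}
for tag in hreflang_links(cluster, x_default="https://example.com/"):
    print(tag)
```

Generating the set centrally, for example in a shared template, avoids the drift that occurs when each regional page is annotated by hand.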

Common hreflang errors:

Missing self-reference: Page doesn't include hreflang to itself.

Broken bidirectional links: Page A references B, but B doesn't reference A.

Wrong language codes: Using incorrect ISO codes or combining incompatible language-region pairs.

Conflicting signals: Hreflang targets one region while other signals (ccTLD, IP address, content) target different regions.

Incomplete implementation: Some pages have hreflang, others don't, creating inconsistent signals.

Testing and validation:

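Google Search Console: The International Targeting report used to show hreflang errors and warnings, but Google deprecated it in 2022, so hreflang checks now rely on crawlers and dedicated testing tools.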

Hreflang testing tools: Dedicated tools validate syntax, check bidirectional confirmation, identify errors.

Manual verification: Check source code to verify proper implementation on key pages.
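Dedicated hreflang tools run self-reference and bidirectional checks. Once a crawl has collected each page's annotations, both checks reduce to simple lookups. The rough Python sketch below assumes a crawl export shaped as {page_url: {hreflang_code: target_url}}; that input shape and the function name are hypothetical. URLs are compared as exact strings, so inconsistencies such as a missing trailing slash are deliberately reported as well.

```python
from typing import Dict, List


def hreflang_errors(annotations: Dict[str, Dict[str, str]]) -> List[str]:
    """Report missing self-references and missing return links.

    `annotations` maps each crawled page to the hreflang annotations found on it.
    """
    errors = []
    for page, alternates in annotations.items():
        if page not in alternates.values():
            errors.append(f"{page}: missing self-reference")
        for code, target in alternates.items():
            if target == page:
                continue
            return_links = annotations.get(target)
            if return_links is None:
                errors.append(f"{page}: {code} -> {target} was not crawled")
            elif page not in return_links.values():
                errors.append(f"{page}: {code} -> {target} has no return link")
    return errors


crawl = {
    "https://example.com/en/": {
        "en": "https://example.com/en/",
        "de": "https://example.com/de/",
    },
    # The German page forgot to point back at the English version.
    "https://example.com/de/": {"de": "https://example.com/de/"},
}
for error in hreflang_errors(crawl):
    print(error)
```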

International SEO technical complexity extends beyond hreflang to include:

- Proper canonical tags (preventing cross-language duplicate content issues)

- Language-specific XML sitemaps

- Geo-targeting settings in Search Console

- Localized content (not just translation, but cultural adaptation)

- Regional server hosting for performance

- Local backlink building for regional authority

The technical foundation of international SEO determines whether expensive localization and regional marketing investments can succeed. Proper technical implementation ensures users see appropriate versions, search engines understand targeting, and regional rankings reflect localization efforts.

Technical Audit Findings Revealing Widespread Issues

The average website has 127 technical SEO issues, ranging from minor to critical. This shows that technical debt accumulates naturally without systematic auditing and maintenance. Even Fortune 500 companies with substantial resources average 85 issues, so technical excellence requires ongoing attention regardless of organization size.

Broken internal links on 67% of websites reveal a fundamental site maintenance failure. Broken links create poor user experience, waste link equity, and signal low quality to search engines. Their ubiquity suggests that most sites lack systematic monitoring and repair processes.

Title tag issues (missing titles, duplicates, or improper length) affect 52% of pages, demonstrating widespread neglect of basic on-page SEO. Title tags are important ranking signals and CTR influencers, yet half of all pages have title tag problems that harm both rankings and click-through rates.

Meta description problems affecting 61% of pages reveal similar gaps in basic optimization. Meta descriptions aren't direct ranking factors, but they can significantly influence CTR. Missing or duplicate descriptions therefore waste opportunities to win clicks from search results.
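Missing and duplicate titles or descriptions are straightforward to find in a crawl export by grouping pages on the text itself. The minimal Python sketch below assumes rows of (url, title, meta_description), such as a crawler's CSV export would provide. The case-insensitive comparison is this sketch's own choice, and length checks are left out because recommended lengths vary.

```python
from collections import defaultdict


def title_and_description_issues(pages):
    """pages: iterable of (url, title, meta_description); None or "" means missing."""
    issues = {"missing_title": [], "duplicate_title": [],
              "missing_description": [], "duplicate_description": []}
    groups = {"title": defaultdict(list), "description": defaultdict(list)}
    for url, title, description in pages:
        for field, text in (("title", title), ("description", description)):
            text = (text or "").strip()
            if text:
                groups[field][text.lower()].append(url)  # case-insensitive grouping
            else:
                issues[f"missing_{field}"].append(url)
    for field, by_text in groups.items():
        for urls in by_text.values():
            if len(urls) > 1:
                issues[f"duplicate_{field}"].extend(urls)
    return issues


crawl_rows = [
    ("/a", "Blue Widgets", "Buy blue widgets online"),
    ("/b", "Blue Widgets", ""),
    ("/c", None, "Buy blue widgets online"),
]
print(title_and_description_issues(crawl_rows))
```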

Common technical issues by category:

Crawl and indexing issues:

- Orphaned pages (73% of sites)

- Robots.txt blocking important resources (23% of sites)

- XML sitemap errors (45% of sitemaps)

- Redirect chains and loops

- 404 errors and broken links (67% of sites)

- Duplicate content without proper canonicalization (40% of sites)

Performance issues:

- Slow page load times (only 18% achieve <2 second loads)

- Core Web Vitals failures (61% fail at least one metric)

- Unoptimized images

- Render-blocking resources

- Excessive HTTP requests

Mobile issues:

- Mobile usability problems (47% of sites)

- Mobile-desktop content parity issues

- Mobile-specific performance problems

- Blocked resources on mobile

- Improper viewport configuration

Content issues:

- Missing or duplicate title tags (52% of pages)

- Missing or duplicate meta descriptions (61% of pages)

- Thin content pages

- Duplicate content

- Missing alt text on images

Structured data issues:

- No structured data implementation (69% of sites)

- Schema validation errors

- Missing required properties

- Incorrect schema types

International SEO issues:

- Hreflang implementation errors (58% of international sites)

- Language/region targeting problems

- Incorrect canonical tags for international versions

Technical audit priorities:

Critical issues (fix immediately):

- Pages not indexed due to technical problems

- Robots.txt blocking important content

- Broken redirects to important pages

- Major security issues

- Severe mobile usability problems

High-priority issues (fix within 1-2 weeks):

- Duplicate content without canonicalization

- Missing title tags on important pages

- Broken internal links

- Core Web Vitals failures

- Crawl budget waste on large sites

Medium-priority issues (fix within 1 month):

- Meta description optimization

- Image optimization for performance

- Schema markup implementation

- Minor mobile usability issues

- URL structure optimization

Low-priority issues (ongoing optimization):

- Further performance improvements

- Content depth improvements

- Additional structured data

- Enhanced internal linking
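To turn these tiers into dates on an issue tracker, each issue's due date can be derived from the date it was found. The small Python sketch below assumes "1-2 weeks" is tracked at its 14-day upper bound and "1 month" as 30 days. The function name and tuple layout are illustrative, not part of any audit tool.

```python
from datetime import date, timedelta

# Assumed day counts for the tiers above; None means ongoing (no fixed deadline).
DEADLINE_DAYS = {"critical": 0, "high": 14, "medium": 30, "low": None}
PRIORITY_ORDER = ["critical", "high", "medium", "low"]


def schedule(issues, found_on):
    """issues: list of (description, priority).

    Returns (description, priority, due_date) tuples, most urgent first.
    """
    rows = []
    for description, priority in issues:
        days = DEADLINE_DAYS[priority]
        due = None if days is None else found_on + timedelta(days=days)
        rows.append((description, priority, due))
    # Stable sort: issues within the same tier keep their original order.
    return sorted(rows, key=lambda row: PRIORITY_ORDER.index(row[1]))


backlog = [
    ("Missing title tags on key category pages", "high"),
    ("Robots.txt blocks /products/", "critical"),
    ("Add FAQ schema to support pages", "low"),
]
for row in schedule(backlog, date(2024, 1, 1)):
    print(row)
```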

Systematic technical SEO maintenance:

Weekly monitoring: Check Search Console for new errors, monitor Core Web Vitals, review crawl anomalies.

Monthly audits: Run automated crawls with Screaming Frog or similar tools, identify new issues, track issue resolution progress.

Quarterly deep audits: Comprehensive technical review including performance, mobile, international, JavaScript rendering, security.

Continuous improvement: Prioritize issues by impact, fix high-priority issues systematically, prevent new issues through better processes.

The technical SEO landscape requires proactive management rather than reactive firefighting. Sites that systematically audit, prioritize, and fix technical issues gain compound advantages: better crawling enables better indexing, better performance improves rankings and conversions, proper markup increases visibility, and technical excellence signals quality to search engines.

Frequently Asked Questions About Technical SEO

What is technical SEO and why is it important?

Technical SEO encompasses the behind-the-scenes optimizations that help search engines crawl, index, understand, and rank your website effectively. Unlike content SEO (creating quality content) or off-page SEO (building backlinks), technical SEO focuses on website infrastructure, performance, and accessibility factors that enable search engines to interact with your site efficiently.

Core components of technical SEO:

Crawlability: Ensuring search engine bots can discover and access all important pages on your site through:

- Proper robots.txt configuration

- XML sitemap optimization

- Internal linking structure

- URL accessibility

- Elimination of crawl barriers

Indexability: Making sure search engines can index your content properly through:

- Canonical tag implementation

- Duplicate content management

- Proper use of noindex directives

- JavaScript rendering optimization

- Content accessibility

Site architecture: Organizing your website logically for both users and search engines:

- Flat site structure (pages close to homepage)

- Hierarchical organization

- Breadcrumb navigation

- Logical URL structure

- Category and hub pages

Performance: Optimizing technical aspects that affect speed and user experience:

- Page load time optimization

- Server response time

- Core Web Vitals (LCP, INP, CLS; INP replaced FID in March 2024)

- Mobile performance

- Resource optimization

Mobile optimization: Ensuring excellent mobile experience:

- Responsive design

- Mobile-first indexing compliance

- Mobile usability

- Mobile-specific performance

- Touch-friendly interface

Security: Protecting users and search engine trust:

- HTTPS implementation

- Security headers

- Mixed content elimination

- Certificate maintenance

- Security monitoring

Structured data: Helping search engines understand content:

- Schema markup implementation

- Rich result eligibility

- Entity understanding

- Content classification

- Enhanced SERP appearance

Why technical SEO is foundational:

Prerequisite for all other SEO: Even excellent content and strong backlinks can't help if technical issues prevent pages from being crawled, indexed, or understood. Technical SEO creates the foundation that enables all other SEO efforts to work.

Direct ranking impact: Many technical factors are confirmed ranking signals:

- Page speed (confirmed ranking factor)

- HTTPS (confirmed ranking signal)

- Mobile-friendliness (confirmed factor)

- Core Web Vitals (confirmed signals)

- Site security (affects rankings)

Affects indexing coverage: Sites with technical issues lose 15-25% of potential indexing coverage, meaning significant portions of content never get ranked because search engines can't find, access, or process it.

Influences user experience: Technical factors directly affect conversion rates, bounce rates, and user satisfaction:

- Every 1-second page load delay = 7% conversion decrease

- Sites without HTTPS = 23% higher bounce rates

- Mobile usability issues = 67% lower conversion rates

- Core Web Vitals failures = 20-40% worse conversions
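The 7%-per-second figure can be read in two ways, and the difference matters for multi-second delays. The worked Python example below assumes a hypothetical baseline of 1,000 conversions and a 3-second added delay. The source statistic does not specify which reading applies.

```python
def conversions_after_delay(baseline, delay_seconds, loss_per_second=0.07):
    """Estimate conversions after an added load delay under two readings of the 7% figure."""
    # Linear: each second removes 7% of the *original* conversions (floored at zero).
    linear = max(0.0, baseline * (1 - loss_per_second * delay_seconds))
    # Compounded: each second removes 7% of what the previous second left.
    compounded = baseline * (1 - loss_per_second) ** delay_seconds
    return linear, compounded


linear, compounded = conversions_after_delay(1000, 3)
print(round(linear, 1), round(compounded, 1))  # 790.0 804.4
```

The linear reading gives a 21% drop and the compounded reading about 19.6%. For delays of a second or two, the two readings are close.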

Compound advantages: Technical excellence creates virtuous cycles:

- Better crawling → More pages indexed → More ranking opportunities

- Faster performance → Better rankings → More traffic → Better engagement signals

- Proper structure → Better link equity distribution → Stronger page authority

Prevents penalties: Technical issues can trigger manual or algorithmic penalties:

- Intrusive interstitials (ranking penalty)

- Security issues (search warnings)

- Mobile usability problems (mobile ranking impact)

- Duplicate content (content filtering)

Common technical SEO problems and their impact:

Crawl inefficiency (affects 35% of sites):

- Problem: Googlebot wastes 30-40% of crawl budget on low-value pages

- Impact: Important pages not crawled regularly, delayed indexing, missed ranking opportunities

- Solution: Optimize robots.txt, clean XML sitemaps, fix duplicate content

Slow page speed (affects 73% of sites):

- Problem: Pages loading in 5+ seconds versus competitive 2-second loads

- Impact: 15-20% ranking disadvantage, 35% conversion decrease, higher bounce rates

- Solution: Image optimization, caching, CDN, resource minimization

Mobile issues (affects 47% of sites):

- Problem: Mobile usability problems, slow mobile performance, content parity issues

- Impact: 40-60% ranking decrease for affected pages, poor mobile conversions

- Solution: Responsive design, mobile performance optimization, content equivalence

Missing HTTPS (rare but critical):

- Problem: HTTP instead of HTTPS

- Impact: 5% ranking penalty, 23% higher bounce rates, security warnings

- Solution: Implement SSL certificate, migrate to HTTPS properly

No structured data (affects 69% of sites):

- Problem: Missing schema markup

- Impact: Missed rich result opportunities, 30% lower CTR versus rich results

- Solution: Implement appropriate schema types, validate implementation

Who should handle technical SEO:

For small sites (under 1,000 pages):

- Website owner can handle basics with learning

- Consider consultant for initial audit and setup

- Ongoing maintenance manageable in-house

For medium sites (1,000-50,000 pages):

- Dedicated technical SEO role or contractor

- Developer collaboration for implementation

- Regular audits and monitoring essential

For large sites (50,000+ pages):

- Technical SEO team or specialist

- Close developer collaboration

- Enterprise tools for crawling and monitoring

- Continuous optimization program

The bottom line: Technical SEO is the foundation that enables all other SEO efforts to succeed. Without technical excellence, even the best content and strongest backlink profiles can't achieve competitive rankings, because search engines can't properly crawl, index, or understand the content. Technical SEO isn't optional: it's a prerequisite for SEO success. Sites with technical issues lose 15-25% of ranking potential, experience 20-35% lower traffic, and convert 20-40% worse than technically optimized competitors. Investing in technical SEO delivers compound returns: better crawling, faster indexing, stronger rankings, higher traffic, better conversions, and sustainable competitive advantages.

How do I perform a technical SEO audit?

A comprehensive technical SEO audit systematically identifies issues preventing optimal crawling, indexing, and ranking performance. Professional audits examine hundreds of factors across site architecture, performance, mobile optimization, indexing, and user experience to create prioritized action plans.

Phase 1: Preparation and tool setup (Day 1)

Gather access credentials:

- Google Search Console (essential)

- Google Analytics (helpful for traffic analysis)

- Hosting/server access (for log files, server config)

- CMS admin access (for implementation)

- FTP/SFTP access (if needed for files)

Set up audit tools:

Crawling tool (choose one):

- Screaming Frog SEO Spider (most popular, comprehensive)

- Sitebulb (excellent visualization and insights)

- DeepCrawl/Lumar (enterprise sites)

Speed testing tools:

- Google PageSpeed Insights

- GTmetrix

- WebPageTest

Additional tools:

- Google Mobile-Friendly Test (retired in December 2023; use Lighthouse for equivalent checks)

- Google Rich Results Test

- SSL Server Test (for HTTPS)

Phase 2: Crawl and data collection (Day 1-2)

Configure crawler properly:

- Set user-agent to Googlebot

- Respect robots.txt (initially, then crawl without restrictions separately)

- Set appropriate crawl speed (don't overload server)

- Configure crawl depth and page limits for large sites

Run comprehensive site crawl:

- Crawl all URLs (or representative sample for very large sites)

- Extract all technical data (titles, meta, status codes, load times, etc.)

- Render JavaScript if site uses client-side rendering

- Export data for analysis

Collect Search Console data:

- Index coverage report (indexing issues)

- Core Web Vitals report (performance issues)

- Mobile usability report (mobile problems)

- Security issues report (security warnings)

- Manual actions (penalties)

- Coverage report (what's indexed vs. not)

Phase 3: Analyze crawlability and indexability (Day 2-3)

Check robots.txt:

- Is robots.txt accessible (domain.com/robots.txt)?

- Is important content blocked?

- Are resources (CSS, JS, images) blocked?

- Is sitemap referenced in robots.txt?
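These robots.txt questions can be answered programmatically with Python's standard urllib.robotparser module. In the minimal sketch below, a hypothetical robots.txt and URL list are inlined so the example runs offline; in a real audit you would paste in the live file. One caveat applies: urllib.robotparser uses the first matching rule and does not implement Google's wildcard (*, $) handling or longest-match precedence. Treat its results as a first pass and confirm edge cases in Search Console.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; note that it blocks a JavaScript directory.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /assets/js/

Sitemap: https://example.com/sitemap.xml
"""

IMPORTANT_URLS = [
    "https://example.com/products/blue-widget",
    "https://example.com/assets/js/app.js",
    "https://example.com/admin/login",
]


def load_rules(robots_txt):
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def blocked_for_googlebot(parser, urls):
    """Return the URLs that the parsed rules disallow for Googlebot."""
    return [url for url in urls if not parser.can_fetch("Googlebot", url)]


rules = load_rules(ROBOTS_TXT)
print(blocked_for_googlebot(rules, IMPORTANT_URLS))
# ['https://example.com/assets/js/app.js', 'https://example.com/admin/login']
print(rules.site_maps())  # ['https://example.com/sitemap.xml'] (Python 3.8+)
```

The script reports both blocks. Deciding which one is a problem is a judgment call: the /admin/ block is likely intentional, while blocking /assets/js/ can prevent Google from rendering pages correctly.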

Analyze XML sitemaps:

- Do sitemaps exist and are they referenced in robots.txt?

- Are sitemaps accessible to search engines?

- Do they contain only indexable URLs (200 status codes)?

- Are non-canonical URLs included (shouldn't be)?

- Are low-value pages included (pagination, filters)?

- Are sitemaps within the size limits (at most 50,000 URLs and 50MB uncompressed per file)?
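Most of these sitemap checks can be enforced when the sitemap is generated rather than audited afterward. The Python sketch below uses xml.etree.ElementTree and assumes crawl rows of (url, status_code, canonical_url, indexable), where "indexable" means the page carries no noindex directive. The row layout and function name are hypothetical. The sketch keeps only 200-status, self-canonical, indexable URLs and enforces the per-file limits.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50MB uncompressed (52,428,800 bytes)


def build_sitemap(rows):
    """rows: iterable of (url, status_code, canonical_url, indexable)."""
    # Keep only URLs that are live, canonical to themselves, and not noindexed.
    urls = [url for url, status, canonical, indexable in rows
            if status == 200 and indexable and canonical == url]
    if len(urls) > MAX_URLS:
        raise ValueError(f"{len(urls)} URLs: split into several sitemaps plus an index")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    data = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)  # Python 3.8+
    if len(data) > MAX_BYTES:
        raise ValueError("sitemap exceeds 50MB uncompressed")
    return data


crawl = [
    ("https://example.com/", 200, "https://example.com/", True),
    ("https://example.com/?sort=price", 200, "https://example.com/", True),  # non-canonical
    ("https://example.com/old-page", 301, "https://example.com/old-page", True),  # redirect
    ("https://example.com/thank-you", 200, "https://example.com/thank-you", False),  # noindex
]
print(build_sitemap(crawl).decode("utf-8"))
```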

Identify crawl efficiency issues:

- Orphaned pages (no internal links)

- Pages deep in site structure (3+ clicks from homepage)

- Redirect chains (A→B→C→D)

- Infinite spaces (calendars, pagination without limits)

- Duplicate content consuming crawl budget

- 4xx and 5xx errors wasting crawl budget
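Orphaned pages and click depth both come out of a breadth-first search over the internal link graph, starting at the homepage. The Python sketch below assumes two inputs: an adjacency map of internal links from a crawl, and a list of known URLs from sources such as XML sitemaps or analytics (pages a link-following crawl alone cannot find). Function names are illustrative, and the 3-click threshold comes from the list above.

```python
from collections import deque


def click_depths(links, homepage):
    """Breadth-first search: minimum number of clicks from the homepage to each page."""
    depth = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # in BFS the first visit is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def structure_issues(links, known_urls, homepage, deep_threshold=3):
    """Return (orphaned pages, pages at deep_threshold or more clicks)."""
    depth = click_depths(links, homepage)
    orphans = sorted(url for url in known_urls if url not in depth)
    too_deep = sorted(url for url, d in depth.items() if d >= deep_threshold)
    return orphans, too_deep


internal_links = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-1/comments"],
}
sitemap_urls = ["/", "/blog", "/shop", "/blog/post-1", "/blog/post-1/comments", "/old-landing"]
print(structure_issues(internal_links, sitemap_urls, "/"))
# (['/old-landing'], ['/blog/post-1/comments'])
```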

Check indexability:

- Pages blocked by robots.txt or noindex

- Pages with canonical pointing elsewhere

- Duplicate content issues

- Thin content pages (<100 words)

- JavaScript rendering issues (content not in initial HTML)

Phase 4: Analyze site structure and architecture (Day 3)

URL structure analysis:

- Are URLs readable and descriptive?

- Is URL structure logical and hierarchical?

- Are there unnecessary parameters or session IDs?

- Are URLs consistent (lowercase, hyphen-separated)?

- Is HTTPS enforced site-wide?

Internal linking assessment:

- Click depth distribution (how many pages at each click level)

- Orphaned pages count

- Internal link quality (descriptive anchor text)

- Hub pages and link distribution

- Broken internal links

Canonical tag implementation:

- Are canonical tags used correctly?

- Self-referencing canonicals on unique pages?

- Are all variations canonicalized to preferred versions?

- Cross-domain canonicals implemented properly?

Phase 5: Analyze mobile optimization (Day 3-4)

Mobile-first indexing compliance:

- Is site responsive or using separate mobile URLs?

- Content parity between mobile and desktop?

- Resources accessible on mobile (CSS, JS, images not blocked)?

- Structured data present on mobile versions?

Mobile usability:

- Pass Mobile-Friendly Test?

- Text readable without zooming (16px minimum)?

- Tap targets large enough (48x48px minimum)?

- Content fits screen without horizontal scrolling?

- Viewport meta tag configured properly?

Mobile performance:

- Mobile PageSpeed score (target 90+)?

- Mobile Core Web Vitals (all metrics "Good")?

- Mobile-specific performance issues?

- 4G/3G performance acceptable?

Phase 6: Analyze page speed and performance (Day 4)

Core Web Vitals assessment:

- LCP (Largest Contentful Paint) - target <2.5s

- INP (Interaction to Next Paint) - target 200ms or less (INP replaced FID, First Input Delay, as a Core Web Vital in March 2024)

- CLS (Cumulative Layout Shift) - target <0.1

- Percentage of pages passing each metric

- Specific pages failing metrics
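Google assesses each Core Web Vital at the 75th percentile of real-user page loads, then rates it as good, needs improvement, or poor. The Python sketch below applies Google's published thresholds to a list of field samples. It uses a simple nearest-rank percentile, which only approximates how the Chrome UX Report aggregates data. It also includes INP, which replaced FID as a Core Web Vital in March 2024. Function names are hypothetical.

```python
import math

# Google's published thresholds: good if <= first value,
# needs improvement if <= second value, poor above it.
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds; replaced FID in March 2024
    "FID": (100, 300),    # milliseconds; legacy metric
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def p75(samples):
    """Nearest-rank 75th percentile (an approximation of CrUX's aggregation)."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def rate(metric, samples):
    """Rate one metric from field samples using the thresholds above."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    value = p75(samples)
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


print(rate("LCP", [1800, 2100, 2300, 2600]))  # good
print(rate("LCP", [1800, 2100, 3200, 4500]))  # needs improvement
print(rate("CLS", [0.05, 0.3, 0.3, 0.4]))     # poor
```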

PageSpeed analysis:

- Desktop and mobile scores

- Specific optimization opportunities

- Render-blocking resources

- Image optimization needs

- JavaScript/CSS issues

- Server response time

Performance optimization opportunities:

- Unoptimized images (compress, modern formats, lazy load)

- Missing caching (browser, server-side)

- No CDN usage

- Uncompressed resources (Gzip/Brotli)

- Excessive HTTP requests

Phase 7: Analyze structured data and rich results (Day 4-5)

Schema implementation audit:

- Which schema types are implemented?

- Are required properties included?

- Validate with Rich Results Test

- Check for schema errors or warnings

- Opportunities for additional schema types
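A first-pass schema audit can be scripted by extracting every JSON-LD block from a page and checking it against the properties you expect for each type. The Python sketch below uses only the standard library. The expected-property mapping is an assumption you supply; the example is illustrative and is not Google's required-property list for Article. Final validation should still go through the Rich Results Test.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> block."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._buffer = None  # list of text chunks while inside a JSON-LD script

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._buffer is not None:
            self.blocks.append("".join(self._buffer))
            self._buffer = None


def schema_problems(html, expected):
    """expected: {schema_type: [property, ...]} chosen by the auditor."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    extractor.close()
    problems = {}
    for raw in extractor.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            problems.setdefault("invalid JSON", []).append(raw.strip()[:40])
            continue
        if isinstance(data, dict) and "@graph" in data:
            data = data["@graph"]
        for item in data if isinstance(data, list) else [data]:
            if not isinstance(item, dict):
                continue
            types = item.get("@type", [])
            for schema_type in types if isinstance(types, list) else [types]:
                missing = [p for p in expected.get(schema_type, []) if p not in item]
                if missing:
                    problems[schema_type] = missing
    return problems


page = ('<script type="application/ld+json">{"@context": "https://schema.org", '
        '"@type": "Article", "headline": "Technical SEO guide"}</script>')
print(schema_problems(page, {"Article": ["headline", "image", "datePublished"]}))
# {'Article': ['image', 'datePublished']}
```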

Rich result eligibility:

- Which pages eligible for rich results?

- Rich result appearance in SERPs (track in Search Console)

- Opportunities to expand rich result coverage

- Industry-specific schema recommendations

Phase 8: Analyze security and HTTPS (Day 5)

HTTPS implementation:

- Entire site on HTTPS?

- Mixed content issues (HTTP resources on HTTPS pages)?

- HTTP to HTTPS redirects (301, not 302)?

- HSTS headers implemented?

- SSL certificate valid and not expiring soon?
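Mixed content checks can start from the page source: any subresource that an HTTPS page loads over http:// is mixed content. The Python sketch below uses html.parser. Its tag/attribute list covers common subresources and is an assumption, not an exhaustive list. Ordinary <a href> navigation links and canonical/alternate <link> tags are deliberately ignored because browsers do not load them as subresources.

```python
from html.parser import HTMLParser

# Common subresource-loading attributes (illustrative, not exhaustive).
RESOURCE_ATTRS = {
    "img": ("src", "srcset"),
    "source": ("src", "srcset"),
    "script": ("src",),
    "iframe": ("src",),
    "video": ("src", "poster"),
    "audio": ("src",),
    "link": ("href",),
}
# <link> rel values that make the browser fetch the href as a resource.
LINK_RESOURCE_RELS = {"stylesheet", "icon", "preload", "modulepreload", "manifest"}


class MixedContentFinder(HTMLParser):
    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "link":
            rels = set((attr_map.get("rel") or "").lower().split())
            if not rels & LINK_RESOURCE_RELS:
                return  # canonical/alternate links are not fetched as subresources
        for name in RESOURCE_ATTRS.get(tag, ()):
            value = attr_map.get(name)
            if not value:
                continue
            # srcset holds comma-separated "URL descriptor" candidates.
            if name == "srcset":
                candidates = [c.split()[0] for c in value.split(",") if c.strip()]
            else:
                candidates = [value.strip()]
            self.insecure.extend(u for u in candidates if u.lower().startswith("http://"))


def find_mixed_content(html):
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure


page = ('<link rel="canonical" href="http://example.com/">'
        '<link rel="stylesheet" href="http://cdn.example.com/site.css">'
        '<img src="https://example.com/a.png" srcset="http://example.com/a@2x.png 2x">'
        '<script src="//cdn.example.com/lib.js"></script>'
        '<a href="http://partner.example/">Partner</a>')
print(find_mixed_content(page))
# ['http://cdn.example.com/site.css', 'http://example.com/a@2x.png']
```

Protocol-relative URLs (//cdn...) inherit HTTPS, so they are not flagged. The http:// canonical in the sample is not mixed content, but it is a separate HTTPS migration issue worth fixing.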

Security assessment:

- Security warnings in Search Console?

- SSL Server Test score (A or higher)?

- Security headers (Content Security Policy, etc.)?

- Known vulnerabilities in CMS or plugins?

Phase 9: International SEO analysis (if applicable) (Day 5)

Hreflang implementation:

- Hreflang tags present and valid?

- Self-referencing hreflang included?

- Bidirectional confirmation (A references B, B references A)?

- X-default specified for international sites?

Geographic targeting:

- Appropriate domain structure (ccTLD, subdirectory, subdomain)?

- Search Console geo-targeting settings correct?

- Conflicting signals (hreflang vs IP location vs content)?

Phase 10: Create prioritized action plan (Day 6-7)

Categorize issues by severity:

Critical (immediate action required):

- Pages not indexable due to technical problems

- Security issues

- Major mobile usability failures

- Robots.txt blocking important content

High priority (1-2 weeks):

- Core Web Vitals failures

- Duplicate content issues

- Missing or incorrect canonical tags

- Broken redirects to important pages

- Significant crawl budget waste

Medium priority (1 month):

- Performance optimization opportunities

- Schema markup implementation

- Title tag and meta description optimization

- Broken internal links

- Image optimization

Low priority (ongoing optimization):

- Minor performance improvements

- Additional schema types

- Enhanced internal linking

- URL structure refinement

Create implementation roadmap:

- Assign responsibilities

- Set deadlines for each issue category

- Estimate resources required

- Define success metrics

- Schedule follow-up audit (3-6 months)

Audit deliverables:

Executive summary: High-level findings, critical issues, estimated impact, resource requirements.

Detailed findings report: Complete issue list with evidence, impact assessment, and recommendations.

Prioritized action plan: Issues categorized by severity, implementation timeline, resource requirements.

Quick wins list: High-impact, low-effort optimizations for immediate implementation.

Technical documentation: Specific technical fixes needed, code examples, configuration changes.

The bottom line: Professional technical SEO audits require 6-10 days for comprehensive sites, examining hundreds of factors across crawlability, indexability, performance, mobile optimization, security, and user experience. Use automated tools (Screaming Frog, Search Console) for data collection, but apply human analysis for prioritization and strategy. Audits should produce actionable roadmaps, not just lists of issues—prioritize by impact and effort, focus on critical and high-priority issues first, and plan systematic remediation over 3-6 months. Regular audits (quarterly or semi-annually) prevent technical debt accumulation and maintain technical SEO excellence.

What are the most important technical ranking factors?

While Google uses hundreds of ranking signals, certain technical factors have confirmed or strongly evidenced impact on rankings. Understanding which technical factors matter most enables strategic prioritization of optimization efforts for maximum ranking improvement.

Tier 1: Confirmed major technical ranking factors

Page speed and Core Web Vitals:

- Confirmed as ranking factors by Google

- Impact: 15-20% ranking advantage for fast pages (<2s load)

- Core Web Vitals (LCP, INP, CLS; INP replaced FID in March 2024) specifically confirmed as page experience signals

- Mobile speed weighted 2.3x more heavily than desktop

- Measurable ranking improvements from optimization

HTTPS/SSL security:

- Confirmed as ranking signal by Google (since 2014)

- Impact: 5% ranking improvement on average

- Effectively mandatory (100% of top 10 results use HTTPS)

- Affects user trust and conversion beyond rankings

Mobile-friendliness:

- Confirmed as ranking factor with mobile-first indexing

- Impact: 40-60% ranking decrease for pages failing mobile usability

- Mobile version determines rankings for all devices

- Critical for mobile search visibility

Intrusive interstitials:

- Confirmed ranking penalty for intrusive mobile popups

- Impact: 8-15% ranking decrease for violating pages

- Affects mobile rankings specifically

- Easy fix with major impact

Tier 2: Strongly evidenced technical ranking factors

Site architecture and crawl efficiency:

- Impact: Pages 1-2 clicks from homepage rank 75% better than 3+ clicks

- Better internal linking improves PageRank distribution

- Proper site structure enables better indexing

- Evidence: Correlation studies, Google statements on site structure importance

Structured data/Schema markup:

- Impact: 30% higher CTR from rich results, indirect ranking benefit

- Some evidence of direct ranking benefit for certain schema types

- Enables enhanced SERP features

- Evidence: Rich result correlation with rankings, CTR improvements

URL structure:

- Impact: Clean URLs rank 15% better than parameter-heavy URLs

- Shorter, descriptive URLs improve CTR

- Better crawlability and user experience

- Evidence: Correlation studies, user behavior data

Canonical tags (duplicate content management):

- Impact: 8-15% ranking improvement from proper canonicalization

- Consolidates ranking signals to preferred versions

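- Prevents duplicate versions from splitting signals or being filtered (Google rarely penalizes duplicate content that isn't manipulative)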

- Evidence: Case studies, Google statements on canonicalization

XML sitemaps:

- Impact: 3-5x faster indexing for new/updated content

- Better crawl efficiency for large sites

- Improved indexing coverage

- Evidence: Google Search Console data, crawl behavior studies

Tier 3: Important technical factors with indirect ranking impact

Robots.txt configuration:

- No direct ranking impact, but blocking important content prevents indexing

- Proper configuration enables efficient crawling

- Blocking resources (CSS, JS) prevents proper rendering

- Impact through enabling/preventing other factors

Redirect implementation:

- 301 redirects pass link equity (older estimates put it at ~90-95%, but Google has since stated that 30x redirects no longer lose PageRank)

- Redirect chains dilute authority and waste crawl budget

- Proper redirects prevent 404 errors

- Impact through link equity preservation and UX
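Redirect chains and loops can be found from a crawl's redirect map without re-requesting any URL. The Python sketch below assumes a hypothetical {source: Location target} mapping exported from a crawler. The usual fix for a chain is to point the first URL straight at the final destination.

```python
def trace_redirects(redirects, start, max_hops=10):
    """Follow redirects from `start`. Returns (path, is_loop); stops after max_hops."""
    path, seen, current = [start], {start}, start
    while current in redirects and len(path) <= max_hops:
        current = redirects[current]
        if current in seen:
            return path + [current], True
        path.append(current)
        seen.add(current)
    return path, False


def redirect_report(redirects):
    """Flag sources that loop or need more than one hop to reach a final URL."""
    report = {}
    for source in redirects:
        path, is_loop = trace_redirects(redirects, source)
        if is_loop:
            report[source] = ("loop", path)
        elif len(path) - 1 > 1:
            report[source] = ("chain", path)  # fix: redirect source straight to path[-1]
    return report


redirect_map = {
    "/a": "/b", "/b": "/c", "/c": "/d",  # chain A->B->C->D
    "/x": "/y", "/y": "/x",              # loop
    "/old": "/new",                      # single hop: fine
}
for source, (kind, path) in redirect_report(redirect_map).items():
    print(source, kind, " -> ".join(path))
```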

Broken links and 404 errors:

- Individual 404s don't cause site-wide penalties

- Widespread broken links signal low-quality site

- Wastes crawl budget and link equity

- Negative user experience impacts engagement metrics

Server response time / TTFB:

- Affects page speed and Core Web Vitals

- Slow servers (TTFB above 200ms) prevent competitive performance

- Indirect impact through speed factor

- Enables or prevents fast loading

JavaScript rendering:

- Improperly rendered JavaScript = content not indexed

- Rendering delays = slower indexing

- Pure client-side rendering = indexing challenges

- Impact through content accessibility

Prioritization framework for technical optimization:

Maximum ROI optimizations (do first):

HTTPS implementation (if not already HTTPS):

- Required baseline, 5% ranking improvement

- Relatively simple implementation

- One-time effort with ongoing value

Mobile optimization:

- 40-60% ranking impact for non-mobile-friendly

- Responsive design or mobile version

- Critical for mobile-first indexing

Page speed optimization:

- 15-20% ranking advantage for fast pages

- 35% conversion improvement

- Multiple high-impact tactics available

Core Web Vitals optimization:

- 12% ranking improvement when passing all metrics

- 20-40% conversion improvement

- Combines with speed optimization

High-value optimizations (second phase):

Remove intrusive interstitials:

- 8-15% ranking recovery

- Quick implementation

- Particularly important for mobile

Fix critical crawl/index issues:

- 20-35% traffic improvement typical

- Enables indexing of previously inaccessible content

- Can have dramatic impact

Implement schema markup:

- 30% CTR improvement from rich results

- 43% increase in rich result appearance

- Moderate implementation effort

Optimize site architecture:

- Reduces click depth for important pages

- Improves PageRank distribution

- Better crawl efficiency

Ongoing optimizations (continuous improvement):

Canonical tag management:

- Prevents duplicate content issues

- Consolidates ranking signals

- Ongoing monitoring needed

XML sitemap optimization:

- Faster indexing of new content

- Better crawl efficiency

- Regular updates required

Internal linking optimization:

- Improves authority distribution

- Better crawl coverage

- Continuous improvement opportunity

What NOT to prioritize:

Minor factors with minimal impact:

- Exact domain extension (.com vs .net vs .io) - minimal direct impact

- WWW vs non-WWW - no ranking difference if properly configured

- Breadcrumb presence - helps UX and schema, minimal direct ranking impact

- HTML validation - no confirmed ranking impact

- Meta keywords - completely ignored by Google

Technical SEO ranking factor hierarchy:

Foundation tier (must-have basics):

- HTTPS implementation

- Mobile-friendliness

- Basic crawlability (robots.txt, XML sitemaps)

- No critical technical errors

Competitive tier (needed to compete):

- Page speed optimization

- Core Web Vitals passing

- Proper site architecture

- Canonical implementation

- Schema markup

Excellence tier (competitive advantages):

- Advanced performance optimization

- Comprehensive structured data

- Perfect internal linking

- Optimal crawl budget management

- JavaScript rendering optimization

The bottom line: Focus technical SEO efforts on confirmed ranking factors first—page speed, Core Web Vitals, HTTPS, mobile-friendliness, and site architecture. These factors have measurable ranking impact and clear optimization paths. Tier 2 factors like structured data and URL structure provide secondary benefits. Don't obsess over minor factors like HTML validation or exact URL formats that have minimal impact. Prioritize based on impact and effort—quick wins like removing intrusive interstitials deliver immediate results, while comprehensive speed optimization requires more effort but provides larger returns. Technical SEO is about systematic excellence across multiple factors, not perfection in any single area.

How long does it take to see results from technical SEO improvements?

Technical SEO improvements show results at different timescales depending on the issue type, severity, and implementation complexity. Understanding realistic timelines prevents premature strategy abandonment while enabling proper expectation-setting with stakeholders.

Immediate to 1 week: Configuration and accessibility changes

HTTPS migration (if properly implemented):

- Initial fluctuations: 1-2 weeks

- Ranking stabilization: 3-4 weeks

- Full benefit recognition: 6-8 weeks

- Expected improvement: 5% average ranking lift

Removing intrusive interstitials:

- Recognition by Google: 1-2 weeks (next crawl)

- Ranking improvement: 2-4 weeks

- Expected improvement: 8-15% recovery for penalized pages

Fixing robots.txt blocks (if blocking important content):

- Googlebot discovery: 1-3 days

- Content indexing: 1-2 weeks

- Ranking impact: 2-4 weeks

- Expected improvement: 20-35% traffic increase for previously blocked content
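Before you wait for recrawls, confirm which important URLs a robots.txt file actually blocks. The sketch below does this with Python's standard-library `urllib.robotparser`. The robots.txt text and URL list are hypothetical. This parser applies rules in file order and does not implement Google's longest-match precedence or `*`/`$` wildcards. Treat its answer as a first pass, and confirm individual URLs with Search Console's URL Inspection tool.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that accidentally blocks the whole blog
ROBOTS_TXT = """\
User-agent: *
Disallow: /blog/
Disallow: /cart/
"""

# Hypothetical URLs that are expected to rank
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/blog/technical-seo-guide",
    "https://example.com/products/widget",
]


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt disallows for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly instead of fetching it
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, IMPORTANT_URLS):
        print("Blocked:", url)
```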

XML sitemap submission:

- Discovery acceleration: Immediate

- Indexing improvement: 1-7 days for new URLs

- Expected improvement: 3-5x faster indexing

2-4 weeks: Crawling and indexing improvements

Canonical tag fixes:

- Google recognition: 1-2 weeks (next crawl)

- Link equity consolidation: 2-4 weeks

- Ranking stabilization: 4-8 weeks

- Expected improvement: 8-15% for affected pages
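After a canonical fix is deployed, you can check it quickly by parsing each page's HTML and comparing the declared canonical with the page's own URL. The sketch below is a minimal version using Python's built-in `html.parser`. `check_canonical` and its status strings are hypothetical. It reads only `<link rel="canonical">` in the served HTML. It does not see canonicals sent in HTTP `Link` headers or injected by JavaScript. Google also treats the tag as a strong hint rather than a directive, so the canonical Google selects can still differ.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Collects href values of <link rel="canonical"> elements."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Also called for self-closing <link ... /> via handle_startendtag
        if tag != "link":
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])


def check_canonical(page_url: str, html: str) -> str:
    """Classify a page's declared canonical as missing, conflicting, self, or another URL."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonicals:
        return "missing"
    if len(set(finder.canonicals)) > 1:
        return "conflicting"
    target = urljoin(page_url, finder.canonicals[0])  # resolve relative hrefs
    return "self" if target == page_url else f"points to {target}"
```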

Fixing site architecture (click depth, internal linking):

- Crawl recognition: 1-2 weeks

- PageRank redistribution: 2-4 weeks

- Ranking changes: 4-8 weeks

- Expected improvement: Variable, 10-30% for previously deep pages
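Click depth is the minimum number of clicks needed to reach a page from the homepage. A breadth-first search over the internal-link graph computes it directly. The sketch below assumes you already have a page-to-links map, for example from a crawler export. The `SITE` graph and the flag for depths greater than 2 are hypothetical illustrations, not a Google threshold.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Minimum number of clicks from `home` to every reachable page (breadth-first search)."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each page first by a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: page -> pages it links to
SITE = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/old-post"],
    "/products": ["/products/widget"],
}

if __name__ == "__main__":
    for page, depth in click_depths(SITE).items():
        flag = "  <- consider linking from a shallower page" if depth > 2 else ""
        print(f"{depth}  {page}{flag}")
```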

Removing orphaned pages (adding internal links):

- Discovery: 1-2 weeks

- Indexing: 2-4 weeks

- Initial rankings: 4-6 weeks

- Expected improvement: Previously undiscoverable pages now ranking
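You can find orphaned pages by comparing the pages you know exist (from a sitemap or CMS export) against the pages that receive at least one internal link. The sketch below works under that assumption. `find_orphans` and the sample paths are hypothetical.

```python
def find_orphans(known_pages: set[str], links: dict[str, list[str]], home: str = "/") -> set[str]:
    """Pages that exist (e.g. in the sitemap or CMS) but receive no internal link."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a self-link does not help discovery
    }
    # The homepage is the crawl entry point, so it is never reported as orphaned
    return known_pages - linked_to - {home}


if __name__ == "__main__":
    # Hypothetical sitemap paths and link graph
    known = {"/", "/blog", "/blog/launch-recap", "/landing/spring-sale"}
    links = {"/": ["/blog"], "/blog": ["/blog", "/"], "/landing/spring-sale": ["/landing/spring-sale"]}
    print(sorted(find_orphans(known, links)))
```

This check counts any inbound link, so it will not flag a page whose only link comes from another orphan. A reachability check from the homepage catches those chains.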

4-8 weeks: Performance and Core Web Vitals

Page speed optimization:

- Google measurement update: 28 days (Chrome User Experience Report)

- Search Console reflection: 4-6 weeks

- Ranking impact: 6-12 weeks

- Expected improvement: 15-20% for significantly faster pages

Core Web Vitals improvements:

- CrUX data collection: 28 days (rolling window)

- Search Console update: 4-6 weeks

- Ranking integration: 8-12 weeks

- Expected improvement: 12% for passing all metrics
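Core Web Vitals are assessed from field data over the 28-day CrUX window. A page passes only when its 75th-percentile value for each metric falls in the "good" range. Those thresholds are LCP at or under 2.5 s, INP at or under 200 ms, and CLS at or under 0.1. The sketch below applies those published thresholds to raw samples. The nearest-rank percentile and the idea of holding individual samples are simplifying assumptions, because CrUX's own aggregation differs in detail. The function names are hypothetical.

```python
import math

# Google's published Core Web Vitals boundaries: (good up to, poor above)
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless
}


def p75(samples: list[float]) -> float:
    """Nearest-rank 75th percentile (a simplification of CrUX's aggregation)."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric: str, value: float) -> str:
    """Map a p75 value to Google's good / needs improvement / poor bands."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    return "needs improvement" if value <= poor else "poor"


def assess(window: dict[str, list[float]]) -> tuple[dict[str, str], bool]:
    """Rate each metric at p75; the page passes only if all three are 'good'."""
    ratings = {metric: rate(metric, p75(samples)) for metric, samples in window.items()}
    passed = set(ratings) == set(THRESHOLDS) and all(r == "good" for r in ratings.values())
    return ratings, passed
```

Because the window rolls, a fix deployed today affects only part of the data until a full 28 days of post-fix visits have replaced the old samples.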

Mobile optimization:

- Mobile usability check (e.g., a Lighthouse audit) passes: Immediate upon implementation

- Mobile-first indexing update: 1-2 weeks (next crawl)

- Ranking impact: 4-8 weeks

- Expected improvement: 40-60% recovery for previously non-mobile-friendly pages

8-12 weeks: Complex technical changes

Site migration or redesign:

- Initial indexing of new structure: 2-4 weeks

- Ranking stabilization: 8-16 weeks

- Full transition: 12-24 weeks

- Expected outcome: Varies; properly executed prevents losses and enables improvements

JavaScript rendering optimization (SSR implementation):

- Content accessibility: Immediate (server-rendered)

- Google recognition: 1-2 weeks

- Indexing improvement: 4-8 weeks

- Ranking impact: 8-12 weeks

- Expected improvement: 40-60% for previously client-rendered content

International SEO (hreflang implementation):

- Google recognition: 2-4 weeks

- Proper targeting: 4-8 weeks

- Traffic to correct versions: 8-16 weeks

- Expected improvement: 20-40% international traffic increase
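Hreflang annotations only work when they are reciprocal. Each language version lists itself and every alternate, optionally with an `x-default` fallback. The sketch below builds one shared tag set for all versions. The locale codes, URLs, and `hreflang_tags` are hypothetical. The same annotations can also be delivered in XML sitemaps or HTTP headers instead of HTML.

```python
from html import escape
from typing import Optional


def hreflang_tags(alternates: dict[str, str], default_url: Optional[str] = None) -> list[str]:
    """Build the full set of alternate links; every language version carries the same set."""
    tags = [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())  # includes each page's self-reference
    ]
    if default_url:
        # x-default names the fallback page for users who match no listed locale
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(default_url)}" />')
    return tags


if __name__ == "__main__":
    # Hypothetical locale -> URL map
    versions = {"en-us": "https://example.com/", "de-de": "https://example.com/de/"}
    print("\n".join(hreflang_tags(versions, default_url="https://example.com/")))
```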

Structured data implementation:

- Rich Results Test validation: Immediate

- Rich result eligibility: 1-4 weeks (next crawl)

- Rich result appearance: 2-8 weeks (variable by SERP)

- Expected improvement: 30% CTR increase when rich results appear
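Google recommends JSON-LD for structured data, and the markup can be generated from fields the CMS already stores. The sketch below produces a schema.org `Article`. The function name and field values are hypothetical. Rich result eligibility also depends on Google's required and recommended properties for each type, so validate the output with the Rich Results Test.

```python
import json


def article_jsonld(headline: str, author: str, published: str, url: str) -> str:
    """Return a JSON-LD <script> block describing a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,  # ISO 8601, e.g. "2024-05-20"
        "mainEntityOfPage": url,
    }
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # "<\/" is a valid JSON escape and keeps a headline containing "</script>" from closing the tag
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    print(article_jsonld("Technical SEO timelines", "Jane Doe", "2024-05-20",
                         "https://example.com/blog/timelines"))
```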

Factors affecting timeline variations:

Site size:

- Small sites (<1,000 pages): Faster recognition (weeks)

- Medium sites (1,000-100,000 pages): Moderate timeline (weeks to months)

- Large sites (100,000+ pages): Slower, phased rollout (months)

Crawl frequency:

- Frequently crawled sites (daily): Faster recognition

- Infrequently crawled sites (weekly/monthly): Slower recognition

- Can be accelerated by requesting indexing through the URL Inspection tool in Search Console

Change magnitude:

- Minor fixes (few pages): Quick impact

- Site-wide changes (all pages): Longer integration time

- Structural changes (architecture): Extended stabilization

Competitive landscape:

- Less competitive niches: Faster ranking changes

- Highly competitive niches: Slower, more gradual changes

- Technical optimization advantage compounds over time

Implementation quality:

- Proper implementation: Expected timelines

- Partial or flawed implementation: Delayed or minimal impact

- Testing and validation accelerate success

Realistic expectation timeline by implementation type:

Quick wins (results in 2-4 weeks):

- Remove robots.txt blocks

- Fix critical crawl errors

- Remove intrusive interstitials

- Submit XML sitemaps

- Fix obvious mobile usability issues

Standard optimizations (results in 4-8 weeks):

- Page speed improvements

- Canonical tag fixes

- Internal linking optimization

- Basic schema markup

- Mobile optimization completion

Complex improvements (results in 8-16 weeks):

- Core Web Vitals optimization

- Site architecture overhaul

- JavaScript rendering optimization

- Comprehensive schema implementation

- International SEO setup

Long-term projects (results in 12-24+ weeks):

- Complete site migrations

- Platform changes

- Multi-language site launches

- Enterprise technical SEO programs

Managing expectations:

Set realistic timelines: Communicate that technical SEO typically requires 4-12 weeks for measurable results, not days.

Explain phased recognition: Google discovers changes gradually through crawling, processes them through indexing, and integrates them into rankings over time.

Track early indicators: Monitor Search Console for crawl increases, indexing improvements, and technical issue resolution even before ranking changes appear.

Measure comprehensively: Track rankings, organic traffic, indexing coverage, crawl efficiency, and Core Web Vitals, not rankings alone.

Plan continuous improvement: Technical SEO isn't a one-time project but an ongoing optimization process that creates compound advantages over time.

The bottom line: Technical SEO improvements show results on timelines ranging from 1 week (configuration changes like robots.txt fixes) to 12+ weeks (complex changes like Core Web Vitals optimization). Most standard technical optimizations deliver measurable results within 4-8 weeks. Set expectations for 2-3 month timelines for comprehensive technical improvement programs, with early wins in weeks 2-4 and major impact by months 2-3. Technical SEO creates compound advantages: early improvements enable later optimizations to work better, creating accelerating returns over 6-12 months. Be patient through initial implementation phases, track leading indicators (crawl stats, index coverage) before lagging indicators (rankings, traffic), and commit to sustained technical excellence rather than expecting overnight transformations.

Should I hire a technical SEO specialist or can I do it myself?

Whether to hire a technical SEO specialist or handle optimization in-house depends on site complexity, team capabilities, available time, and budget. Understanding what technical SEO requires enables informed decisions about resource allocation.

When you can likely handle technical SEO yourself:

Small, simple sites (under 1,000 pages):

- WordPress or similar CMS with SEO plugins

- Standard site structure and templates

- No complex JavaScript or custom development

- Local or small business sites

- Basic e-commerce (under 100 products)

You have technical capabilities:

- Comfortable with HTML and CSS basics

- Can learn technical concepts through documentation

- Willing to invest 5-10 hours weekly

- Have developer support for implementation if needed

Limited budget:

- Startup or small business with <$2,000/month SEO budget

- Can invest time instead of money

- Willing to learn and implement gradually

What you can handle yourself with learning:

Basic optimizations:

- HTTPS implementation (with host support)

- Mobile-friendly responsive design (themes/templates)

- Page speed basics (image optimization, caching plugins)

- XML sitemap creation and submission

- Robots.txt configuration

- Canonical tag implementation (CMS plugins)

- Basic schema markup (plugins or generators)

- Search Console setup and monitoring

Resources for self-education:

- Google Search Central documentation

- Moz Beginner's Guide to SEO

- Technical SEO courses (Coursera, Udemy)

- SEO blogs and communities

- Tool documentation (Screaming Frog, etc.)

When to hire a technical SEO specialist:

Complex sites (10,000+ pages):

- Enterprise e-commerce

- Large content sites or publishers

- Multi-language/international sites

- Complex site architectures

- Heavy JavaScript applications

Serious technical issues:

- Site has been penalized

- Major indexing problems (a large percentage of pages not indexed)

- Traffic dropped significantly without clear cause

- Site migration or redesign needed

- Platform change required

Time constraints:

- Don't have 5-10+ hours weekly for SEO

- Need faster results than self-learning enables

- Opportunity cost of your time exceeds specialist cost

Budget supports hiring (>$2,000/month for SEO):

- Can afford $1,500-$5,000/month for specialist

- Or $5,000-$15,000 for a comprehensive audit

- ROI justifies investment

Complex technical needs:

- JavaScript rendering optimization

- Large-scale crawl optimization

- Complex international SEO

- Enterprise migrations

- Custom development requirements

What technical SEO specialists provide:

Expertise and experience:

- Deep technical knowledge from working on many sites

- Awareness of current best practices and algorithm changes

- Ability to diagnose complex issues quickly

- Experience with edge cases and unique challenges

Comprehensive audits:

- Professional audits examining 200+ factors

- Prioritized action plans based on impact

- Detailed documentation of issues and solutions

- Competitive analysis and benchmarking

Implementation support:

- Direct implementation for some tasks

- Collaboration with developers for complex changes

- Quality assurance and validation

- Ongoing monitoring and optimization

Tools and resources: