Intro

In the era of generative search, your content is no longer competing for rankings — it’s competing for ingestion.

Large Language Models (LLMs) don’t index pages the way search engines do. They ingest, segment, embed, and interpret your information as structured meaning. Once ingested, your content becomes part of the model’s:

- reasoning
- summaries
- recommendations
- comparisons
- category definitions
- contextual explanations

If your content is not structured for LLM-friendly ingestion, it becomes:

- harder to parse
- harder to segment
- harder to embed
- harder to reuse
- harder to understand
- harder to cite
- harder to include in summaries

This article explains exactly how to structure your content and data so LLMs can ingest it cleanly — unlocking maximum generative visibility.

Part 1: What LLM-Friendly Ingestion Actually Means

Traditional search engines crawl and index pages. LLMs chunk, embed, and interpret them.

LLM ingestion requires your content to be:

- readable
- extractable
- semantically clean
- structurally predictable
- consistent in definitions
- segmentable into discrete ideas

If your content is unstructured, messy, or meaning-dense without clear boundaries, the model cannot reliably convert it into clean embeddings (the vector representations of meaning that power generative reasoning).

LLM-friendly ingestion = content formatted for embeddings.

Part 2: How LLMs Ingest Content (Technical Overview)

Before structuring content, you need to understand the ingestion process.

LLM-based systems typically follow this pipeline:

1. Content Retrieval

The model fetches your text from one or more of these sources:

- directly from the page
- through crawling
- via structured data
- from cached sources
- from citations
- from snapshot datasets

2. Chunking

Text is broken into small, self-contained segments, commonly in the range of 200–500 tokens, though the exact size varies by system.

Chunk quality determines:

- clarity
- coherence
- semantic purity
- reuse potential

Poor chunking → poor understanding.

3. Embedding

Each chunk is converted into a vector: a list of numbers that acts as a mathematical signature of its meaning, so chunks with similar meaning end up close together.

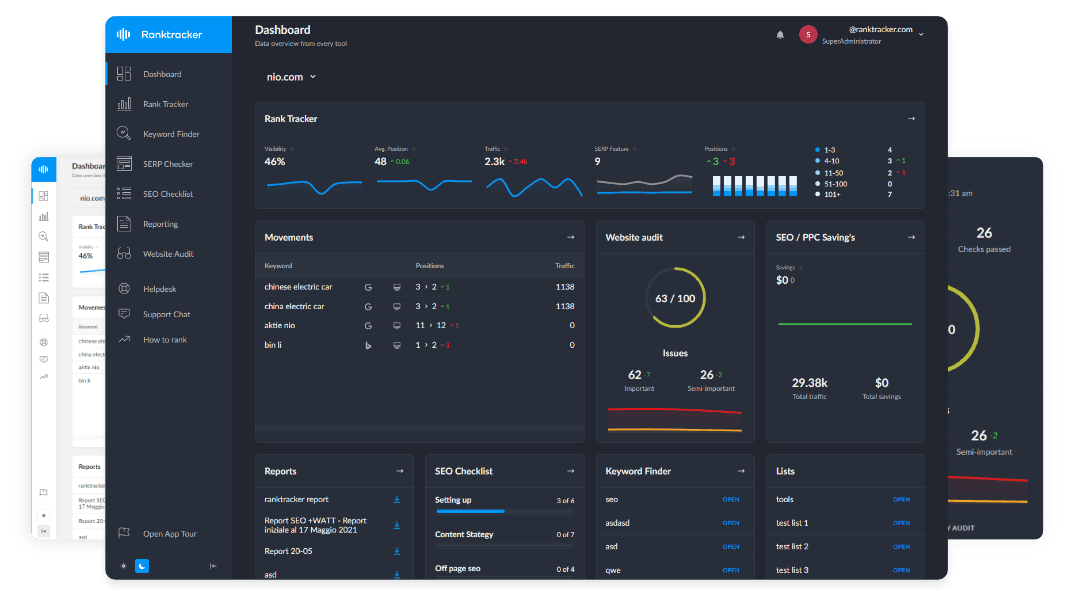

The All-in-One Platform for Effective SEO

Behind every successful business is a strong SEO campaign. But with countless optimization tools and techniques out there to choose from, it can be hard to know where to start. Well, fear no more, cause I've got just the thing to help. Presenting the Ranktracker all-in-one platform for effective SEO

We have finally opened registration to Ranktracker absolutely free!

Create a free accountOr Sign in using your credentials

Embedding integrity depends on:

- clarity of topic
- one-idea-per-chunk
- clean formatting
- consistent terminology
- stable definitions
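Real embedding models produce dense vectors learned from data, but the effect of mixing ideas in one chunk can be illustrated with a toy stand-in. The sketch below uses a bag-of-words count vector (an assumption, not how production embeddings work) and cosine similarity to compare a focused chunk and a mixed chunk against the same query.

```python
import math
from collections import Counter

def toy_embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(word.strip(".,!?").lower() for word in text.split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

query = "How does rank tracking monitor keyword positions?"
focused = "Rank tracking monitors keyword positions."
mixed = "Rank tracking monitors keyword positions. Our pricing and our team history are great."

print(round(cosine(toy_embed(query), toy_embed(focused)), 3))
print(round(cosine(toy_embed(query), toy_embed(mixed)), 3))
```

Both chunks share the same four query words, but the unrelated words in the mixed chunk dilute its vector, so the focused chunk scores noticeably higher (about 0.68 versus 0.39 here).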

4. Semantic Alignment

The model maps your content into:

- clusters
- categories
- entities
- related concepts
- competitor sets
- feature groups

If your data is weakly structured, AI systems are more likely to misclassify your meaning.
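Semantic alignment can be pictured as placing each chunk's vector near the category it most resembles. The sketch below assigns a chunk to the category with the most similar centroid; the three-dimensional vectors and category names are hypothetical placeholders, since real embeddings have hundreds of dimensions and real systems do not expose their category centroids.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def category_scores(vec: list[float], centroids: dict[str, list[float]]) -> dict[str, float]:
    """Similarity of one chunk vector to every category centroid."""
    return {name: cosine(vec, c) for name, c in centroids.items()}

def assign_category(vec: list[float], centroids: dict[str, list[float]]) -> str:
    """Nearest-centroid assignment: the most similar category wins."""
    scores = category_scores(vec, centroids)
    return max(scores, key=scores.get)

# Hypothetical 3-dimensional "embeddings" for three categories.
centroids = {
    "rank tracking": [0.9, 0.1, 0.0],
    "backlink analysis": [0.1, 0.9, 0.1],
    "pricing": [0.0, 0.1, 0.9],
}
focused_chunk = [0.8, 0.2, 0.0]  # mostly about one topic
mixed_chunk = [0.5, 0.0, 0.5]    # half rank tracking, half pricing

print(assign_category(focused_chunk, centroids))  # rank tracking
print(category_scores(mixed_chunk, centroids))
```

The focused chunk lands clearly in one category, while the mixed chunk scores equally against "rank tracking" and "pricing". That tie is the kind of ambiguity that leads to misclassification.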

5. Usage in Summaries

Once ingested, your content becomes eligible for:

- generative answers
- list recommendations
- comparisons
- definitions
- examples
- reasoning steps

Only structured, high-integrity content makes it this far.

Part 3: The Core Principles of LLM-Friendly Structure

Your content must follow five foundational principles.

Principle 1: One Idea Per Chunk

LLMs extract meaning at the chunk level. Mixing multiple concepts:

- confuses embeddings
- weakens semantic classification
- reduces reuse
- lowers generative trust

Each paragraph must express exactly one idea.

Principle 2: Stable, Canonical Definitions

Definitions must be:

- at the top of the page
- short
- factual
- unambiguous
- consistent across pages

AI needs reliable anchor points.
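One way to audit this principle is to compare how each page opens. The sketch below treats the first sentence of each page as its canonical definition (an assumption; your pages may place the definition elsewhere) and flags pages whose wording differs from the most common version. The page paths and text are hypothetical.

```python
import re
from collections import Counter

def first_sentence(text: str) -> str:
    """Return the first sentence, assumed to hold the canonical definition."""
    match = re.match(r"\s*(.+?[.!?])(?:\s|$)", text, re.S)
    return match.group(1).strip() if match else text.strip()

def inconsistent_definitions(pages: dict[str, str]) -> dict[str, str]:
    """Map each page whose definition differs from the most common one to its definition."""
    defs = {path: first_sentence(body) for path, body in pages.items()}
    if not defs:
        return {}
    canonical, _ = Counter(defs.values()).most_common(1)[0]
    return {path: d for path, d in defs.items() if d != canonical}

# Hypothetical pages.
pages = {
    "/rank-tracking": "Rank tracking is the daily measurement of keyword positions. Details follow.",
    "/features": "Rank tracking is the daily measurement of keyword positions. Details follow.",
    "/pricing": "Rank tracking is an SEO tool for agencies. Details follow.",
}
print(inconsistent_definitions(pages))
```

Exact string matching is deliberately strict: any rewording is flagged, which fits the goal of one stable definition across pages.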

Principle 3: Predictable Structural Patterns

LLMs prefer content organized into:

- bullets
- steps
- lists
- FAQs
- summaries
- definitions
- subheaders

This makes chunk boundaries obvious.

Principle 4: Consistent Terminology

Terminology drift breaks ingestion:

For example, calling the same product a “rank tracking tool” on one page, an “SEO tool” on another, and “SEO software” or a “visibility analytics platform” elsewhere.

Pick one canonical phrase and use it everywhere.
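A quick way to spot terminology drift is to count how often each variant appears alongside the canonical phrase. This sketch does case-insensitive, whole-phrase matching with Python's `re` module; the canonical phrase and variant list are inputs you choose, shown here with the example phrases above.

```python
import re

def count_phrase(text: str, phrase: str) -> int:
    """Case-insensitive count of whole-phrase matches."""
    pattern = r"\b" + re.escape(phrase) + r"\b"
    return len(re.findall(pattern, text, re.IGNORECASE))

def terminology_drift(text: str, canonical: str, variants: list[str]) -> dict[str, int]:
    """Return every non-canonical variant that appears in the text, with its count."""
    counts = {v: count_phrase(text, v) for v in variants if v.lower() != canonical.lower()}
    return {v: n for v, n in counts.items() if n > 0}

page = (
    "Our rank tracking tool updates daily. "
    "Unlike other SEO software, this SEO tool is a full visibility analytics platform."
)
print(terminology_drift(page, "rank tracking tool",
                        ["SEO tool", "SEO software", "visibility analytics platform"]))
```

An empty result means the page sticks to the canonical phrase.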

Principle 5: Minimal Noise, Maximum Clarity

Avoid:

- filler text
- marketing tone
- long intros
- anecdotal fluff
- metaphors
- ambiguous language

LLMs ingest clarity, not creativity.

Part 4: The Optimal Page Structure for LLMs

Below is the recommended blueprint for every page built for Generative Engine Optimization (GEO).

H1: Clear, Literal Topic Label

The title must clearly identify the topic. No poetic phrasing. No branding. No metaphor.

LLMs rely on the H1 for top-level classification.

Section 1: Canonical Definition (2–3 sentences)

This appears at the very top of the page.

It establishes:

- meaning
- scope
- semantic boundaries

The model treats it as the “official answer.”

Section 2: Short-Form Extractable Summary

Provide:

- bullets
- short sentences
- crisp definitions

This becomes the primary extraction block for generative summaries.

Section 3: Context & Explanation

Organize with:

- short paragraphs
- H2/H3 headings
- one idea per section

Context helps LLMs model the topic.

Section 4: Examples and Classifications

LLMs rely heavily on:

- categories
- subtypes
- examples

This gives them reusable structures.

Section 5: Step-by-Step Processes

Models extract steps to build:

- instructions
- how-tos
- troubleshooting guidance

Clear steps improve visibility for task-oriented (how-to) queries.

Section 6: FAQ Block (Highly Extractable)

Frequently asked questions produce excellent embeddings because:

- each question is a self-contained topic
- each answer is a discrete chunk
- structure is predictable
- intent is clear

FAQs often become the source of generative answers.
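Because each question and answer forms a natural chunk, an FAQ block can be split mechanically. The sketch below assumes a simple plain-text layout (each question on its own line ending in `?`, followed by its answer) and returns one record per entry; adapt the parsing to however your FAQ is actually marked up.

```python
def faq_chunks(text: str) -> list[dict[str, str]]:
    """Split a plain-text FAQ into one {question, answer} record per entry.

    Assumes each question is a line ending in '?' and its answer is every
    following non-empty line up to the next question.
    """
    entries: list[dict[str, str]] = []
    question, answer_lines = None, []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.endswith("?"):
            # A new question closes the previous entry.
            if question is not None:
                entries.append({"question": question, "answer": " ".join(answer_lines)})
            question, answer_lines = line, []
        elif question is not None:
            answer_lines.append(line)
    if question is not None:
        entries.append({"question": question, "answer": " ".join(answer_lines)})
    return entries

faq = """What is rank tracking?
It measures keyword positions over time.

How often should rankings be checked?
It depends on how volatile your keywords are.
"""
for entry in faq_chunks(faq):
    print(entry)
```

An answer line that itself ends in `?` would be misread as a new question, so an explicit marker such as `Q:`/`A:` prefixes is safer in practice.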

Section 7: Recency Signals

Include:

- dates
- updated stats
- year-specific references
- versioning info

Generative systems tend to favor fresh, clearly dated information.
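Dates can also be exposed in machine-readable form. This sketch builds schema.org `Article` markup with `datePublished` and `dateModified` using Python's `json` and `datetime` modules; the headline and dates are placeholders.

```python
import json
from datetime import date

def article_jsonld(headline: str, published: date, modified: date) -> str:
    """Build schema.org Article JSON-LD carrying explicit recency signals."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    return json.dumps(data, indent=2)

# Placeholder values for illustration.
markup = article_jsonld("How to Structure Content for LLM Ingestion",
                        date(2024, 1, 15), date(2024, 6, 1))
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```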

Part 5: Formatting Techniques That Improve LLM Ingestion

Here are the most effective structural methods:

1. Use Short Sentences

Aim for roughly 15–25 words per sentence. Shorter sentences let LLMs parse meaning more cleanly.
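A simple script can flag sentences that exceed the suggested length. This sketch splits on `.`, `!`, or `?` followed by whitespace, which is a rough heuristic (abbreviations such as "e.g." will cause false splits), and reports any sentence longer than `max_words` words.

```python
import re

def long_sentences(text: str, max_words: int = 25) -> list[tuple[int, str]]:
    """Return (word_count, sentence) for each sentence longer than max_words."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    return [(len(s.split()), s) for s in sentences if len(s.split()) > max_words]

sample = (
    "Rank tracking measures keyword positions. "
    "It also records how those positions change across devices, locations, "
    "search engines, and dates, and then combines those records into reports "
    "that teams can review, share, and compare with competitors over time."
)
for count, sentence in long_sentences(sample):
    print(count, sentence)
```

Only the second sentence is reported; splitting it into two or three shorter sentences would bring it back within range.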

2. Separate Concepts With Line Breaks

This makes chunk boundaries much easier to detect.

3. Avoid Nested Structures

Deeply nested lists confuse parsing.
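To catch deep nesting before publishing, you can estimate list depth from indentation. This sketch assumes Markdown-style list markers (`-`, `*`, `+`, or `1.`) and two spaces of indentation per level; both are assumptions to adjust for your own source format.

```python
import re

# A list item: optional indentation, a bullet or number marker, then whitespace.
LIST_ITEM = re.compile(r"^([ \t]*)(?:[-*+]|\d+[.)])\s+")

def max_list_depth(markdown: str, indent_width: int = 2) -> int:
    """Deepest list nesting level: 0 = no list, 1 = flat list, 2+ = nested."""
    depth = 0
    for line in markdown.splitlines():
        match = LIST_ITEM.match(line)
        if match:
            indent = len(match.group(1).expandtabs(4))
            depth = max(depth, indent // indent_width + 1)
    return depth

flat = "- readable\n- extractable\n- semantically clean"
nested = "- Principles\n  - Structure\n    - Headings\n- Summary"
print(max_list_depth(flat), max_list_depth(nested))
```

A page whose depth exceeds 1 or 2 is a candidate for flattening into separate lists under their own headings.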

4. Use H2/H3 for Semantic Boundaries

Many chunking pipelines split content at headings, so LLMs treat headings as boundaries between ideas.
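Heading-based splitting is easy to reproduce. The sketch below starts a new section at every `##` (H2) or `###` (H3) line in a Markdown document and keeps any text before the first such heading (including the H1) as an untitled leading section. It is a simplified stand-in for the header-aware splitters some retrieval pipelines use, not any specific library's behavior.

```python
import re

# Matches "## Title" (H2) or "### Title" (H3), but not H1 or H4+.
HEADING = re.compile(r"^(#{2,3})\s+(.*)$")

def split_by_headings(markdown: str) -> list[tuple[str, str]]:
    """Split Markdown into (heading, text) sections at every H2/H3 line."""
    sections: list[tuple[str, str]] = []
    heading, lines = "", []
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            text = "\n".join(lines).strip()
            if heading or text:  # skip an empty leading section
                sections.append((heading, text))
            heading, lines = match.group(2).strip(), []
        else:
            lines.append(line)
    text = "\n".join(lines).strip()
    if heading or text:
        sections.append((heading, text))
    return sections

page = (
    "# Rank Tracking\n"
    "Rank tracking measures keyword positions.\n"
    "## Definition\n"
    "A short, factual definition.\n"
    "### Example\n"
    "A daily position report.\n"
)
for heading, text in split_by_headings(page):
    print(repr(heading), "->", text.replace("\n", " "))
```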

5. Avoid HTML Noise

Remove:

- complex tables
- unusual markup
- hidden text
- JavaScript-injected content

AI crawlers parse stable, server-rendered HTML most reliably.
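To see roughly what a crawler that does not execute JavaScript receives, you can extract the text from the raw HTML. This sketch uses Python's built-in `html.parser` and skips `<script>` and `<style>` contents; it does not evaluate CSS, so text hidden with styles would still appear. The sample markup is hypothetical.

```python
from html.parser import HTMLParser

class VisibleTextParser(HTMLParser):
    """Collect text nodes from raw HTML, ignoring <script> and <style> contents."""

    SKIP_TAGS = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth and data.strip():
            self.parts.append(data.strip())

def visible_text(html: str) -> str:
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)

html = """<html><body>
<h1>Rank Tracking</h1>
<p>Rank tracking measures keyword positions.</p>
<div id="pricing"></div>
<script>document.getElementById("pricing").textContent = "Pricing details";</script>
</body></html>"""
print(visible_text(html))
```

Anything the page only shows after a script runs, like the pricing text here, is absent from the extracted text.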

6. Include Definitions in Multiple Locations

Restating the same canonical definition in several places reinforces it and increases the chance it is reused in generative answers.

7. Add Structured Data (Schema)

Use:

- Article
- FAQPage
- HowTo
- Product
- Organization

Schema increases ingestion confidence.
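For the FAQPage type listed above, schema.org markup nests each question under `mainEntity` as a `Question` with an `acceptedAnswer` of type `Answer`. This sketch generates that JSON-LD from question/answer pairs with Python's `json` module; the sample question and answer are placeholders.

```python
import json

def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)

markup = faq_jsonld([
    ("What is rank tracking?", "Rank tracking measures keyword positions over time."),
])
print(markup)
```

The output goes inside a `<script type="application/ld+json">` element, and each pair should match a question and answer that are visible on the page.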

Part 6: The Common Mistakes That Break LLM Ingestion

Avoid these at all costs:

- long, dense paragraphs
- multiple ideas in one block
- undefined terminology
- inconsistent category messaging
- marketing fluff
- over-designed layouts
- JS-heavy content
- ambiguous headings
- irrelevant anecdotes
- contradictory phrasing
- no canonical definition
- outdated descriptions

Bad ingestion = no generative visibility.

Part 7: The LLM-Optimized Content Blueprint (Copy/Paste)

Here is the final blueprint you can use for any page:

1. Clear H1

Topic is stated literally.

2. Canonical Definition

Two or three sentences; fact-first.

3. Extractable Summary Block

Bullets or short sentences.

4. Context Section

Short paragraphs, one idea each.

5. Classification Section

Types, categories, variations.

6. Examples Section

Specific, concise examples.

7. Steps Section

Instructional sequences.

8. FAQ Section

Short Q&A entries.

9. Recency Indicators

Updated facts and time signals.

10. Schema

Correctly aligned to page intent.

This structure ensures maximum reuse, clarity, and generative presence.
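A page can be checked against this blueprint automatically. The sketch below assumes the page is written in Markdown and that each blueprint section is an H2 with a fixed name; the names in `REQUIRED_SECTIONS` are hypothetical labels you would replace with your own. It checks heading structure only, reporting a missing or duplicated H1 and any missing sections.

```python
import re

# Hypothetical H2 names for the blueprint sections; rename to match your pages.
REQUIRED_SECTIONS = ("Definition", "Summary", "Context", "Types", "Examples", "Steps", "FAQ")

def blueprint_gaps(markdown: str, required: tuple[str, ...] = REQUIRED_SECTIONS) -> list[str]:
    """List structural problems relative to the blueprint."""
    problems = []
    # "# Title" lines only; "## ..." does not match because of the required space.
    h1s = re.findall(r"^# \S.*$", markdown, re.MULTILINE)
    if len(h1s) != 1:
        problems.append(f"expected exactly one H1, found {len(h1s)}")
    h2s = {h.strip().lower() for h in re.findall(r"^## (.+)$", markdown, re.MULTILINE)}
    problems += [f"missing section: {name}" for name in required if name.lower() not in h2s]
    return problems

page = "# Rank Tracking\n## Definition\ntext\n## Summary\ntext\n## Context\ntext\n## FAQ\ntext"
for problem in blueprint_gaps(page):
    print(problem)
```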

Conclusion: Structured Data Is the New Fuel for Generative Visibility

Search engines once rewarded volume and backlinks. Generative engines reward structure and clarity.

If you want maximum generative visibility, your content must be:

- chunkable
- extractable
- canonical
- consistent
- semantically clean
- structurally predictable
- format-stable
- definition-driven
- evidence-rich

LLMs cannot reuse content they cannot ingest, and they cannot reliably ingest content that is unstructured.

Structure your data correctly, and AI will:

- understand you
- classify you
- trust you
- reuse you
- cite you
- include you

In the GEO era, structured content is not a formatting preference — it is a visibility requirement.