Intro

Large Language Models (LLMs) are now shaping how the world understands brands — not through search results, but through generated answers.

This means:

✔ ChatGPT can invent facts about your business

✔ Gemini can misstate your features

✔ Copilot can confuse you with a competitor

✔ Perplexity can cite outdated information

✔ Claude can merge unrelated entities

✔ Apple Intelligence can personalize inaccurate summaries

This is the new reputational threat:

Hallucinated content.

LLMs hallucinate because they:

- fill in missing data

- guess connections

- rely on outdated sources

- conflate similar entities

- borrow context from competitors

- overgeneralize when uncertain

- blend ambiguous brand identities

If your brand isn’t represented clearly, consistently, and accurately across AI systems, LLMs will generate answers that misinform users and damage trust.

This guide explains how hallucinations happen, how they can harm your brand, and the exact steps required to prevent — and correct — AI-driven reputation risks.

1. Why Hallucinations Are a Major Reputation Risk

LLMs aren’t search engines. They generate synthetic answers.

This means hallucinations can create:

1. False features

“Ranktracker includes AI-driven backlink acquisition” (You never said this)

2. Incorrect pricing

“Ranktracker’s plans start at $199/month” (completely fabricated)

3. Misattributed reviews

“Mediocre customer satisfaction” (pulled from unrelated sentiment)

4. Wrong category placement

“Ranktracker is a digital marketing agency” (incorrect entity classification)

5. Blended brand identities

Models may merge:

- Ranktracker

- Rank Tracker plugins

- RankChecker tools

- Similar SaaS brands

6. Outdated information

LLMs often use:

- 2019 reviews

- old feature lists

- archived pages

7. Invented negatives

Models sometimes invent:

- scandals

- lawsuits

- acquisitions

- downtime

- breaches

These can cause real-world harm — decreasing conversions, distorting perception, and misleading potential customers.

2. Why LLMs Hallucinate About Brands (The Root Causes)

Across thousands of hallucination cases, four core reasons emerge.

1. Missing or Weak Brand Data

If your brand identity is unclear, AI improvises.

Indicators of weak data:

- inconsistent descriptions

- outdated features

- thin pages

- poor structured data

- inconsistent naming

- contradictory external profiles

LLMs fill the gaps with guesses.

2. Entity Confusion (Semantic Overlap)

If your name resembles:

✔ a generic phrase

✔ an older product

✔ a discontinued tool

✔ a competitor

✔ a plugin

AI merges the entities.

Example: Ranktracker vs “Rank Tracker plugin” vs “Rank tracking platform.”
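
One rough way to gauge this kind of overlap is to normalize the names and compare them as strings. The sketch below uses Python's standard `difflib`. The helper names and the 0.8 threshold are illustrative assumptions, and string similarity is only a proxy: it is not a measure any LLM is known to use.

```python
from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lowercase the name and keep only letters and digits."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def name_overlap(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two normalized names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def risky_neighbors(brand: str, others: list[str], threshold: float = 0.8) -> list[str]:
    """List names similar enough to the brand to invite entity merging.

    The threshold is an arbitrary starting point; tune it by hand.
    """
    return [other for other in others if name_overlap(brand, other) >= threshold]


if __name__ == "__main__":
    # Names taken from the example above.
    for candidate in ["Rank Tracker plugin", "RankChecker", "Rank tracking platform"]:
        print(f"{candidate!r}: {name_overlap('Ranktracker', candidate):.2f}")
```

Names that score high are the ones worth addressing with explicit disambiguation.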

3. Low External Consensus

If the web doesn’t consistently describe your brand, models assume uncertainty.

Factors:

- weak backlink profile

- missing Wikidata item

- contradictory PR

- outdated third-party profiles

- old scraped content

4. Model Bias Toward High-Authority Competitors

If competitors have cleaner data, AI relies on their descriptions to fill in the gaps.

This causes:

- feature theft

- wrong comparisons

- competitor-weighted summaries

- brand overshadowing

3. The Forms of Hallucination That Threaten Brand Reputation

Hallucinations come in five main varieties — each damaging in different ways.

A. Factual Hallucinations

AI invents:

✔ founders

✔ locations

✔ features

✔ pricing

✔ product capabilities

✔ integrations

✔ company size

✔ launch dates

These misrepresent your brand.

B. Sentiment Hallucinations

AI infers:

✔ negative sentiment

✔ inaccurate review stats

✔ exaggerated complaints

✔ invented customer dissatisfaction

This can suppress conversion rates.

C. Comparison Hallucinations

LLMs may:

✔ place you below competitors

✔ exaggerate competitor capabilities

✔ misstate differentiation

✔ produce inaccurate rankings

This reduces recommendation visibility.

D. Historical Hallucinations

Models may incorrectly reference:

✔ old owners

✔ old pricing

✔ discontinued tools

✔ outdated designs

✔ incorrect release years

This often happens due to archive crawling.

E. Legal or Compliance Hallucinations

Most dangerous:

✔ claims of regulatory violations

✔ privacy breaches

✔ lawsuits

✔ certifications you don’t hold

✔ compliance status you do hold but AI misstates

These demand urgent correction.

4. The Reputation Risk Management Framework (H-9)

Use this nine-pillar system to eliminate hallucination risk.

Pillar 1 — Create a Canonical Brand Identity

Define your brand in one authoritative sentence.

Use it everywhere:

✔ homepage

✔ About page

✔ Schema

✔ Wikidata

✔ PR

✔ directories

✔ documentation

Consistency reduces guesswork.
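
A consistency audit can be sketched in a few lines of Python. The example below compares the description found on each channel against the canonical sentence and reports the ones that drift. `CANONICAL`, the channel names, and the 0.9 cutoff are hypothetical placeholders, and the descriptions are assumed to have been collected by hand.

```python
from difflib import SequenceMatcher

# Hypothetical canonical sentence; replace it with your own.
CANONICAL = "ExampleBrand is an all-in-one SEO platform for rank tracking and site audits."


def _norm(text: str) -> str:
    """Collapse whitespace and case so trivial differences are ignored."""
    return " ".join(text.lower().split())


def drift_report(canonical: str, found: dict[str, str], min_ratio: float = 0.9) -> dict[str, float]:
    """Return {channel: similarity} for descriptions that stray from the canonical one."""
    report = {}
    for channel, text in found.items():
        ratio = SequenceMatcher(None, _norm(canonical), _norm(text)).ratio()
        if ratio < min_ratio:
            report[channel] = round(ratio, 2)
    return report
```

Any channel that appears in the report is a candidate for rewriting with the canonical sentence.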

Pillar 2 — Strengthen Structured Data

Use Schema:

✔ Organization

✔ Product

✔ SoftwareApplication

✔ FAQPage

✔ Review

✔ Person (author)

✔ WebPage

LLMs rely heavily on structured data to anchor brand facts.
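
As a minimal sketch, the markup for two of these types can be generated from a single set of facts so the values never diverge between pages. The property names (`sameAs`, `applicationCategory`, `offers`, `priceCurrency`) are standard schema.org vocabulary; the function names and every value passed in are hypothetical placeholders.

```python
import json


def organization_jsonld(name: str, url: str, description: str, same_as: list[str]) -> dict:
    """Build schema.org Organization markup as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "sameAs": same_as,  # profile URLs that identify the same entity
    }


def software_jsonld(name: str, url: str, description: str,
                    category: str, price: str, currency: str) -> dict:
    """Build schema.org SoftwareApplication markup with a single Offer."""
    return {
        "@context": "https://schema.org",
        "@type": "SoftwareApplication",
        "name": name,
        "url": url,
        "description": description,
        "applicationCategory": category,
        "offers": {"@type": "Offer", "price": price, "priceCurrency": currency},
    }


def to_script_tag(data: dict) -> str:
    """Wrap the markup in the script tag used to embed JSON-LD in a page."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


if __name__ == "__main__":
    # All values below are placeholders, not real brand facts.
    org = organization_jsonld(
        "ExampleBrand",
        "https://example.com",
        "ExampleBrand is an all-in-one SEO platform.",
        ["https://example.com/profile"],
    )
    print(to_script_tag(org))
```

Generating the markup from one source of truth keeps the name, description, and price identical wherever they appear.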

Pillar 3 — Build a Clean, Complete Wikidata Item

Wikidata is one of the strongest anti-hallucination anchors.

Update:

✔ description

✔ aliases

✔ founders

✔ features

✔ categories

✔ sameAs links

✔ identifiers

Weak Wikidata = high hallucination risk.

Pillar 4 — Clean Your Full Web Footprint

Audit:

- SaaS directories

- old reviews

- PR from 2018–2021

- outdated screenshots

- competitor-linked content

- legacy product pages

- abandoned subdomains

- scraped content

Remove or update everything that misrepresents your brand.

Pillar 5 — Publish High-Authority Factual Pages

Create AI-friendly pages with:

✔ feature lists

✔ pricing breakdowns

✔ Q&A blocks

✔ comparisons

✔ definitions

✔ documentation

These provide “ground truth” for models.

Pillar 6 — Monitor AI Platforms for Misrepresentations

Check:

✔ ChatGPT

✔ Gemini

✔ Copilot

✔ Claude

✔ Perplexity

✔ Apple Intelligence

✔ LLaMA enterprise copilots

Look for:

- invented features

- outdated details

- competitor bias

- wrong pricing

- legal statements

- false negatives
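
Part of this review can be automated once answers have been collected (by hand or by whatever export each platform allows). The sketch below checks an answer for dollar prices you never published and for terms that warrant manual review. The `FACTS` values, the `RED_FLAGS` list, and the function name are hypothetical, and the price pattern only handles plain amounts such as `$199` or `$29.00`.

```python
import re

# Hypothetical ground truth; replace with the facts you actually publish.
FACTS = {
    "brand": "ExampleBrand",
    "prices": {"29", "59"},
}

# Terms that should always trigger a manual look, true or not.
RED_FLAGS = ("lawsuit", "breach", "scandal", "acquired", "downtime")

# Matches plain dollar amounts only (no thousands separators).
PRICE_RE = re.compile(r"\$(\d+(?:\.\d{2})?)")


def audit_answer(answer: str, facts: dict) -> list[str]:
    """Return a list of issues found in one AI-generated answer."""
    issues = []
    for price in PRICE_RE.findall(answer):
        if price not in facts["prices"]:
            issues.append(f"unverified price: ${price}")
    lowered = answer.lower()
    for term in RED_FLAGS:
        if term in lowered:
            issues.append(f"review claim mentioning '{term}'")
    return issues
```

A keyword check like this only surfaces candidates. Each flagged answer still needs a human to decide whether it is a hallucination.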

Pillar 7 — Submit Corrections Using Official Channels

Platforms now support correction:

✔ OpenAI Model Correction

✔ Perplexity “Incorrect Source” reporting

✔ Google AI Overview feedback

✔ Bing Copilot correction portal

✔ Anthropic Safety Submissions

Corrections can reduce future hallucinations, though no platform guarantees when or whether a fix takes effect.

Pillar 8 — Strengthen External Consensus (Backlink Intelligence)

LLMs trust consensus across the web.

Use:

- Ranktracker Backlink Checker

- Backlink Monitor

High-quality backlinks stabilize your entity.

Pillar 9 — Maintain Recency Signals

LLMs love recency.

Update:

✔ features

✔ pricing

✔ changelogs

✔ documentation

✔ screenshots

✔ blog posts

✔ About page

Recency prevents outdated hallucinations.
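
A simple staleness check makes this routine. The sketch below takes the last-updated date of each page and returns the ones past a cutoff, oldest first. The 180-day default and the page names are assumptions for illustration; the source does not prescribe an update interval.

```python
from datetime import date


def stale_pages(last_updated: dict[str, date], today: date, max_age_days: int = 180) -> list[str]:
    """Return pages not updated within max_age_days, oldest first."""
    stale = [
        (today - updated, page)
        for page, updated in last_updated.items()
        if (today - updated).days > max_age_days
    ]
    # Sorting by age descending puts the most neglected page first.
    return [page for _, page in sorted(stale, reverse=True)]


if __name__ == "__main__":
    # Hypothetical pages and dates.
    pages = {
        "pricing": date(2023, 11, 1),
        "changelog": date(2024, 6, 1),
        "about": date(2022, 3, 5),
    }
    print(stale_pages(pages, today=date(2024, 7, 1)))
```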

5. Advanced Hallucination Mitigation (LLMO Techniques)

For maximum protection:

1. Issue an Official Brand Fact Sheet

A dedicated page with:

✔ brand summary

✔ founders

✔ product features

✔ pricing

✔ integrations

✔ category

✔ FAQs

Perfect for RAG ingestion.

2. Publish Comparison Pages

This prevents:

✔ competitor bias

✔ inaccurate comparisons

✔ misclassification

You guide the narrative.

3. Use Strong Entity Repetition

Repeat:

✔ brand name

✔ product name

✔ category

✔ feature terms

✔ differentiators

Entities stick when repeated with consistency.

4. Use Clear Disambiguation

If your name overlaps with another phrase:

✔ create a disambiguation section

✔ use Schema “disambiguatingDescription”

✔ clarify brand uniqueness

Helps prevent entity merging.
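
A minimal sketch of that markup, built as a Python dict and serialized to JSON-LD. `disambiguatingDescription` and `alternateName` are standard schema.org properties; the function name and the wording of the sample text are illustrative assumptions, not official brand copy.

```python
import json


def disambiguated_entity(name: str, description: str,
                         disambiguation: str, alternate_names: list[str]) -> dict:
    """Build Organization markup that states what the entity is and is not."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "alternateName": alternate_names,
        "description": description,
        # Short text that separates this entity from similarly named ones.
        "disambiguatingDescription": disambiguation,
    }


if __name__ == "__main__":
    entity = disambiguated_entity(
        name="Ranktracker",
        description="Ranktracker is an SEO platform.",  # illustrative wording
        disambiguation=(
            "Ranktracker (one word) is a specific SEO platform, not the generic "
            "term 'rank tracker' and not a rank tracker plugin."
        ),
        alternate_names=["Rank Tracker"],
    )
    print(json.dumps(entity, indent=2))
```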

5. Monitor Sentiment Drift

Track:

✔ sentiment summaries

✔ hallucinated review counts

✔ inferred user satisfaction

Correct them proactively.
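
One way to make drift visible is to label each collected AI summary as positive, neutral, or negative and watch the negative share over time. The sketch below assumes the labels are assigned by hand and keyed by ISO week; the function names and the 0.2 jump threshold are hypothetical.

```python
from collections import Counter


def negative_share(labels: list[str]) -> float:
    """Fraction of labels that are 'negative' (0.0 for an empty list)."""
    if not labels:
        return 0.0
    return Counter(labels)["negative"] / len(labels)


def drift_alerts(weekly_labels: dict[str, list[str]], jump: float = 0.2) -> list[str]:
    """Return the weeks whose negative share rose by more than `jump` over the prior week.

    Keys are expected to sort chronologically, e.g. '2024-W01', '2024-W02'.
    """
    alerts = []
    weeks = sorted(weekly_labels)
    for previous, current in zip(weeks, weeks[1:]):
        rise = negative_share(weekly_labels[current]) - negative_share(weekly_labels[previous])
        if rise > jump:
            alerts.append(current)
    return alerts
```

A flagged week is a prompt to reread that week's answers and trace where the negative framing came from.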

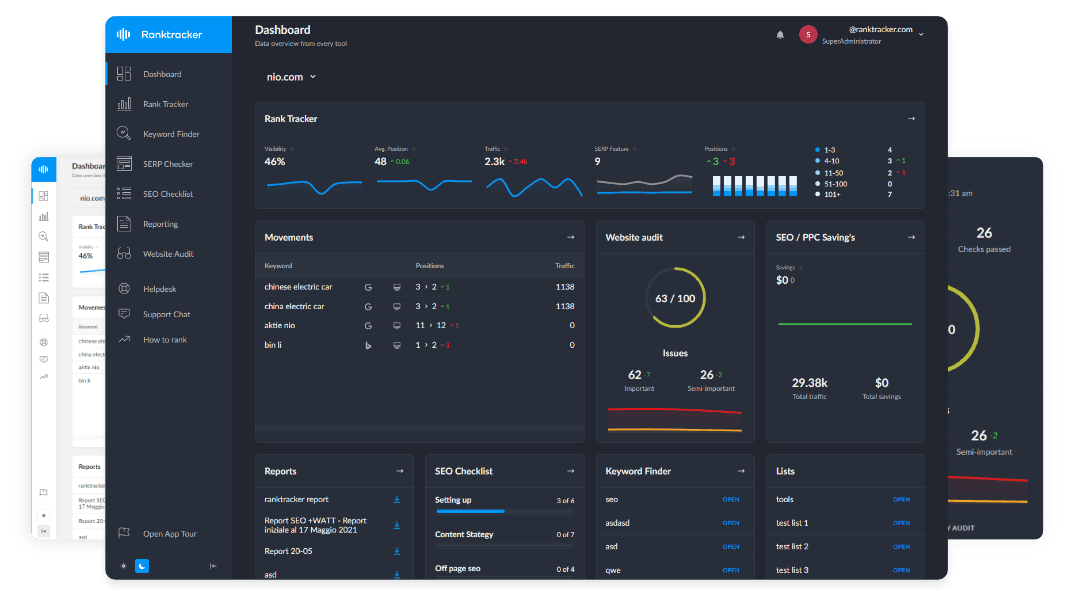

6. How Ranktracker Helps Prevent Hallucinated AI Damage

Ranktracker provides the infrastructure to stabilize brand identity:

Web Audit

Reveals:

✔ schema gaps

✔ inconsistent metadata

✔ accessibility issues

✔ outdated information

Essential for reducing hallucination risk.

SERP Checker

Shows entity relationships and competitor adjacency.

Backlink Checker & Monitor

Strengthens external consensus and reduces confusion.

Keyword Finder

Builds factual clusters AI models can rely on.

AI Article Writer

Produces structured, consistent, machine-readable content that LLMs interpret correctly.

Together, these tools form the foundation of hallucination-proof brand visibility.

**Final Thought: Controlling AI Hallucinations Is the New Frontier of Reputation Management**

Your reputation is no longer shaped only by:

✔ reviews

✔ press

✔ social media

✔ search results

It is shaped by AI-generated content — answers created about your brand that you did not write.

If AI misrepresents you, users believe the model, not your website.

To protect your brand, you must:

✔ anchor your entity

✔ correct misstatements

✔ clean your footprint

✔ build structured clarity

✔ strengthen external signals

✔ maintain recency

✔ monitor constantly

This is the new reality of AI-driven discovery.

If you don’t define your brand for the model, the model will define your brand for you.

And you may not like the result.