Intro

Technical SEO covers the site features that let search engines crawl your site, index its content, and rank its pages. Strong content alone will not secure rankings if technical SEO problems exist. A site's visibility and performance decline significantly when search engines run into technical issues such as slow loading, indexing mistakes, duplicate content, and broken links. Online success demands a systematic approach to resolving these problems, because fixing them directly improves your position on search engine results pages (SERPs).

This guide covers the leading technical SEO problems that hurt rankings and explains how to fix them. Strategically improving these aspects of your site leads to better performance, more organic traffic, and stronger user engagement. Let’s dive in!

1. Slow Website Speed

Page load time is a Google ranking factor. Slow pages frustrate users, and many of them leave immediately (bounce). Fast websites give users a better experience, which is why Google takes speed into account when ranking sites. Common causes of poor performance include unoptimized images, excessive HTTP requests, and server-side problems. Tools such as Google PageSpeed Insights and GTmetrix identify specific speed issues and suggest steps to fix them.

To speed up your site, compress images with tools such as TinyPNG or ImageOptim. Reduce the number of HTTP requests by removing unnecessary scripts, plugins, and third-party integrations. Content delivery networks (CDNs), caching, and lazy loading improve performance further. Because speed affects both user experience and search rankings, monitor your speed metrics regularly and keep optimizing.
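Before trimming scripts and plugins, it helps to see how many requests a page makes and which third-party hosts serve them. The following is a minimal sketch using only Python's standard library; `ResourceCounter` and `request_summary` are hypothetical names, and the audit only sees resources declared in the HTML (not CSS `@import`s, web fonts, or files loaded later by JavaScript), so PageSpeed Insights or GTmetrix remain the authoritative measurement.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ResourceCounter(HTMLParser):
    """Collects URLs of scripts, images, iframes, and stylesheets declared in HTML."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        url = None
        if tag in ("script", "img", "iframe"):
            url = a.get("src")  # inline <script> blocks have no src and cost no extra request
        elif tag == "link" and "stylesheet" in (a.get("rel") or "").lower().split():
            url = a.get("href")
        if url:
            # Resolve relative paths against the page URL so hosts can be compared.
            self.resources.append(urljoin(self.page_url, url))


def request_summary(html_text, page_url):
    """Return the number of referenced resources and the third-party hosts serving them."""
    counter = ResourceCounter(page_url)
    counter.feed(html_text)
    own_host = urlparse(page_url).netloc
    third_party = sorted({urlparse(u).netloc for u in counter.resources} - {own_host})
    return {"total": len(counter.resources), "third_party_hosts": third_party}


html = """
<link rel="stylesheet" href="/css/site.css">
<script src="https://cdn.example.net/widget.js" async></script>
<img src="hero.jpg">
<script>console.log("inline");</script>
"""
print(request_summary(html, "https://example.com/"))
# {'total': 3, 'third_party_hosts': ['cdn.example.net']}
```

Each third-party host in the result is a candidate to remove or self-host, since every extra host also adds its own connection setup.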

2. Broken Links and 404 Errors

"Broken links hurt you with both users and search engines: visitors get a frustrating experience, and algorithms treat them as a sign of a poorly maintained website. A 404 error page creates navigation problems for users and search engine bots alike, which can degrade rankings. The leading causes of broken links are deleted pages, incorrect URLs, and changes to site structure made without correctly implemented redirects," reported B. Flynn, Manager at Homefield IT.

Tools such as Google Search Console, Ahrefs, and Screaming Frog help you find 404 errors. Fix each one by updating the link to point to the correct page or by setting up a 301 redirect. When all internal and external links work correctly, users get a better experience, which search engines tend to reward with better rankings.
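The crawlers named above do this at scale, but the core check is simple: request each linked URL and flag anything that errors or returns a 4xx/5xx status. This is a minimal standard-library sketch, not how any of those tools work internally; `http_status` and `find_broken_links` are hypothetical helpers, and the status lookup is injectable so the logic can be tried without network access.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def http_status(url, timeout=10.0):
    """Return the final HTTP status for url (redirects are followed), or None if unreachable."""
    try:
        # HEAD avoids downloading the body; some servers reject HEAD,
        # so a GET fallback may be needed in practice.
        with urlopen(Request(url, method="HEAD"), timeout=timeout) as resp:
            return resp.status
    except HTTPError as err:
        return err.code  # 4xx/5xx responses are raised as HTTPError
    except OSError:
        return None  # DNS failure, refused connection, timeout, etc.


def find_broken_links(urls, fetch_status=http_status):
    """Map each broken URL (status >= 400 or unreachable) to its status."""
    broken = {}
    for url in urls:
        status = fetch_status(url)
        if status is None or status >= 400:
            broken[url] = status
    return broken


# Offline demo with a stubbed status lookup instead of real requests.
fake = {"https://example.com/": 200, "https://example.com/old-page": 404}
print(find_broken_links(fake, fetch_status=fake.get))  # {'https://example.com/old-page': 404}
```

Each URL in the result then needs either a corrected link or a 301 redirect to the page that replaced it.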

3. Duplicate Content Issues

Duplicate content makes it difficult for search engines to decide which version of a page to index and rank, and the competing versions can lose rankings as a result. The problem arises when different URLs serve identical content. Three common sources are URL variations, session IDs, and printer-friendly versions of pages.

Use canonical tags (rel="canonical") to tell search engines which URL is the primary version of each page. Where duplicate URLs don't need to exist, use 301 redirects to consolidate them into a single preferred URL. Audit the site regularly for duplicate content, using tools such as Siteliner (duplicates within your site) and Copyscape (copies elsewhere on the web) together.
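The idea behind a duplicate-content audit can be sketched in a few lines: fingerprint each page's visible text, group URLs whose fingerprints match, and give the whole group one canonical URL. This Python sketch assumes you already have each page's HTML; the tag-stripping regex is a crude simplification, exact hashing only catches identical text (not near-duplicates), and choosing the shortest URL as canonical is a naive placeholder for a deliberate decision.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(html_text):
    """Hash the page text after stripping tags, collapsing whitespace, and lowercasing."""
    text = re.sub(r"<[^>]+>", " ", html_text)  # crude: real pages need a proper parser
    text = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def group_duplicates(pages):
    """pages maps URL -> HTML. Return sorted groups of URLs with identical text."""
    groups = defaultdict(list)
    for url, html_text in pages.items():
        groups[content_fingerprint(html_text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


def canonical_tag(preferred_url):
    """The tag each duplicate (and the preferred page itself) should carry in <head>."""
    return f'<link rel="canonical" href="{preferred_url}" />'


pages = {
    "https://example.com/shoes": "<h1>Running Shoes</h1><p>Lightweight   and fast.</p>",
    "https://example.com/shoes?sessionid=42": "<h1>running shoes</h1> <p>Lightweight and fast.</p>",
    "https://example.com/print/shoes": "<div>Running Shoes Lightweight and fast.</div>",
    "https://example.com/boots": "<h1>Hiking Boots</h1>",
}
for group in group_duplicates(pages):
    preferred = min(group, key=len)  # naive heuristic; pick the primary URL deliberately
    print(group, "->", canonical_tag(preferred))
```

Here the session-ID and printer-friendly variants land in one group with the main product URL, matching the three sources of duplication described above.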

4. Improper Indexing

"Pages that search engines fail to index are invisible in search results, which means lost traffic. Common causes are misconfigured robots.txt rules, meta tags that block indexing, and noindex tags accidentally left on core pages," explained Dean Lee, Head of Marketing at Sealions.

Make sure your robots.txt file doesn't block important pages. Use the URL Inspection tool in Google Search Console to check whether a page is indexed and to request indexing. Review robots meta tags such as <meta name="robots" content="noindex"> and remove them from pages you want indexed, so important content isn't unintentionally deindexed.
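Both checks in that paragraph can be automated for a list of important URLs. The sketch below uses Python's built-in `urllib.robotparser` for robots.txt rules and `html.parser` for robots meta tags; the helper names are hypothetical, and the sample robots.txt and URLs are invented for illustration. Python's parser is not identical to Googlebot's, so treat its answers as a first pass and confirm with URL Inspection.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that robots_txt disallows for user_agent."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    # Note: Python applies the first matching rule, while Google uses the most
    # specific (longest) one, so overlapping Allow/Disallow rules can differ.
    return [u for u in urls if not rules.can_fetch(user_agent, u)]


class RobotsMetaFinder(HTMLParser):
    """Detects <meta name="robots"|"googlebot" content="...noindex..."> tags."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}
        if tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            directives = {d.strip().lower() for d in a.get("content", "").split(",")}
            # "none" is shorthand for "noindex, nofollow".
            if directives & {"noindex", "none"}:
                self.noindex = True


def has_noindex(html_text):
    finder = RobotsMetaFinder()
    finder.feed(html_text)
    return finder.noindex


robots = """User-agent: *
Disallow: /admin/
Disallow: /blog/
"""
important = ["https://example.com/blog/seo-guide", "https://example.com/products/"]
print(blocked_urls(robots, important))  # ['https://example.com/blog/seo-guide']
print(has_noindex('<meta name="robots" content="noindex, follow">'))  # True
```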

5. Poor Mobile Usability

Mobile-friendliness must be a priority because Google uses mobile-first indexing: it primarily crawls and indexes the mobile version of a site. Sites without proper mobile support rank worse in search results and deliver inferior user experiences. Common issues include non-responsive design, text that is too small to read, and clickable elements placed too close together.

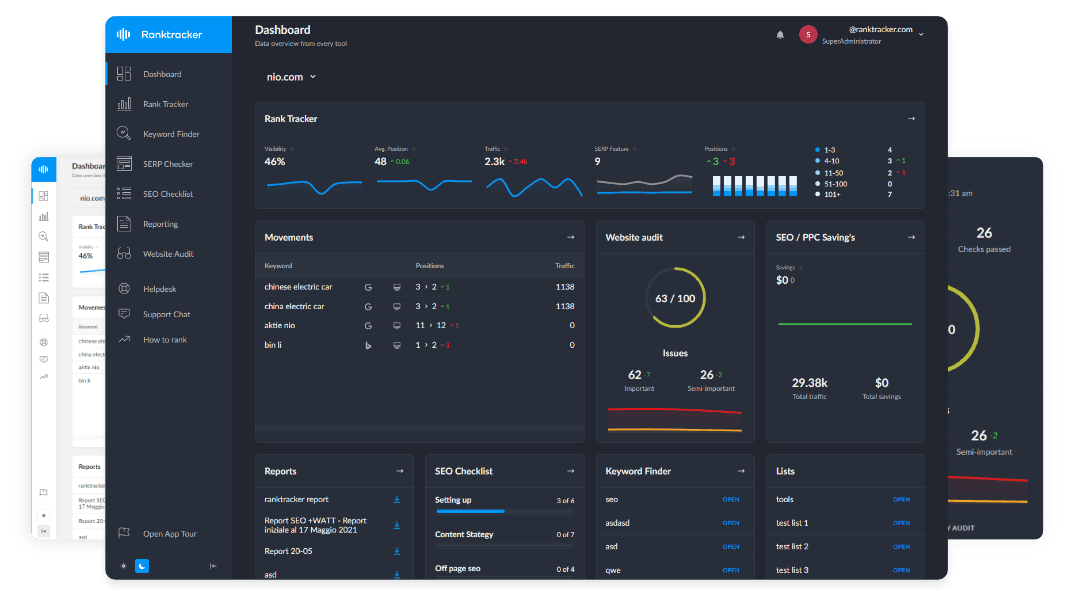

"A Google Mobile-Friendly Test evaluation will reveal mobile usability issues. Implement a responsive design framework so the layout adapts to different screen sizes and navigation stays smooth. Enlarge touch elements and fonts, and remove intrusive interstitials to maintain a quality user experience," stated Gerrid Smith, Founder & CEO of Fortress Growth.
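Responsive design depends on a viewport meta tag; without `width=device-width`, mobile browsers render the page at desktop width and shrink it. This minimal Python sketch checks only that tag. It cannot judge font sizes or tap-target spacing, which need a rendering tool such as Lighthouse. `viewport_issues` is a hypothetical helper, and flagging `user-scalable=no` is an accessibility-motivated choice rather than a stated Google rule.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}
        if tag == "meta" and a.get("name", "").lower() == "viewport" and self.content is None:
            self.content = a.get("content", "")


def viewport_issues(html_text):
    """Return a list of viewport problems that commonly break mobile layouts."""
    finder = ViewportFinder()
    finder.feed(html_text)
    if finder.content is None:
        return ['missing <meta name="viewport"> tag']
    settings = {}
    for part in finder.content.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    issues = []
    if settings.get("width") != "device-width":
        issues.append("viewport width is not device-width")
    if settings.get("user-scalable") == "no":
        issues.append("pinch-zoom is disabled (user-scalable=no)")
    return issues


print(viewport_issues('<meta name="viewport" content="width=device-width, initial-scale=1">'))  # []
print(viewport_issues("<title>Desktop-only page</title>"))  # ['missing <meta name="viewport"> tag']
```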

6. Insecure Website (No HTTPS)

Google uses HTTPS as a ranking signal, so secure sites can rank higher than sites served over plain HTTP. Without an SSL certificate, browsers warn visitors that your site is not secure, which erodes their trust and drives them away. To fix this, install an SSL certificate from a trusted provider, then set up 301 redirects from every HTTP URL to its HTTPS equivalent. Finally, update internal links to use HTTPS and fix mixed content (HTTP resources loaded on HTTPS pages) so every page stays secure.
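Two parts of an HTTPS migration lend themselves to a quick script: the one-to-one HTTP-to-HTTPS mapping each 301 redirect should follow, and a scan for `http://` sub-resources that cause mixed-content warnings. The sketch below is a minimal Python illustration with hypothetical helper names; the redirects themselves are configured on your web server or CDN, and the scan only sees URLs written directly in the HTML.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit, urlunsplit


def https_url(url):
    """Return the HTTPS equivalent of an HTTP URL (path and query unchanged)."""
    parts = urlsplit(url)
    if parts.scheme == "http":
        parts = parts._replace(scheme="https")
    return urlunsplit(parts)


class MixedContentFinder(HTMLParser):
    """Collects http:// URLs referenced by tags that load sub-resources."""

    RESOURCE_ATTRS = {"img": "src", "script": "src", "iframe": "src",
                      "audio": "src", "video": "src", "source": "src", "link": "href"}

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr = self.RESOURCE_ATTRS.get(tag)
        value = dict(attrs).get(attr) if attr else None
        # <link> also covers canonical/alternate links; those aren't mixed content
        # strictly speaking, but they should point to HTTPS after a migration too.
        if value and value.lower().startswith("http://"):
            self.insecure.append(value)


def find_mixed_content(html_text):
    finder = MixedContentFinder()
    finder.feed(html_text)
    return finder.insecure


page = '<img src="http://example.com/logo.png"><script src="https://example.com/app.js"></script>'
print(find_mixed_content(page))                          # ['http://example.com/logo.png']
print(https_url("http://example.com/pricing?plan=pro"))  # https://example.com/pricing?plan=pro
```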

7. Incorrect Use of Hreflang Tags

“Sites that serve different language groups and geographic regions must implement hreflang tags without errors,” said Serbay Arda Ayzit, Founder of InsightusConsulting. Improper hreflang tags make it harder for search engines to index content properly and can make language versions look like duplicate content. When search engines cannot identify the correct language- or region-specific pages, they cannot deliver suitable content to users. The most common implementation mistakes are incorrect language or country codes, missing self-referencing tags, and conflicting annotations. When a search engine fails to determine the correct language version, one of two things can happen: the wrong page ranks for a market, or the intended page does not appear at all.

To resolve hreflang problems, include a complete set of hreflang tags in the head element of each page. Use valid codes: an ISO 639-1 language code, optionally followed by an ISO 3166-1 Alpha 2 region code, as in en-us for US English and fr-ca for Canadian French. Each page's hreflang set must also include a tag that references its own URL. An hreflang testing tool can verify your implementation and catch conflicts before they cause ranking issues.
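A sketch of the two rules above: every page in a language cluster carries the same full set of tags (including its own URL), and every reference must be reciprocated. The Python helpers below are hypothetical, the URLs are invented, and the optional `x-default` entry is a fallback value that Google supports for users who match no listed version.

```python
def hreflang_tags(alternates, x_default=None):
    """Build the <link> tags for one cluster of language/region versions.

    alternates maps hreflang codes (e.g. "en-us") to absolute URLs. The same
    full set, including the page's own entry, belongs in every page's <head>.
    """
    tags = [f'<link rel="alternate" hreflang="{code}" href="{url}" />'
            for code, url in sorted(alternates.items())]
    if x_default:
        # Optional fallback for users whose language/region matches no version.
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{x_default}" />')
    return tags


def check_hreflang_cluster(annotations):
    """annotations maps each page URL to the set of URLs its hreflang tags reference.

    Reports missing self-references and missing return links (A -> B without B -> A).
    """
    problems = []
    for page in sorted(annotations):
        targets = annotations[page]
        if page not in targets:
            problems.append(f"{page}: missing self-referencing hreflang")
        for target in sorted(targets - {page}):
            if page not in annotations.get(target, set()):
                problems.append(f"{page} -> {target}: no return link")
    return problems


for tag in hreflang_tags({"en-us": "https://example.com/en/", "fr-ca": "https://example.com/fr/"}):
    print(tag)

print(check_hreflang_cluster({
    "https://example.com/en/": {"https://example.com/en/", "https://example.com/fr/"},
    "https://example.com/fr/": {"https://example.com/fr/"},  # forgot to link back to /en/
}))
```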

8. Missing or Poor XML Sitemap

An XML sitemap helps search engines understand how your site is organized and improves how its pages get indexed. A missing, outdated, or broken sitemap can keep search engines from discovering new pages, so that content never appears in search results. Errors such as broken links, non-canonical URLs, and unnecessary pages in the sitemap confuse search engines and weaken your SEO results.

"To solve this issue, generate an XML sitemap with tools such as Yoast SEO, Screaming Frog, or Google XML Sitemaps. The sitemap should contain your vital pages and leave out duplicates, thank-you pages, and administrative sections. Submit it to Google Search Console, and update it whenever you publish new pages so dynamic content stays current. Regular sitemap inspections help search engines see your website's correct structure," Carl Panepinto, Marketing Director at Manhattan Flood Restoration, pointed out.
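For sites without a plugin, a sitemap following the sitemaps.org protocol can be generated directly. This Python sketch uses `xml.etree.ElementTree` from the standard library; `build_sitemap` and the excluded path prefixes are hypothetical and should reflect your own site's utility and admin pages.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
# Hypothetical path prefixes that should never appear in the sitemap.
EXCLUDED_PREFIXES = ("/thank-you", "/admin", "/wp-admin")


def build_sitemap(pages):
    """pages: iterable of (absolute_url, lastmod) pairs, lastmod as 'YYYY-MM-DD' or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen = set()
    for url, lastmod in pages:
        if url in seen or urlparse(url).path.startswith(EXCLUDED_PREFIXES):
            continue  # skip duplicates and excluded sections
        seen.add(url)
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes &, <, >
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


pages = [
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/blog/technical-seo", "2024-04-18"),
    ("https://example.com/thank-you", None),                   # excluded
    ("https://example.com/blog/technical-seo", "2024-04-18"),  # duplicate, skipped
]
print(build_sitemap(pages))
```

Regenerating the file whenever pages are published or removed keeps it in step with the site, and the sitemap URL is what you submit in Google Search Console.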

9. Poor Internal Linking Structure

Effective internal linking helps search engines discover pages, distributes authority across the site, and improves navigation. Weak internal linking, such as orphan pages that no other page links to, broken links, or excessive links piled onto a single page, hurts search rankings. When the link structure lacks a clear logical hierarchy, search engines struggle to understand how content is related and find new content less efficiently.

"Audit your site with Screaming Frog or Ahrefs to detect orphan pages and broken links. Link deliberately, so that essential pages receive enough relevant internal links from valuable content pages. Use descriptive anchor text to strengthen keyword relevance, and keep the number of links on any single page reasonable to avoid link dilution. A clear strategy for linking between pages enhances SEO performance and user engagement," noted Hassan Usmani, Link Building Agency Owner of Esteem Links.
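Orphan detection boils down to comparing two lists: every page you want indexed (for example, from your sitemap) and every page that at least one other page links to (from a crawl). This Python sketch assumes you already have the crawl as a simple link graph; the helper names and sample paths are hypothetical.

```python
from collections import Counter


def inbound_link_counts(link_graph):
    """link_graph maps each page to the pages it links to. Self-links are ignored."""
    counts = Counter()
    for source, targets in link_graph.items():
        for target in set(targets):  # count each linking page once
            if target != source:
                counts[target] += 1
    return counts


def find_orphans(all_pages, link_graph, homepage="/"):
    """Pages (other than the homepage) that no crawled page links to."""
    counts = inbound_link_counts(link_graph)
    return sorted(p for p in all_pages if p != homepage and counts[p] == 0)


graph = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/contact/"],
    "/blog/": ["/blog/post-1/", "/services/"],
}
sitemap_pages = ["/", "/services/", "/blog/", "/blog/post-1/", "/contact/", "/blog/post-2/"]
print(find_orphans(sitemap_pages, graph))         # ['/blog/post-2/']
print(inbound_link_counts(graph).most_common(1))  # [('/services/', 2)]
```

The inbound counts double as a rough view of where internal authority flows: important pages with only one or two inbound links are candidates for more links from related content.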

10. Lack of Structured Data (Schema Markup)

Schema markup, also known as structured data, gives search engines more precise information about your content so they can display rich results. Without structured data, your listings miss out on enhancements such as star ratings, FAQs, event details, and product information. Plain listings are less visible in search results, which can reduce click-throughs.

To avoid this problem, implement schema markup in JSON-LD, the format Google recommends. Google's Structured Data Markup Helper and Schema.org resources can help you create markup for products, reviews, events, and articles. Validate your markup with Google's Rich Results Test and track errors in Google Search Console. Schema markup makes your pages more prominent in search results, which can produce higher traffic.
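JSON-LD is just a JSON object in a `<script type="application/ld+json">` tag, so it is easy to generate from a CMS or build step. This Python sketch builds a schema.org `Article`; `article_jsonld` is a hypothetical helper, the chosen properties are illustrative rather than a complete list of what a given rich result requires, and the Rich Results Test remains the way to confirm eligibility.

```python
import json


def article_jsonld(headline, author_name, date_published, url):
    """Return a JSON-LD <script> tag describing an Article (schema.org vocabulary)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2024-05-01"
        "mainEntityOfPage": url,
    }
    # Escape "</" so text like "</script>" cannot close the tag early.
    payload = json.dumps(data, ensure_ascii=False, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(article_jsonld("Common Technical SEO Problems", "Jane Doe", "2024-05-01",
                     "https://example.com/blog/technical-seo"))
```

Generating the markup from the same data that renders the page keeps the two from drifting apart, a common source of structured-data errors.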

11. Inefficient Use of Canonical Tags

Canonical tags (rel="canonical") resolve duplicate content by telling search engines which page is the primary version. Misused canonical tags confuse search engines, for example when pages point to the wrong URL, when duplicate pages reference themselves instead of the primary version, or when necessary canonical tags are missing. The result can be lower search rankings.

Site audit tools such as Sitebulb or Screaming Frog detect canonical mistakes so you can fix them. Every duplicate or variant page should carry a canonical tag pointing to the primary version, and the primary page should reference itself. Handle paginated pages with care: each page in a series should generally be its own canonical rather than pointing to the first page. Don't use canonical tags where a redirect is the right tool, such as sending HTTP URLs to their HTTPS versions. Correct canonical implementation helps search engines find your most valuable content and understand how your site is organized.
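The mistakes listed above can be caught with a small audit over crawl data. This Python sketch assumes you have already extracted each page's canonical URL and know which URLs return HTTP 200; `audit_canonicals` is a hypothetical helper, and it flags only three issue types (missing tags, canonicals pointing at non-live URLs, and canonical chains).

```python
def audit_canonicals(canonicals, live_urls):
    """Flag common canonical-tag mistakes.

    canonicals: page URL -> canonical URL found in its <head> (None if absent).
    live_urls: set of URLs known to return HTTP 200.
    """
    issues = []
    for page, target in sorted(canonicals.items()):
        if target is None:
            issues.append(f"{page}: missing canonical tag")
        elif target not in live_urls:
            issues.append(f"{page}: canonical points to non-live URL {target}")
        elif canonicals.get(target, target) != target:
            # The target itself canonicalizes elsewhere, forming a chain.
            issues.append(f"{page}: canonical chain via {target}")
    return issues


canonicals = {
    "/shoes": "/shoes",                     # primary page references itself
    "/shoes?color=red": "/shoes",           # variant points to the primary
    "/boots": None,
    "/sale": "/old-sale",
    "/shoes?ref=ad": "/shoes?color=red",
}
live = {"/shoes", "/shoes?color=red", "/boots", "/sale", "/shoes?ref=ad"}
for issue in audit_canonicals(canonicals, live):
    print(issue)
```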

12. Excessive Redirect Chains and Loops

Redirects help users and search engines reach the correct URL when pages are moved or deleted. But chains of multiple redirects, or loops in which redirects point back to one another, slow down crawling, increase page load time, and cause indexing problems. These issues typically stem from incorrect site migrations, site restructuring, or plugin conflicts.

"Use Screaming Frog and Google Search Console to find and fix redirect chains and loops. Replace each chain with a single 301 redirect that sends users directly to the final destination. Resolve redirect loops by removing conflicting redirects, and confirm that every redirect points to a current, live page. Keeping redirects short and direct improves page load time and crawl speed," says Nick Oberheiden, Founder at Oberheiden P.C.
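Given an export of your redirect rules, collapsing chains into single hops and spotting loops is a small graph walk. This Python sketch assumes the rules are available as a source-to-target mapping with one hop each; `flatten_redirects` is a hypothetical helper, and the resulting map still has to be written back into your server or plugin configuration.

```python
def flatten_redirects(redirects):
    """redirects maps source path -> target path (one hop each).

    Returns (flat, loops): flat maps every source whose chain terminates to its
    final destination, so each chain can become a single 301; loops lists
    sources whose chain never terminates.
    """
    flat, loops = {}, []
    for source, target in redirects.items():
        seen = {source}
        current = target
        while current in redirects:  # keep following hops
            if current in seen:
                loops.append(source)  # revisited a URL: this chain is a loop
                break
            seen.add(current)
            current = redirects[current]
        else:
            flat[source] = current  # reached a URL that is not redirected
    return flat, loops


rules = {"/old": "/interim", "/interim": "/new", "/x": "/y", "/y": "/x"}
flat, loops = flatten_redirects(rules)
print(flat)   # {'/old': '/new', '/interim': '/new'}
print(loops)  # ['/x', '/y']
```

The final destinations in `flat` should also be checked for a 200 status, since a chain that ends on a 404 is still a broken redirect.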

13. Inconsistent URL Structures

Inconsistent URL structures cause three significant problems: weaker indexing performance, duplicate content, and a poorer user experience. Variations such as trailing slashes (/), mixed uppercase and lowercase letters, session IDs, and excessive parameters create multiple URLs for the same content, which confuses search engines and can lower rankings.

Define and document one standard URL format for the whole site. Every URL should use lowercase letters, follow a uniform trailing-slash rule, and avoid parameters that generate duplicate content. Redirect outdated URLs to their standardized versions with 301 redirects, then update internal links to point directly to the new URLs. A consistent URL structure improves site navigation and helps search engines understand how your site is arranged.
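A written URL standard is easiest to enforce as a normalization function that audits, redirects, and link updates can all share. This Python sketch implements one possible policy (lowercase, trailing slash on non-file paths, session and tracking parameters dropped, remaining parameters sorted); the ignored-parameter list and `normalize_url` are hypothetical and must match what actually changes content on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that never change page content on this site.
IGNORED_PARAMS = {"sessionid", "sid", "phpsessid", "utm_source", "utm_medium", "utm_campaign"}


def normalize_url(url, trailing_slash=True):
    """Map a URL to its single standardized form."""
    parts = urlsplit(url)
    # Lowercasing the path assumes old mixed-case URLs are 301-redirected,
    # since many servers treat paths as case-sensitive.
    path = parts.path.lower() or "/"
    last_segment = path.rsplit("/", 1)[-1]
    if trailing_slash and not path.endswith("/") and "." not in last_segment:
        path += "/"  # apply the slash rule to page URLs, not files like guide.pdf
    query = sorted((k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
                   if k.lower() not in IGNORED_PARAMS)
    # Fragments are never sent to the server, so they are dropped.
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))


print(normalize_url("HTTPS://Example.com/Blog/Post?utm_source=news&page=2&SID=abc#top"))
# https://example.com/blog/post/?page=2
```

Any crawled URL that differs from its normalized form is a candidate for a 301 redirect and an internal-link update.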

14. Unoptimized Image SEO

Images play a major role in how a website looks and how users interact with it. Poorly optimized images slow a site down, which harms search engine rankings. Oversized files, missing alt text, and inappropriate formats hurt both SEO and accessibility. Images with nondescriptive file names and poorly organized image data are less likely to appear in Google Images results.

"To address image SEO problems, web administrators should use tools such as TinyPNG and ImageOptim to reduce file sizes while preserving visual quality. Give images descriptive, keyword-rich file names instead of generic ones like 'IMG001.jpg.' Adding the necessary alt text to every image improves accessibility and SEO at the same time. Next-gen formats such as WebP make images load faster, and lazy loading speeds up the initial page load further. Proper image optimization improves website speed and helps search engines find your content more efficiently," asserts Gemma Hughes, Global Marketing Manager at iGrafx.
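The checks in that advice can be run against a page's HTML before reaching for a full crawler. This Python sketch flags missing alt attributes, generic camera-style file names, formats other than WebP/AVIF, and images without `loading="lazy"`. The generic-name pattern and `audit_images` are hypothetical, and it cannot measure file sizes, which still need a compression tool or PageSpeed Insights.

```python
import posixpath
import re
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical pattern for default names such as IMG001.jpg or DSC_1234.png.
GENERIC_NAME = re.compile(r"^(img|image|dsc|photo|screenshot)[-_ ]?\d*$", re.IGNORECASE)
NEXT_GEN_EXTENSIONS = {".webp", ".avif"}


class ImageAuditor(HTMLParser):
    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        a = dict(attrs)
        src = a.get("src") or ""
        stem, ext = posixpath.splitext(posixpath.basename(urlparse(src).path))
        if "alt" not in a:  # alt="" is fine for purely decorative images
            self.issues.append(f"{src}: missing alt attribute")
        if GENERIC_NAME.match(stem):
            self.issues.append(f"{src}: generic file name")
        if ext.lower() not in NEXT_GEN_EXTENSIONS:
            self.issues.append(f"{src}: not served as WebP/AVIF")
        # Images visible on first load usually should NOT be lazy-loaded.
        if (a.get("loading") or "").lower() != "lazy":
            self.issues.append(f'{src}: no loading="lazy" (fine if above the fold)')


def audit_images(html_text):
    auditor = ImageAuditor()
    auditor.feed(html_text)
    return auditor.issues


html = ('<img src="/photos/IMG001.jpg">'
        '<img src="/img/red-running-shoes.webp" alt="Red running shoes" loading="lazy">')
for issue in audit_images(html):
    print(issue)
```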

Conclusion

Technical SEO ensures that search engines can crawl your site properly, process its content, and rank it appropriately. Website administrators must address technical SEO problems, because neglecting them hurts search rankings, which in turn reduces site visits and user engagement. Fixing broken links, resolving indexing issues, eliminating duplicate content, and improving site speed all increase visibility.

Long-term SEO success rests on thorough site audits, structured internal linking, schema markup, and well-optimized URLs. Actively solving technical SEO problems helps sustain top rankings while improving user experience. Tracking your site's performance keeps it technically sound and supports continued visitor growth ahead of your competitors.