Intro

Generative Engine Optimization (GEO) is still new, but it’s no longer theoretical. Between 2024 and 2025, we collected and analyzed early GEO performance data from more than 100 brands across SaaS, e-commerce, finance, health, education, hospitality, and professional services.

The goal wasn’t to rank industries. It was to identify patterns in:

- how often brands appear in generative answers
- which factors drive inclusion
- how engines evaluate trust
- how AI misinterprets certain brands
- which industries gain or lose visibility
- what “good” GEO performance currently looks like

This report presents one of the earliest large-scale datasets on GEO visibility and offers early, practical benchmarks for companies preparing for the AI-first search era.

Part 1: The Methodology Behind the Benchmarks

To establish reliable GEO benchmarks, we analyzed:

- 100+ brands
- 12,000+ generative queries
- across 7 generative engines
- using 7 query categories
- over 4 months of longitudinal sampling

Generative engines included:

- Google SGE
- Bing Copilot
- ChatGPT Search
- Perplexity
- Claude Search
- Brave Summaries
- You.com

We tested:

- informational queries
- transactional queries
- brand queries
- comparison queries
- multi-modal queries
- agentic workflow queries
- troubleshooting queries

For each test, we measured:

- appearance frequency (did the brand appear at all?)
- answer share (how often did it appear compared to competitors?)
- citation stability (is it included repeatedly or inconsistently?)
- interpretation accuracy (does the AI describe it correctly?)
- entity confidence (does the engine “know” the brand?)
- fact consistency (are details consistent across engines?)
- multi-modal recognition (image/video-based detection success)

These seven metrics form the basis of the benchmarks that follow.

Part 2: The Three GEO Performance Tiers (And What They Mean)

Across 100+ brands, clear visibility tiers emerged.

Tier 1 — High GEO Visibility (Top ~15%)

Brands in this tier are consistently:

- cited across multiple engines
- accurately described
- selected in comparison answers
- included in multi-step summaries
- recognized in multi-modal queries
- referenced across transactional and informational intents

Characteristics of Tier 1 brands:

- strong entity structures
- well-defined facts pages
- consistent naming across platforms
- first-source content
- high authority trust scores
- active correction workflows
- structured formatting across major pages

These brands dominate generative visibility even when they are not the biggest SEO players.

Tier 2 — Medium GEO Visibility (~60%)

Brands in this tier appear:

- occasionally
- inconsistently
- often in long-form answers
- rarely in top-level summaries
- sometimes misattributed
- not across all engines

Characteristics:

- some entity clarity
- reasonably strong SEO
- inconsistent structured data
- minimal first-source content
- outdated pages or unclear definitions
- low correction cadence

They are at risk of losing visibility as engines become more selective.

Tier 3 — Low / No GEO Visibility (~25%)

Brands in this group are:

- unseen
- unrecognized
- misidentified
- incorrectly grouped
- excluded from comparisons
- not referenced in summaries

Characteristics:

- inconsistent brand naming
- conflicting data across platforms
- weak entity presence
- unstructured content
- outdated or inaccurate facts
- low authority signals
- no canonical definitions

These brands are essentially invisible in the generative layer. SEO alone will not save them.
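Inconsistent brand naming is the first Tier 3 characteristic, and it is straightforward to audit. This is a minimal sketch, assuming you have collected the brand name as it appears on each platform. The platforms, names, and `naming_variants` helper are hypothetical.

```python
import re
from collections import defaultdict


def naming_variants(names_by_platform: dict[str, str]) -> dict[str, list[str]]:
    """Group platforms by how they spell the brand name.

    Names are compared after lowercasing and collapsing whitespace, so only
    substantive differences (legal suffixes, merged words, abbreviations)
    produce separate variants. More than one key means inconsistent naming.
    """
    variants: dict[str, list[str]] = defaultdict(list)
    for platform, name in names_by_platform.items():
        key = re.sub(r"\s+", " ", name.strip().lower())
        variants[key].append(platform)
    return dict(variants)


# Hypothetical listings for one brand.
listings = {
    "website": "Acme Analytics",
    "linkedin": "Acme Analytics Inc.",
    "g2": "ACME  analytics",
    "crunchbase": "AcmeAnalytics",
}
for variant, platforms in naming_variants(listings).items():
    print(f"{variant!r}: {platforms}")
```

Here the audit finds three variants across four listings. The website and G2 agree after normalization, but the LinkedIn and Crunchbase spellings each differ.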

Part 3: Benchmark #1 — Appearance Rates Across Generative Engines

Across the 12,000+ queries, how readily brands appeared varied sharply by engine:

- Perplexity: highest inclusion rate
- Google SGE: highly selective, low inclusion
- ChatGPT Search: strong preference for structured, authoritative sources
- Brave Summaries: citation-heavy, easy to appear if factual
- Bing Copilot: balanced but inconsistent
- Claude Search: very high bar for factual trust
- You.com: diverse but shallow coverage

Early winners: brands with crystal-clear entity structures. Early losers: brands with ambiguous descriptions or multi-product confusion.
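Per-engine appearance rates come from simple counting over logged answers. This is a minimal sketch, assuming each sampled answer is stored with its engine and the set of brands it mentions. The `Observation` record, the engine keys, and the brand names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One sampled generative answer (hypothetical logging format)."""
    engine: str
    query: str
    cited_brands: frozenset[str]


def appearance_rate_by_engine(observations: list[Observation], brand: str) -> dict[str, float]:
    """Fraction of sampled answers, per engine, that mention or cite `brand`."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for obs in observations:
        totals[obs.engine] += 1
        if brand in obs.cited_brands:
            hits[obs.engine] += 1
    return {engine: hits[engine] / totals[engine] for engine in totals}


observations = [
    Observation("perplexity", "best crm for startups", frozenset({"Acme", "Globex"})),
    Observation("perplexity", "acme vs globex", frozenset({"Acme"})),
    Observation("google_sge", "best crm for startups", frozenset({"Globex"})),
    Observation("google_sge", "acme vs globex", frozenset({"Acme", "Globex"})),
    Observation("google_sge", "crm pricing", frozenset()),
]
rates = appearance_rate_by_engine(observations, "Acme")
print(rates)
```

With these five sample answers, Acme appears in both Perplexity answers and in one of the three Google SGE answers.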

Part 4: Benchmark #2 — Answer Share Percentiles

Answer share measures how often a brand appears in generative answers compared to competitors.
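The report does not spell out the exact formula, so the sketch below assumes one plausible definition. Among answers in a category that name at least one tracked brand, answer share is the fraction that name the brand in question. The brand names and the `answer_share` helper are illustrative.

```python
def answer_share(answers: list[set[str]], brand: str, competitors: set[str]) -> float:
    """Share of competitive answers in a category that include `brand`.

    An answer counts as competitive if it names at least one tracked brand
    (the brand itself or any competitor). Answers naming none are ignored.
    """
    tracked = competitors | {brand}
    competitive = [a for a in answers if a & tracked]
    if not competitive:
        return 0.0
    return sum(brand in a for a in competitive) / len(competitive)


category_answers = [
    {"Acme", "Globex"},
    {"Globex"},
    {"Acme"},
    set(),  # an answer that named no tracked brand
    {"Initech", "Acme"},
]
share = answer_share(category_answers, "Acme", {"Globex", "Initech"})
print(f"{share:.0%}")
```

Here Acme appears in three of the four answers that name any tracked brand, an answer share of 75%.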

Across 100+ brands:

- ~15% had answer share above 60% in their category
- ~35% had 20–60%
- ~50% had less than 20%

The most important insight:

SEO strength did not correlate strongly with answer share.

Entity clarity did.

Part 5: Benchmark #3 — Citation Stability Over Time

We tracked recurring queries weekly.
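One way to turn weekly tracking into a stability score works in two steps:

- For each recurring query, record whether the brand was included that week.
- Summarize the series by its inclusion rate and by how often inclusion flipped from one week to the next.

Both summary numbers are an assumed scoring, not a formula stated in this report. `citation_stability` is a hypothetical helper.

```python
def citation_stability(weekly_inclusion: list[bool]) -> tuple[float, int]:
    """Return (inclusion rate, number of week-over-week flips) for one recurring query.

    A stable brand shows a high inclusion rate with few flips; a brand that
    fluctuates shows many flips.
    """
    if not weekly_inclusion:
        raise ValueError("need at least one week of samples")
    rate = sum(weekly_inclusion) / len(weekly_inclusion)
    # Count adjacent weeks where inclusion changed state.
    flips = sum(prev != cur for prev, cur in zip(weekly_inclusion, weekly_inclusion[1:]))
    return rate, flips


stable = [True] * 8
fluctuating = [True, False, True, False, True, True, False, True]
print(citation_stability(stable))
print(citation_stability(fluctuating))
```

The always-included series scores (1.0, 0). The fluctuating series is included in five weeks out of eight (0.625) but changes state six times.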

The highest-performing brands showed:

- stable inclusion week after week
- correct descriptions
- increasing accuracy over time

Mid-tier brands showed:

- weekly fluctuation
- intermittent presence
- partial misinterpretation

Low-tier brands showed:

- no improvement
- incorrect summaries
- inconsistent facts
- engines replacing them with competitors

Generative engines “learn” stable brands and ignore unstable ones.

Part 6: Benchmark #4 — Interpretation Accuracy (Hallucination Risk)

We tested how often engines described a brand incorrectly.
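Checking interpretation accuracy comes down to comparing what an engine says with a brand's canonical facts. The sketch below rests on three assumptions:

- The engine's claims have already been extracted into the same field names as a canonical fact sheet.
- The fields and the `interpretation_accuracy` helper are hypothetical.
- Exact matching after whitespace and case normalization is a simplification.

```python
from typing import Optional


def interpretation_accuracy(
    canonical: dict[str, str], stated: dict[str, str]
) -> tuple[Optional[float], list[str]]:
    """Compare facts an engine stated against the brand's canonical facts.

    Returns (accuracy over checkable fields, list of fields that disagree).
    Fields the canonical sheet lacks cannot be verified here and are skipped;
    accuracy is None when nothing was checkable.
    """
    def norm(value: str) -> str:
        # Lowercase and collapse runs of whitespace before comparing.
        return " ".join(value.lower().split())

    checkable = [field for field in stated if field in canonical]
    if not checkable:
        return None, []
    wrong = [field for field in checkable if norm(stated[field]) != norm(canonical[field])]
    return (len(checkable) - len(wrong)) / len(checkable), wrong


canonical = {"pricing": "$49/month", "category": "CRM software", "founded": "2016"}
stated = {"pricing": "$29/month", "category": "crm  software", "founded": "2016"}
print(interpretation_accuracy(canonical, stated))
```

In this example the engine gets the category and founding year right but quotes outdated pricing. Accuracy is therefore two out of three, and `pricing` is flagged.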

Across 100+ brands:

- ~20% had near-perfect accuracy
- ~50% had mild factual drift
- ~30% suffered major hallucinations

Hallucinations included:

- wrong features
- outdated pricing
- nonexistent product claims
- mixed-up competitors
- entirely incorrect positioning
- attributing features of a different brand

Brands with strong canonical fact pages had dramatically fewer hallucinations.
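The report does not say how high performers built their fact pages. One widely used way to make such a page machine-readable is schema.org `Organization` markup embedded as JSON-LD. The sketch below generates that markup from a small fact sheet.

- The brand, URLs, and `organization_jsonld` helper are placeholders.
- The report does not establish that generative engines read this markup directly.

```python
import json


def organization_jsonld(facts: dict) -> str:
    """Render core brand facts as schema.org Organization markup in JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": facts["name"],
        "url": facts["url"],
        "description": facts["description"],
        "foundingDate": facts["founding_date"],
        # sameAs declares the same entity's profiles on other platforms.
        "sameAs": facts["profiles"],
    }
    return json.dumps(data, indent=2)


# Placeholder facts for a hypothetical brand.
facts = {
    "name": "Acme Analytics",
    "url": "https://www.example.com",
    "description": "Acme Analytics is a CRM platform for early-stage B2B startups.",
    "founding_date": "2016",
    "profiles": [
        "https://profiles.example.org/acme-analytics",
        "https://directory.example.net/acme-analytics",
    ],
}
snippet = organization_jsonld(facts)
print(f'<script type="application/ld+json">\n{snippet}\n</script>')
```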

Part 7: Benchmark #5 — Multi-Modal Recognition

We tested multi-modal queries using:

- product images
- screenshots
- UI layouts
- videos
- charts

Results:

- only ~12–18% of brands were reliably recognized via screenshots
- only ~15–20% were recognized via product images
- <10% were recognized via video frames
- ~50% had branding that was “visually ambiguous”
- ~70% had inconsistent or low-quality visual documentation

Multi-modal GEO is currently the biggest gap across all industries.

Part 8: Benchmark #6 — Entity Confidence Scores

Entity confidence indicates how certain the model is about:

- what a brand is
- what it does
- who it serves
- what products belong to it

Across 100+ brands:

- ~25% had high entity confidence
- ~40% had moderate entity confidence
- ~35% had low or conflicting profiles

Entity confusion is one of the top reasons brands fail in AI summaries.

Part 9: Benchmark #7 — First-Source Content Weighting

We tested how often engines cited brands with original data (e.g., research, surveys, studies).

Brands with first-source content had:

- ~4× higher answer share
- ~3× higher citation stability
- ~2× better interpretation accuracy

The engines clearly prefer brands that produce:

- original studies
- benchmarks
- statistical reports
- proprietary insights

AI engines prioritize data creators, not data repeaters.

Part 10: Benchmark #8 — Industry-Level Differences

Some industries gained visibility quickly; others struggled.

Highest GEO visibility industries

- SaaS
- e-commerce (high-structure categories)
- finance (regulated + structured content)
- health information sites (with clear entity data)

Lowest GEO visibility industries

- hospitality
- travel
- home services
- local businesses
- creative services
- professional service firms with vague positioning

Industries with consistent terminology performed far better than industries with ambiguous or variable descriptions.

Part 11: The 10 Biggest GEO Predictors Identified Across 100+ Brands

Across all tests, the following factors correlated most strongly with high GEO performance:

1. Canonical definitions

Engines need single, stable definitions to avoid confusion.

2. Entity clarity

Clear category assignment boosted inclusion dramatically.

3. Structured content

Engines included brands with bullet-based explanations far more often.

4. First-source data

Engines trust brands that produce their own facts.

5. Recency

Freshly updated content had higher inclusion probabilities.

6. Multi-modal consistency

Brands with stable screenshots and visuals were correctly recognized more often.

7. Trust signals

Verified authorship, provenance, and authoritative links impacted inclusion.

8. Cross-web consistency

Engines discard brands with conflicting information across platforms.

9. Comparison-readiness

AI agents prefer brands that make comparison easy.

10. Correction workflows

Brands that submitted AI correction requests improved faster than passive brands.

Part 12: GEO Benchmarks — What “Good” Looks Like in 2025

Here are the early norms for high performers:

Appearance Rate

40–65% across engines

Answer Share

50–70% in their category

Citation Stability

Consistent weekly inclusion

Interpretation Accuracy

90% factual accuracy across engines

Entity Confidence

High or very high

Multi-Modal Recognition

- Images → reliable
- Screenshots → partial
- Videos → emerging

Brand Drift Score

Minimal inconsistencies

Freshness Score

Content updated in the last 90 days

Structured AI Readability

High

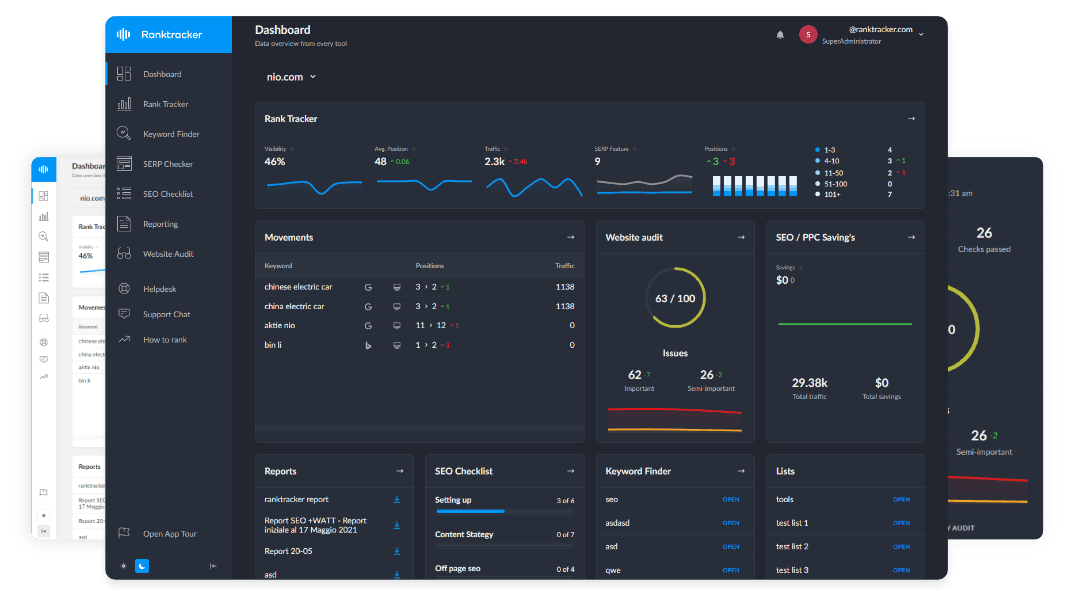

The All-in-One Platform for Effective SEO

Behind every successful business is a strong SEO campaign. But with countless optimization tools and techniques out there to choose from, it can be hard to know where to start. Well, fear no more, cause I've got just the thing to help. Presenting the Ranktracker all-in-one platform for effective SEO

We have finally opened registration to Ranktracker absolutely free!

Create a free accountOr Sign in using your credentials

These are the early “top percentile” performance markers, and they are likely to harden into industry standards by 2026–2027.
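To track a brand against these norms over time, the numeric targets can be encoded as pass/fail checks. This is a minimal sketch under stated assumptions:

- Metrics are stored as fractions between 0 and 1.
- The lower end of each range is treated as the pass threshold.
- Qualitative targets such as entity confidence and multi-modal recognition are omitted.
- The `Scorecard` type and `meets_benchmarks` helper are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Scorecard:
    """Measured GEO metrics for one brand (fractions between 0 and 1)."""
    appearance_rate: float
    answer_share: float
    accuracy: float
    last_updated: date


# Lower bounds from the high-performer norms; using the low end of each
# range as the pass threshold is an assumption.
THRESHOLDS = {"appearance_rate": 0.40, "answer_share": 0.50, "accuracy": 0.90}
MAX_CONTENT_AGE_DAYS = 90


def meets_benchmarks(card: Scorecard, today: date) -> dict[str, bool]:
    """Check a scorecard against the early 'good' GEO norms."""
    results = {name: getattr(card, name) >= floor for name, floor in THRESHOLDS.items()}
    results["freshness"] = (today - card.last_updated).days <= MAX_CONTENT_AGE_DAYS
    return results


card = Scorecard(appearance_rate=0.52, answer_share=0.45, accuracy=0.93, last_updated=date(2025, 1, 10))
print(meets_benchmarks(card, today=date(2025, 3, 1)))
```

In this example the brand clears appearance rate, accuracy, and freshness (last updated 50 days earlier) but falls short on answer share.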

Part 13: Strategic Insights From Benchmarking 100+ Brands

Across all data, seven overarching patterns emerged.

1. GEO rewards clarity more than scale

Smaller brands with crystal-clear definitions outperformed massive websites with vague identities.

2. GEO is more sensitive to errors than SEO

One contradictory fact can tank your entity confidence score.

3. Engines prefer tight content clusters

Fully mapped topic clusters consistently improved answer share.

4. First-source content is the new “link building”

AI engines want the origin of data, not the repetition of it.

5. Multi-modal assets are now ranking factors

Screenshots and product visuals influence inclusion.

6. Generative visibility is not correlated with Domain Rating

Some DR 20 brands outperformed DR 80 brands due to better structure.

7. Correction workflows produce measurable gains

Brands that actively fixed AI inaccuracies saw:

- fewer hallucinations
- more accurate summaries
- greater inclusion stability

Generative engines learn from corrections — quickly.

Conclusion: Early GEO Benchmarks Reveal the Future of Visibility

The data across 100+ brands makes one truth unavoidable:

Generative visibility is earned through clarity, structure, trust, recency, and first-source expertise — not traditional SEO dominance.

Brands that perform well in generative engines:

- define themselves clearly
- maintain accurate facts
- use structured content
- publish original data
- preserve cross-web consistency
- update frequently
- correct AI errors early
- offer multi-modal clarity

The brands that do this now will dominate the answer layer long before GEO becomes mainstream.

Those that don’t may never see generative visibility again — because AI agents will form early, persistent assumptions that become hard to correct later.

GEO benchmarks across 100+ brands show unmistakably:

Optimization has shifted from ranking pages to training models.

And the companies that understand this shift first will own the AI-driven discovery landscape of the next decade.