Intro

Search engines reward clarity and originality. However, some websites, despite knowing this, sabotage themselves through content duplication.

Believe it or not, even small overlaps can weaken performance and delay visibility.

So if your page ever fails to appear where it should, duplication may be interfering with how search engines process and prioritize your site’s content.

As a content creator, it's your job to learn the right strategies to protect your site rankings, improve visibility, and give users a better experience.

Don't worry, it's not that hard. Once you understand the risks and apply proven fixes, your content works for you, not against you, in building authority and attracting consistent traffic.

How Can Duplicate Content Harm the SEO Rankings?

Here are some of the most common ways duplicate content can hurt your SEO rankings.

1. Crawling and Indexing Issues

Search engines crawl through websites to discover and index content.

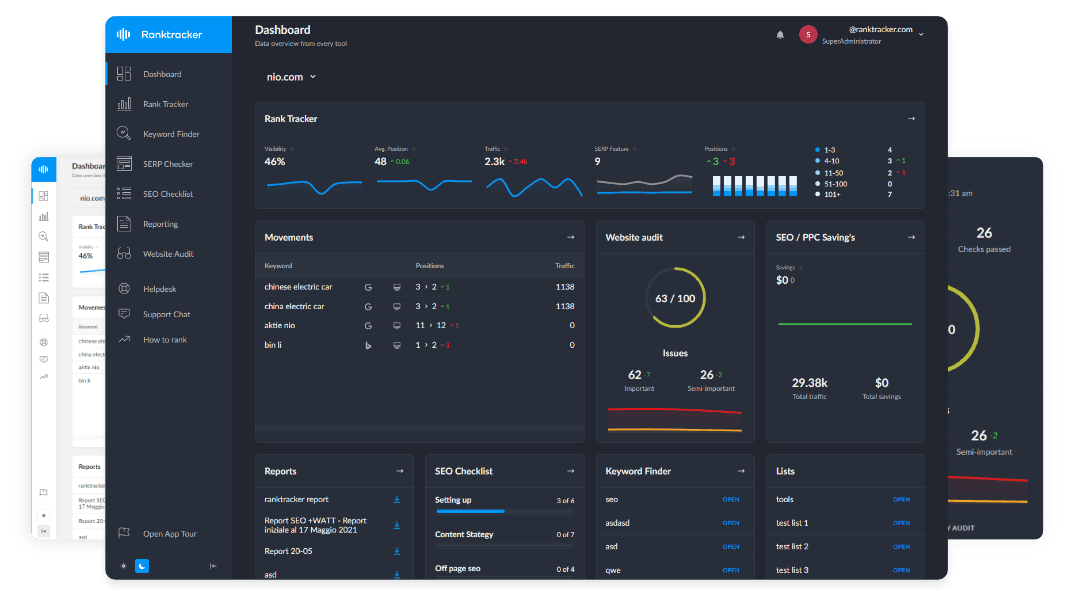

However, if your site has multiple duplicate pages, the crawler wastes its crawl budget by fetching the same content again and again.

Another major problem is that when crawlers encounter multiple versions of the same content, they struggle to decide which page deserves priority.

As a result, the relevant page sometimes doesn't get indexed. New and valuable content may also wait longer to be crawled, because the crawler stays busy sorting through your duplicate pages.

This hurts visibility and delays new content from appearing in search results.

2. Poor Search Rankings

Duplicate content makes it hard for search engines to evaluate which version deserves priority.

As a result, instead of consolidating authority, ranking signals spread thinly across similar pages.

In simpler words, neither page performs well, and overall visibility suffers.

Remember, both search engines and readers prioritize originality. If you keep publishing duplicated or plagiarized work, you will struggle to earn credibility or authority.

3. Reduced Organic Traffic

Duplicate content also reduces your content’s search visibility.

Wondering how?

When two similar pages compete, organic traffic is split between them, so neither can rank strongly despite all your efforts.

Even when one of your pages is clearly better, if both address the same query, users may land on the weaker version instead of the most valuable page.

All in all, organic traffic is what gets a website recognized and brings in visitors, and duplication interrupts that path.

4. Negative User Experience

Duplicate content not only lowers your site's ranking but also frustrates visitors and reduces trust. Instead of clarity, users encounter redundancy and confusion.

Think about it yourself: if you go through multiple pages to collect valuable insights about a topic, only to realize that they all show identical information, won't it frustrate you?

Of course it will.

You will probably leave the site without giving the content a second glance.

Hence, duplication increases the bounce rate, and that kind of poor engagement can weaken the site's overall performance in search.

Tips To Fix Content Duplication for Better SEO Rankings

If you don't want to fall into the trap of harmful duplicate content, here are some practical tips you can follow to fix duplication issues and improve your page visibility, authority, and ranking.

1. Use 301 Redirects

A 301 redirect is a permanent redirect. It sends users and search engines from one URL to another and signals which version of a page should be prioritized.

This consolidates authority and improves rankings. It also ensures that traffic flows to one optimized page.

For instance, if you have both HTTP and HTTPS versions of the website, you can use the redirect to send users and search engines to the secure version.

Similarly, when you merge two overlapping blog posts into one guide, redirecting the old URLs to the new one prevents diluted signals.

301 redirects can also stop users from landing on outdated or less useful pages.

The result is a seamless experience, less internal competition, and stronger SEO.

Permanent redirects ensure one authoritative version dominates while maintaining user trust and consistent search visibility.
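To make the two cases above concrete, here is a minimal Python sketch of the decision behind a 301 redirect. It assumes a made-up site on `example.com` and a hypothetical `MERGED_PATHS` table of retired blog URLs; in practice you would usually configure the same rules in your web server or CMS rather than write them by hand.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Hypothetical map of retired blog URLs to the merged guide that replaces them.
MERGED_PATHS = {
    "/blog/seo-basics-part-1": "/blog/seo-guide",
    "/blog/seo-basics-part-2": "/blog/seo-guide",
}


def redirect_target(url: str) -> Optional[str]:
    """Return the URL to 301-redirect to, or None if `url` is already the preferred version."""
    parts = urlsplit(url)
    # Retired paths point at their replacement; every other path stays as it is.
    path = MERGED_PATHS.get(parts.path, parts.path)
    # Always prefer the secure (HTTPS) version of the page.
    target = urlunsplit(("https", parts.netloc, path, parts.query, parts.fragment))
    return None if target == url else target
```

When `redirect_target` returns a URL, the server would answer with status `301` and a `Location` header set to that URL. When it returns `None`, the page is served normally.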

2. Implement Canonical Tags

Canonical tags identify the main version of a page when multiple copies of the same content exist.

This method is most useful for sites with multiple URLs pointing to the same content, such as e-commerce product pages with tracking parameters.

Wondering how it works?

Basically, adding a canonical tag tells search engines which URL you want treated as authoritative. Search engines treat the tag as a strong hint rather than a command, but it usually leads them to consolidate signals on that URL, which helps prevent signal dilution.

Unlike redirects, duplicates remain accessible to users, but only the main version gains SEO value. This improves crawl efficiency, reduces confusion, and keeps indexing clean.
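As a rough illustration, the sketch below builds a canonical tag for a product URL by dropping tracking parameters. The `TRACKING_PARAMS` set and the shop URL are assumptions for the example, so adjust them to whatever your own analytics setup appends.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of tracking parameters; not an exhaustive or official list.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}


def canonical_link_tag(url: str) -> str:
    """Build a <link rel="canonical"> tag that points at `url` without tracking parameters."""
    parts = urlsplit(url)
    # Keep only the query parameters that actually identify the page.
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in TRACKING_PARAMS
    ]
    clean = urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))
    # Escape the URL so it is safe inside an HTML attribute.
    return f'<link rel="canonical" href="{escape(clean, quote=True)}">'
```

The returned tag goes in the head section of every variant of the page, so each tracked URL points back to the same clean address.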

3. Apply Meta Robots Noindex Tag

You can also use the meta robots noindex tag to prevent search engines from indexing certain pages.

When the crawler fetches the page and sees the noindex tag, it leaves that page out of the index. Keep in mind that the page still has to be crawled for the tag to be seen, so it must not be blocked in robots.txt. The payoff is that only the right, authoritative pages are ranked.

The best part here is that, though these pages are not indexed, users can still access them.

Noindex tags are easy to apply in a page’s head section and serve as a simple, effective safeguard for preserving clarity, authority, and ranking strength.
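The tag itself is a single line, `<meta name="robots" content="noindex">`. If you want to confirm that it really ended up on the pages you meant to exclude, a small check like the one below can help. It is only a sketch built on Python's standard `html.parser`; the `has_noindex` helper is a name made up for this example.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Records whether a page carries a robots meta tag with the noindex directive."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() == "robots":
            # The content attribute is a comma-separated list of directives.
            directives = {d.strip().lower() for d in attr.get("content", "").split(",")}
            if "noindex" in directives:
                self.noindex = True


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains <meta name="robots"> with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex
```

Running `has_noindex` over the HTML of your thin or duplicate pages is a quick way to spot any that are still open to indexing.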

4. Consolidate Multiple URLs

Do you know the main reason duplicate pages appear on your site, even when you haven't created them manually?

This usually happens when a single page generates multiple URLs through session IDs, filters, or parameters.

Consolidating these variations into one version prevents internal competition and strengthens SEO authority.

This approach saves crawl budget, improves clarity, and enhances user navigation.

Consolidation is especially beneficial for e-commerce websites, which have to manage complex URL structures.
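Before you can consolidate, you need to know which URLs are variations of the same page. The sketch below groups a list of crawled URLs by a normalized form. The `IGNORED_PARAMS` names and the trailing-slash rule are assumptions for illustration, so check how your own site builds its URLs before reusing them.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed parameters that do not change what the page shows (hypothetical names).
IGNORED_PARAMS = {"sessionid", "sid", "ref"}


def normalize(url: str) -> str:
    """Reduce a URL to the form that identifies its content."""
    parts = urlsplit(url)
    # Drop ignored parameters and sort the rest so parameter order does not matter.
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key.lower() not in IGNORED_PARAMS
    )
    # Assumption: a trailing slash does not change the page.
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(kept), ""))


def group_duplicates(urls):
    """Map each normalized URL to the crawled URLs that collapse into it (groups of 2+ only)."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}
```

Each group it returns is a candidate for one preferred URL, with the other members redirected or canonicalized to it.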

5. Audit and Merge Similar Blog Posts

Content audits reveal overlapping blog posts targeting the same topics. Instead of keeping duplicates, merging them into one comprehensive guide strengthens SEO authority.

This reduces internal competition, improves ranking signals, and creates a more valuable resource for readers. Merging also prevents backlink dilution and consolidates trust.

Regular audits help identify weak or outdated posts that can be updated or redirected.
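A content audit can start with something as simple as comparing the vocabulary of your posts. The following sketch scores every pair of posts by word overlap (Jaccard similarity) and lists the pairs above a threshold. The `0.5` threshold is an arbitrary starting point, not a standard, and a high score only means the pair deserves a manual look.

```python
import re
from itertools import combinations


def words(text: str) -> set:
    """Lowercased set of the words in a post."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: set, b: set) -> float:
    """Shared words divided by all words used across both posts."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def overlapping_posts(posts: dict, threshold: float = 0.5) -> list:
    """Return (slug, slug, score) for post pairs whose word overlap meets the threshold."""
    vocab = {slug: words(body) for slug, body in posts.items()}
    pairs = []
    for (slug_a, words_a), (slug_b, words_b) in combinations(vocab.items(), 2):
        score = jaccard(words_a, words_b)
        if score >= threshold:
            pairs.append((slug_a, slug_b, round(score, 2)))
    # Highest overlap first, so the strongest merge candidates come out on top.
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)
```

Here `posts` is assumed to be a dictionary that maps each post's slug to its full text.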

Consistent originality ensures every page delivers unique value, builds credibility, and positions your website as a trusted source of expertise.

6. Use a Plagiarism Checker

One of the biggest risks of duplication is publishing content that unintentionally overlaps with existing material.

Even if it’s not deliberate, search engines still see it as duplicate content, which harms your rankings.

Relying solely on manual checks isn’t enough, because small copied fragments or paraphrased ideas can easily slip through.

This is where a free plagiarism checker serves as a safeguard. It scans your text and compares it against a large index of online sources to flag even small traces of duplication. Running your draft through one before publishing gives you far more confidence that your work is original and truly represents your own ideas.
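To get a feel for what such a comparison involves, here is a simplified sketch that measures how much of a draft reappears, word for word, in one known source. It uses overlapping word sequences (often called shingles). This is an illustration of the general idea only, not a description of how any particular plagiarism checker works, and real tools compare against far more than a single source.

```python
import re


def shingles(text: str, n: int = 5) -> set:
    """All runs of n consecutive words in the text, lowercased."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def copied_fraction(draft: str, source: str, n: int = 5) -> float:
    """Share of the draft's n-word runs that also appear in the source (0.0 to 1.0)."""
    draft_shingles = shingles(draft, n)
    if not draft_shingles:
        # The draft is shorter than n words, so there is nothing to compare.
        return 0.0
    return len(draft_shingles & shingles(source, n)) / len(draft_shingles)
```

A value close to `1.0` means most of the draft matches the source verbatim. Paraphrased passages will score low with this method, which is one reason dedicated tools are still worth using.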

Conclusion

Strong SEO depends on control, and duplicate content is simply a signal that power is slipping. Search engines notice when your site lacks focus, and so do your visitors. Treat every page as a unique asset that speaks for your brand. When your content is distinct, it not only performs better in rankings but also communicates authority and trust. Think of duplication as wasted potential, valuable space that could instead elevate your site. Protect that space, keep it original, and your website becomes a reliable source worth both finding and following.