Intro

Generative engines do not discover, read, or interpret your website the same way traditional search crawlers do.

Googlebot, Bingbot, and other classic SEO-era crawlers focused on:

- URLs
- links
- HTML
- metadata
- indexability
- canonicalization

Generative engines, however, focus on:

- content visibility
- structural clarity
- render completeness
- JavaScript compatibility
- chunk segmentation
- semantic boundaries
- entity detection
- definition extraction

If LLM-based crawlers cannot fully crawl and render your content, your information becomes:

- partially ingested
- incorrectly segmented
- incompletely embedded
- misclassified
- excluded from summaries

This article explains the new rules for crawlability and rendering in the era of generative engine optimization (GEO), and how to prepare your site for AI-driven ingestion.

Part 1: Why Crawlability and Rendering Matter More for LLMs Than for SEO

Traditional SEO cared about:

- “Can Google access the HTML?”
- “Can the content load?”
- “Can search engines index the page?”

Generative engines require significantly more:

- fully rendered page content
- unobstructed DOM
- predictable structure
- stable semantic layout
- extractable paragraphs
- server-accessible text
- low-noise HTML
- unambiguous entities

The difference is simple:

Search engines index pages. LLMs interpret meaning.

If the page partially renders, the crawler gets a fragment of meaning. If the crawler gets a fragment of meaning, AI produces incorrect or incomplete summaries.

Crawlability determines access. Rendering determines comprehension. Together, they determine generative visibility.

Part 2: How Generative Models Crawl Websites

Generative crawlers use a multi-stage pipeline:

Stage 1: Fetch

The engine attempts to retrieve:

- HTML
- CSS
- JS
- metadata

If the response is blocked, delayed, or conditional, the page fails ingestion.

Stage 2: Render

The engine simulates a browser environment to produce a complete DOM.

If the page requires:

- multiple JS events
- user interaction
- hydration
- complex client-side rendering

…the crawler may miss essential content.

Stage 3: Extract

Post-render, the engine extracts:

- paragraphs
- headings
- lists
- FAQ blocks
- schema
- semantic boundaries

Extraction determines chunk quality.

Stage 4: Segment

Text is split into smaller, semantically coherent blocks for embedding.

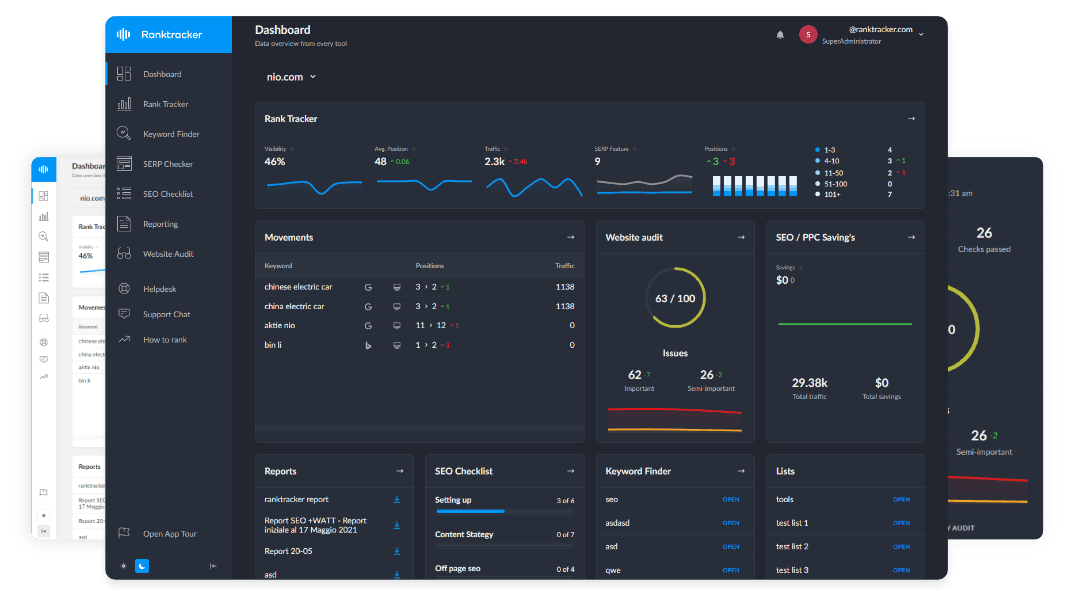

Poor rendering creates malformed segments.

Stage 5: Embed

The model transforms each chunk into a vector for:

- classification
- clustering
- generative reasoning

If chunks are incomplete, embeddings become weak.
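
The Extract and Segment stages described above can be illustrated with a small sketch. This is not how any particular generative engine works internally; it only assumes that a crawler groups visible text under the nearest preceding heading. The names `HeadingChunker` and `segment` are hypothetical and exist only for this example.

```python
from html.parser import HTMLParser

HEADINGS = {"h1", "h2", "h3", "h4"}
SKIPPED = {"script", "style"}  # non-content elements whose text is ignored


class HeadingChunker(HTMLParser):
    """Group visible text under the most recent heading (illustrative only)."""

    def __init__(self):
        super().__init__()
        self.chunks = []  # each chunk: {"heading": str, "text": str}
        self._in_heading = False
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in SKIPPED:
            self._skip_depth += 1
        elif tag in HEADINGS:
            # A new heading opens a new chunk.
            self._in_heading = True
            self.chunks.append({"heading": "", "text": ""})

    def handle_endtag(self, tag):
        if tag in SKIPPED and self._skip_depth:
            self._skip_depth -= 1
        elif tag in HEADINGS:
            self._in_heading = False

    def handle_data(self, data):
        text = " ".join(data.split())
        if not text or self._skip_depth:
            return
        if not self.chunks:
            # Text that appears before any heading gets its own chunk.
            self.chunks.append({"heading": "", "text": ""})
        key = "heading" if self._in_heading else "text"
        current = self.chunks[-1]
        current[key] = (current[key] + " " + text).strip()


def segment(html):
    """Return heading-delimited chunks from an HTML string."""
    parser = HeadingChunker()
    parser.feed(html)
    parser.close()
    return parser.chunks
```

If a heading or paragraph only appears after JavaScript runs, it never reaches a parser like this one, so the chunk it belongs to is either missing or merged into a neighbor.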

Part 3: Crawlability Requirements for Generative Models

Generative models have stricter crawl requirements than search engines ever did. Here are the essential technical rules.

Requirement 1: No Content Hidden Behind JavaScript

If your primary content loads via:

- client-side rendering (CSR)
- heavy JS injection
- post-load hydration
- frameworks that require user interaction

AI crawlers will see nothing or only partial fragments.

Use:

- SSR (server-side rendering)
- SSG (static generation)
- hydration after content load

Never rely on client-side rendering for primary content.

Requirement 2: Avoid Infinite Scroll or Load-on-Scroll Content

Generative crawlers do not simulate:

- scrolling
- clicking
- UI interactions

If your content appears only after scrolling, AI will miss it.

Requirement 3: Eliminate Render-Blocking Scripts

Heavy scripts can cause:

- timeouts
- partial DOM loads
- incomplete render trees

Generative bots will treat pages as partially available.

Requirement 4: Make All Critical Content Visible Without Interaction

Avoid:

- accordions
- tabs
- “click to reveal” text
- hover-text blocks
- JS-triggered FAQ sections

AI crawlers do not interact with UX components.

Critical content should be in the initial DOM.
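
One rough way to audit this is to look for text that sits in the markup but is hidden by default. The sketch below is an assumption-laden heuristic: it only catches the `hidden` attribute and inline `display:none` styles, it does not see hiding done through CSS classes or scripts, and it expects reasonably well-formed markup with closing tags. `HiddenTextFinder` and `find_hidden_text` are hypothetical names.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not affect nesting.
VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
        "link", "meta", "source", "track", "wbr"}


class HiddenTextFinder(HTMLParser):
    """Collect text nested inside elements that are hidden inline."""

    def __init__(self):
        super().__init__()
        self._stack = []  # one bool per open element: is it hidden itself?
        self.hidden_text = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID:
            return
        attributes = dict(attrs)
        style = (attributes.get("style") or "").replace(" ", "").lower()
        self._stack.append("hidden" in attributes or "display:none" in style)

    def handle_endtag(self, tag):
        if tag not in VOID and self._stack:
            self._stack.pop()

    def handle_data(self, data):
        text = " ".join(data.split())
        # Text counts as hidden if any open ancestor is hidden.
        if text and any(self._stack):
            self.hidden_text.append(text)


def find_hidden_text(html):
    """Return text fragments that are hidden by inline markup."""
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_text
```

Anything this returns is worth reviewing: if it is part of the page's core meaning, consider showing it by default.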

Requirement 5: Use Clean, Minimal HTML

Generative rendering systems struggle with:

- div-heavy structures
- nested wrapper components
- excessive aria attributes
- complex shadow DOMs

Simpler HTML leads to cleaner chunks and better entity detection.

Requirement 6: Ensure NoScript Fallbacks for JS-Heavy Elements

If parts of your content require JS, provide a <noscript> fallback.

This ensures every generative engine can access core meaning.

Requirement 7: Provide Direct HTML Access to FAQs, Lists, and Definitions

AI engines prioritize:

- Q&A blocks
- bullet points
- steps
- micro-definitions

These must be visible in raw HTML, not generated via JS.

Part 4: Rendering Requirements for Generative Models

Rendering quality determines how much meaning AI can extract.

Rule 1: Render Full Content Before User Interaction

For LLM crawlers, your content must render:

- instantly
- fully
- without user input

Use:

- SSR
- prerendering
- static HTML snapshots
- hybrid rendering with fallback

Do not require user actions to reveal meaning.

Rule 2: Provide Render-Stable Layouts

AI engines fail when elements shift or load unpredictably.

SSR + hydration is ideal. CSR without fallback is generative death.

Rule 3: Keep Render Depth Shallow

Deep DOM nesting increases chunk confusion.

Ideal depth: 5–12 levels, not 30+.
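
To see where a page stands, you can measure its maximum nesting depth from the served HTML. The following is a minimal sketch that assumes well-formed markup (every non-void element is closed); `DepthMeter` and `max_dom_depth` are hypothetical names, and the depth targets above remain the article's guideline rather than anything the code enforces.

```python
from html.parser import HTMLParser

# Void elements have no closing tag and do not add a nesting level.
VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
        "link", "meta", "source", "track", "wbr"}


class DepthMeter(HTMLParser):
    """Track the deepest level of element nesting seen while parsing."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.max_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in VOID:
            return
        self.depth += 1
        self.max_depth = max(self.max_depth, self.depth)

    def handle_endtag(self, tag):
        if tag not in VOID and self.depth > 0:
            self.depth -= 1


def max_dom_depth(html):
    """Return the maximum element nesting depth of an HTML string."""
    meter = DepthMeter()
    meter.feed(html)
    meter.close()
    return meter.max_depth
```

Running this on the raw server response and again on a rendered snapshot can also show how much extra wrapping a front-end framework adds.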

Rule 4: Avoid Shadow DOM and Web Components for Primary Text

Shadow DOM obscures content from crawlers.

Generative crawlers do not reliably penetrate custom elements.

Avoid frameworks that hide text.

Rule 5: Use Standard Semantic Elements

Use:

- <h1>–<h4>
- <p>
- <ul>
- <ol>
- <li>
- <section>
- <article>

AI models heavily rely on these for segmentation.
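
As a quick self-check, you can estimate how much of a page's markup uses the elements listed above. This ratio is an invented heuristic for illustration, not a metric any engine is known to compute; `TagCounter` and `semantic_ratio` are hypothetical names.

```python
from collections import Counter
from html.parser import HTMLParser

# The semantic elements recommended in the list above.
SEMANTIC = {"h1", "h2", "h3", "h4", "p", "ul", "ol", "li", "section", "article"}


class TagCounter(HTMLParser):
    """Count how often each element name is opened."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        self.counts[tag] += 1


def semantic_ratio(html):
    """Return the share of opened elements that are in SEMANTIC (0.0 to 1.0)."""
    counter = TagCounter()
    counter.feed(html)
    counter.close()
    total = sum(counter.counts.values())
    semantic = sum(n for tag, n in counter.counts.items() if tag in SEMANTIC)
    return semantic / total if total else 0.0
```

A low ratio on a content page usually means the text lives inside generic `<div>` and `<span>` wrappers, which gives a parser fewer structural cues to segment on.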

Rule 6: Ensure Schema Renders Server-Side

Schema rendered via JS is often:

- missed
- partially parsed
- inconsistently crawled

Put JSON-LD in server-rendered HTML.
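
To confirm that your schema really is in the server response, you can parse the raw HTML (before any JavaScript runs) and pull out the JSON-LD blocks. The sketch below is one way to do that with the standard library; `JsonLdExtractor` and `extract_json_ld` are hypothetical names, and malformed blocks are silently skipped here for brevity.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect parsed JSON-LD objects from <script type="application/ld+json">."""

    def __init__(self):
        super().__init__()
        self._in_json_ld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self._in_json_ld = False
            try:
                self.items.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # malformed JSON-LD is ignored in this sketch


def extract_json_ld(raw_html):
    """Return the JSON-LD objects found in a raw HTML string."""
    extractor = JsonLdExtractor()
    extractor.feed(raw_html)
    extractor.close()
    return extractor.items
```

If this returns an empty list for the raw response but your browser's rendered DOM contains schema, the markup is being injected client-side.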

Part 5: Site Architecture Rules for Generative Crawlability

Your site structure must help — not hinder — LLM ingestion.

1. Flat Architecture Beats Deep Architecture

LLM crawlers tend to traverse fewer levels of a site than SEO crawlers.

Use:

- shallow folder depth
- clean URLs
- logical top-level categories

Avoid burying important pages deep in the hierarchy.
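
One way to check how deep a page is buried is click depth: the minimum number of links needed to reach it from the home page. The sketch below runs a breadth-first search over an internal link map. The link map format (page path mapped to the paths it links to) and the function names are assumptions for this example; in practice the map would come from your own crawl.

```python
from collections import deque


def click_depths(links, start="/"):
    """Return {page: minimum number of clicks from start} via breadth-first search."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_deeper_than(depths, limit):
    """Return the pages whose click depth exceeds limit, sorted by path."""
    return sorted(page for page, depth in depths.items() if depth > limit)
```

Pages that never appear in the result of `click_depths` are unreachable through links at all, which is a separate and more serious problem.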

2. Every Key Page Must Be Discoverable Without JS

Navigation should be:

- plain HTML
- crawlable
- visible in raw source

JS navigation → partial discovery.
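
A simple way to see what a non-JS crawler can discover is to extract the `<a href>` links from the raw HTML. The sketch below keeps only same-host links and ignores anchors without a usable `href` (for example, elements wired up with `onclick`). `LinkExtractor` and `internal_links` are hypothetical names, and `example.com` in the usage note is a placeholder domain.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect same-host links that are present as plain <a href> in the HTML."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href or href.startswith(("#", "javascript:", "mailto:")):
            return  # nothing a plain-HTML crawler could follow
        absolute = urljoin(self.base_url, href)
        if urlparse(absolute).netloc == urlparse(self.base_url).netloc:
            self.links.add(absolute)


def internal_links(raw_html, base_url):
    """Return the set of internal URLs linked from raw_html."""
    extractor = LinkExtractor(base_url)
    extractor.feed(raw_html)
    extractor.close()
    return extractor.links
```

For instance, calling `internal_links(raw_html, "https://example.com/")` on your home page's raw source and comparing the result with your list of key pages shows which ones depend on script-driven navigation.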

3. Internal Linking Must Be Consistent and Frequent

Internal links help AI understand:

- entity relationships
- cluster membership
- category placement

Weak linking = weak clustering.

4. Eliminate Orphan Pages Entirely

Generative engines rarely crawl pages with no internal pathways.

Every page needs links from:

- parent cluster pages
- glossary
- related articles
- pillar content
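
Orphan pages can be found by comparing the full list of pages you publish (for example, from a sitemap) with the set of pages that receive at least one internal link. The sketch below assumes you already have both as plain Python data; `find_orphans` and the input shapes are hypothetical.

```python
def find_orphans(all_pages, links, home="/"):
    """Return pages that no other page links to, sorted by path.

    all_pages: iterable of page paths that exist on the site.
    links: dict mapping a page path to the paths it links to.
    home: entry point, which is allowed to have no inbound links.
    """
    linked = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a self-link does not make a page discoverable
    }
    return sorted(page for page in all_pages if page not in linked and page != home)
```

Each page this returns needs at least one inbound link from a relevant cluster, glossary, or pillar page.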

Part 6: Testing for Generative Crawlability

To verify your pages are generative-ready:

Test 1: Fetch and Render with Basic User Agents

Use cURL or minimal crawlers to check what loads.
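
If you prefer a script over the command line, the same check can be sketched with Python's standard library. The default user agent string below is a placeholder, not the identifier of any real AI crawler, and `build_request` and `fetch_raw_html` are hypothetical helper names.

```python
import urllib.request


def build_request(url, user_agent="curl/8.0"):
    """Create a GET request that identifies itself with a basic user agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch_raw_html(url, user_agent="curl/8.0", timeout=10):
    """Fetch a page without executing any JavaScript and return its HTML text."""
    request = build_request(url, user_agent)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

What `fetch_raw_html` returns is roughly what a crawler that does not render JavaScript receives, so any content missing here depends on client-side rendering.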

Test 2: Disable JS and Check for Core Content

If content disappears, the page is unreadable to generative engines.

Test 3: Use HTML Snapshots

Ensure everything important exists in raw HTML.
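
This check can be automated by listing the phrases a page must convey and confirming each one appears in the visible text of the raw HTML. The sketch below ignores anything inside `<script>` and `<style>`, so text that only exists as a JavaScript string counts as missing. `visible_text` and `missing_phrases` are hypothetical names, and matching is a plain case-insensitive substring test.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect text outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.parts = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def visible_text(raw_html):
    """Return whitespace-normalized text that exists without running JS."""
    extractor = _TextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    return " ".join(" ".join(extractor.parts).split())


def missing_phrases(raw_html, phrases):
    """Return the phrases that do not appear in the raw HTML's visible text."""
    text = visible_text(raw_html).lower()
    return [phrase for phrase in phrases if phrase.lower() not in text]
```

An empty result means every key phrase is available to a crawler that never executes scripts.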

Test 4: LLM “What’s on this page?” Test

Paste your URL into:

- ChatGPT
- Claude
- Gemini
- Perplexity

If the model:

- misreads the page
- misses content
- assumes meaning
- hallucinates sections

…your render is incomplete.

Test 5: Chunk Boundary Test

Ask an LLM:

“List the main sections from this URL.”

If it fails, your headings or HTML structure are unclear.

Part 7: The Crawlability + Rendering Blueprint (Copy/Paste)

Here is the final checklist for GEO technical readiness:

Crawlability

- No JS-required content
- SSR or static HTML used
- No infinite scroll
- Minimal scripts
- No interaction-required components
- Content visible in raw HTML
- No orphan pages

Rendering

- Full content loads instantly
- No layout shifts
- No shadow DOM for primary content
- Schema is server-rendered
- Semantic HTML structure
- Clean H1–H4 hierarchy
- Short paragraphs and extractable blocks

Architecture

- Shallow folder depth
- Crawlable HTML navigation
- Strong internal linking
- Clear entity clustering across site

This blueprint ensures generative engines can crawl, render, segment, and ingest your content accurately.

Conclusion: Crawlability and Rendering Are the Hidden Pillars of GEO

SEO taught us that crawlability = indexability. GEO teaches us that renderability = understandability.

If your site is not:

- fully crawlable
- fully renderable
- structurally clear
- consistently linked
- semantically organized
- JS-optional
- definition-forward

…generative engines cannot extract your meaning — and you lose visibility.

Crawlability gives AI access. Rendering gives AI comprehension. Together, they give you generative visibility.

In the GEO era, your site must not only load — it must load in a way AI can read.