Intro

Generative AI is changing how people discover brands. If your goal is a more substantial AI presence, you need to know what matters beyond classic rankings.

AI experiences are not a sideshow. In March 2025, 18% of Google searches surfaced an AI summary, so visibility inside the answer now shapes discovery. The best AI Search Visibility Platforms show where your brand stands in this new landscape.

You need to measure how often and how positively your brand appears in AI-generated answers across Google AI Overviews, ChatGPT, Perplexity, and Microsoft Copilot. With Google rolling out AI Overviews widely and those summaries driving link clicks, real traffic and brand exposure now flow through AI answers.

What “Brand Visibility” Means in Generative AI Search

In traditional SEO, visibility meant where you ranked and the traffic you won. In AI search, it means how your brand shows up inside the answer as a mention, citation, or recommended option.

Since AI summaries answer directly and cited sources get clicked only about 1% of the time, the first win is being named and described correctly.

Track mentions and citations across engines, not just blue links, so your brand is present, accurate, and favorably framed inside the response.

The Measurement Stack: 10 Core Metrics That Actually Matter

Use these metrics as your shared language with stakeholders. Keep them simple, repeatable, and comparable across engines.

1) AI Brand Visibility (ABV)

How often your brand appears in AI answers across a defined prompt set.

- Formula: ABV = brand appearances ÷ total AI answers for your prompts.
- Count mentions and citations. Track by platform to spot where you lead or lag.
- Why it matters: multiple sources agree visibility should be measured at the answer level, not only by SERP rank.

Agencies like Digital Elevator help brands track how often they’re cited in LLM-generated responses, enabling them to improve their AI Brand Visibility (ABV) and citation exposure.
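To make the ABV formula concrete, here is a minimal Python sketch. The log rows, platform names, and prompts are hypothetical sample data, and the `abv` and `abv_by_platform` helpers are illustrative names rather than part of any tool mentioned in this article.

```python
from collections import defaultdict

# Hypothetical log rows: (platform, prompt, brand_appeared)
answers = [
    ("Google AI Overviews", "what is crm software", True),
    ("Google AI Overviews", "best crm for startups", False),
    ("ChatGPT", "what is crm software", True),
    ("ChatGPT", "best crm for startups", True),
    ("Perplexity", "what is crm software", False),
    ("Perplexity", "best crm for startups", False),
]


def abv(rows):
    """ABV = brand appearances / total AI answers for the prompt set."""
    rows = list(rows)
    if not rows:
        return 0.0
    return sum(1 for _, _, appeared in rows if appeared) / len(rows)


def abv_by_platform(rows):
    """Group rows by platform and compute ABV for each group."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row[0]].append(row)
    return {platform: abv(group) for platform, group in grouped.items()}


overall = abv(answers)
per_platform = abv_by_platform(answers)

print(f"Overall ABV: {overall:.0%}")
for platform, score in per_platform.items():
    print(f"{platform}: {score:.0%}")
```

Splitting the result by platform is what lets you see where you lead or lag, rather than hiding a weak engine behind a healthy average.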

2) AI Share of Voice (AI SOV)

Your brand’s relative presence compared to competitors inside AI answers.

- Formula: AI SOV = your mentions ÷ total brand mentions (yours plus competitors') for the same prompts.
- Use it to benchmark category position and track gains weekly or monthly.
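A short Python sketch of the share-of-voice calculation follows. It assumes the denominator is every brand mention in the answers (yours included), so that the shares across brands add up to 100%. The brand names and counts are hypothetical.

```python
from collections import Counter

# Hypothetical mention counts gathered from the same prompt set
mentions = Counter({"YourBrand": 12, "CompetitorA": 18, "CompetitorB": 10})


def share_of_voice(counts, brand):
    """Brand mentions divided by all brand mentions (assumed denominator)."""
    total = sum(counts.values())
    return counts[brand] / total if total else 0.0


sov = {brand: share_of_voice(mentions, brand) for brand in mentions}

for brand, share in sorted(sov.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{brand}: {share:.1%}")
```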

3) Citation Frequency

How often your domain is linked or named as a source in the answer.

- Track by engine and by page type (homepage, product, blog).
- High citation frequency signals authority in generative systems.

4) Citation Exposure Score (CES)

Not all citations are equal. Create a weighted score based on position and number of citations surfaced in the answer.

- Example weights: first citation = 1.0, middle = 0.6, endnotes = 0.3.
- Sum weights across prompts to compare content assets.
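The weighting idea can be sketched in a few lines of Python. The weights are the example values above; the slot labels (`first`, `middle`, `endnote`), the URLs, and the observations are hypothetical, and how you assign a citation to a slot is a judgment call you would need to define for each engine.

```python
from collections import defaultdict

# Example weights from the text; slot labels are assumed names
WEIGHTS = {"first": 1.0, "middle": 0.6, "endnote": 0.3}

# Hypothetical observations: (prompt, cited_url, slot)
citations = [
    ("best crm for startups", "https://example.com/crm-guide", "first"),
    ("what is crm software", "https://example.com/crm-guide", "middle"),
    ("crm pricing comparison", "https://example.com/pricing", "endnote"),
    ("crm vs spreadsheet", "https://example.com/pricing", "middle"),
]


def citation_exposure(rows, weights=WEIGHTS):
    """Sum the slot weight of every citation, per cited URL."""
    scores = defaultdict(float)
    for _prompt, url, slot in rows:
        scores[url] += weights[slot]
    return dict(scores)


ces = citation_exposure(citations)

for url, score in sorted(ces.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{score:.1f}  {url}")
```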

5) Prominence in the Answer

Was your brand in the lead paragraph, a bulleted recommendation, or a footnote? This is different from the citation position.

- Score: lead mention = 2, body mention = 1, footnote only = 0.5.
- Use alongside CES to prioritize content that moves up‑stack in the summary.
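As a rough illustration, the prominence scale can be averaged across a prompt set like this. Scoring an answer with no brand mention as 0 is an assumption added here, and the placement labels are hypothetical.

```python
# Scale from the text, plus an assumed 0 for answers with no mention
PROMINENCE = {"lead": 2.0, "body": 1.0, "footnote": 0.5, "absent": 0.0}

# Hypothetical placement label for each answer in the prompt set
placements = ["lead", "body", "absent", "footnote", "body"]


def average_prominence(labels, scale=PROMINENCE):
    """Mean prominence score across all answers, 0.0 for an empty set."""
    if not labels:
        return 0.0
    return sum(scale[label] for label in labels) / len(labels)


avg = average_prominence(placements)
print(f"Average prominence: {avg:.2f} (max {max(PROMINENCE.values()):.1f})")
```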

6) Context and Sentiment Accuracy

Was your brand described correctly and in a positive or neutral tone? Label answers as Positive, Neutral, or Negative and track misstatements to fix with content and entity work. Guidance for assessing quality signals and E‑E‑A‑T still applies here.

7) Query Coverage

How many unique intents feature your brand: definitions, comparisons, “best” lists, how‑tos, local queries, and post‑purchase help. Broader intent coverage means more ways customers can encounter you.

8) Platform Coverage

Coverage across Google AI Overviews, ChatGPT, Perplexity, and Copilot/Gemini. Google notes AI Overviews are reaching hundreds of millions of users, so include them in every audit.
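Query Coverage and Platform Coverage can both be read off the same log. The sketch below assumes coverage means "the share of intents (or engines) where your brand appeared at least once"; the intent labels and rows are hypothetical.

```python
# Hypothetical rows: (intent, platform, brand_appeared)
rows = [
    ("definition", "Google AI Overviews", True),
    ("comparison", "ChatGPT", True),
    ("best-of", "Perplexity", False),
    ("how-to", "Copilot", False),
    ("local", "Google AI Overviews", False),
    ("definition", "ChatGPT", True),
]


def coverage(rows, index):
    """Share of distinct values at `index` with at least one brand appearance."""
    seen = {row[index] for row in rows}
    covered = {row[index] for row in rows if row[2]}
    return len(covered) / len(seen) if seen else 0.0


query_coverage = coverage(rows, 0)      # by intent
platform_coverage = coverage(rows, 1)   # by engine

print(f"Query coverage: {query_coverage:.0%}")
print(f"Platform coverage: {platform_coverage:.0%}")
```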

9) Engagement Proxies

AI answers don’t always yield clicks, but you can watch follow‑up questions, source verification clicks, brand search lift, and direct traffic spikes after exposure. Treat these as directional signals of brand lift from AI.

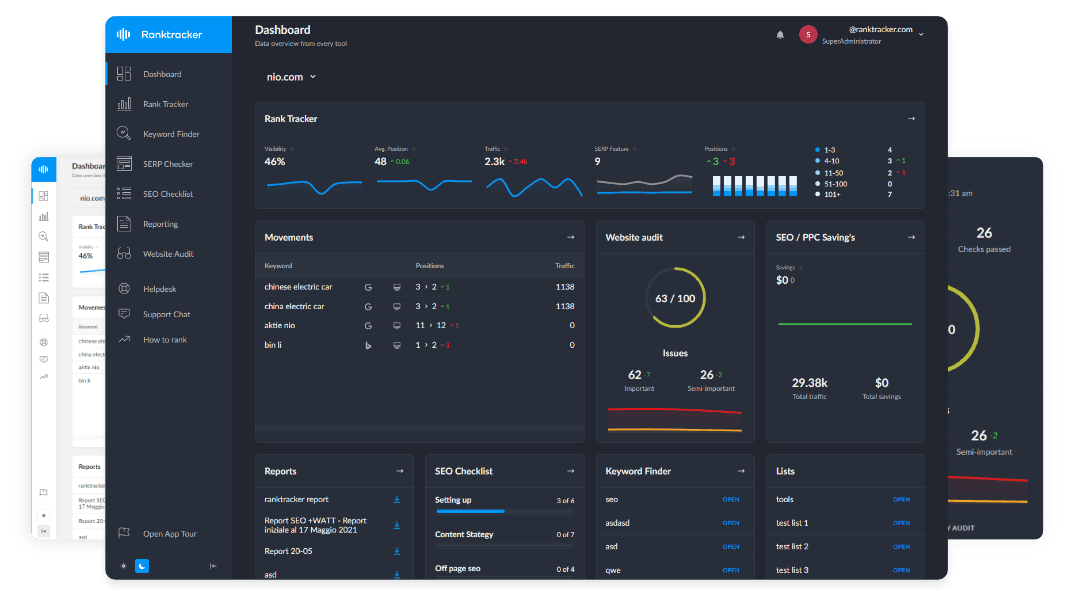

The All-in-One Platform for Effective SEO

Behind every successful business is a strong SEO campaign. But with countless optimization tools and techniques out there to choose from, it can be hard to know where to start. Well, fear no more, cause I've got just the thing to help. Presenting the Ranktracker all-in-one platform for effective SEO

We have finally opened registration to Ranktracker absolutely free!

Create a free accountOr Sign in using your credentials

Traditional link clicks fall to 8% on pages with AI summaries, compared with 15% without them. That is exactly why we measure **AI Brand Visibility** and **AI SOV** at the answer level.

10) Freshness and Update Cadence

Models favor current, accurate information. Track whether AI answers cite your newest pages vs older ones and set a content refresh schedule for high‑value topics.

A Simple Scoring Framework You Can Copy

Use a 100‑point score so you can report progress in plain English:

- Visibility (30 pts): ABV trend (15), AI SOV trend (15).
- Authority (30 pts): Citation Frequency (15), CES (15).
- Quality (20 pts): Prominence (10), Sentiment/Accuracy (10).
- Footprint (20 pts): Query Coverage (10), Platform Coverage (10).

Update the score monthly with weekly spot checks. Keep the prompt set stable so trend lines are meaningful.
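One way to turn the point allocation into a number is sketched below. The article does not specify how each metric converts into points, so this assumes you first normalize every metric to a 0 to 1 value (for example against a target or a category leader) and then scale it by its maximum points. The metric keys and example values are hypothetical.

```python
# Point allocation from the framework above; keys are assumed names
MAX_POINTS = {
    "abv": 15, "ai_sov": 15,                          # Visibility (30)
    "citation_frequency": 15, "ces": 15,              # Authority (30)
    "prominence": 10, "sentiment_accuracy": 10,       # Quality (20)
    "query_coverage": 10, "platform_coverage": 10,    # Footprint (20)
}
assert sum(MAX_POINTS.values()) == 100


def visibility_score(normalized):
    """Scale each 0-1 metric by its max points; missing metrics score 0."""
    total = 0.0
    for metric, max_pts in MAX_POINTS.items():
        value = min(max(normalized.get(metric, 0.0), 0.0), 1.0)  # clamp to 0-1
        total += value * max_pts
    return round(total, 1)


# Hypothetical normalized metrics for one reporting period
example = {
    "abv": 0.5, "ai_sov": 0.3,
    "citation_frequency": 0.4, "ces": 0.6,
    "prominence": 0.45, "sentiment_accuracy": 0.8,
    "query_coverage": 0.4, "platform_coverage": 0.5,
}

score = visibility_score(example)
print(f"AI Visibility Score: {score}/100")
```

Whatever normalization you choose, keep it fixed between reporting periods; changing it has the same effect on trend lines as changing the prompt set.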

How to Build Your Prompt Set

Create a 50‑prompt test that mirrors the customer journey:

- Category definitions: “What is [category]?”
- Comparisons: “[Brand] vs [Brand]” and “best [product] for [use case]”
- Jobs to be done: “how to [task] with [category]”
- Local intent: “best [service] near me” or “[city]” variants
- Brand queries: “is [Brand] good for [use case]”
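If you prefer to generate the prompt set rather than type it by hand, templates like the ones above can be expanded programmatically. This is a minimal sketch: the placeholder names, the fill-in values, and the `expand` helper are all hypothetical, and you would still want to review the output and add conversational variants manually.

```python
import string
from itertools import product

# Templates modeled on the prompt types above; placeholder names are assumed
TEMPLATES = {
    "definition": "What is {category}?",
    "comparison": "{brand} vs {competitor}",
    "best_of": "best {category} for {use_case}",
    "job_to_be_done": "how to {task} with {category}",
    "local": "best {service} in {city}",
    "brand_query": "is {brand} good for {use_case}",
}

# Hypothetical fill-in values
VALUES = {
    "category": ["CRM software"],
    "brand": ["YourBrand"],
    "competitor": ["CompetitorA", "CompetitorB"],
    "use_case": ["startups", "agencies"],
    "task": ["track leads"],
    "service": ["CRM consultant"],
    "city": ["Austin", "Denver"],
}


def expand(template, values):
    """Fill a template with every combination of its placeholder values."""
    names = [name for _, name, _, _ in string.Formatter().parse(template) if name]
    combos = product(*(values[name] for name in names))
    return [template.format(**dict(zip(names, combo))) for combo in combos]


prompts = [
    (intent, prompt)
    for intent, template in TEMPLATES.items()
    for prompt in expand(template, VALUES)
]

for intent, prompt in prompts:
    print(f"{intent:15} {prompt}")
```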

Write prompts in natural language and include conversational variants. Log the exact prompt, engine, visibility outcome, citations, prominence, sentiment, and notes for follow‑up tests.

Generative experiences like AI Overviews are designed for complex questions and follow‑ups, so include multi‑part prompts and compare how engines handle them.
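The logging fields named above (prompt, engine, visibility outcome, citations, prominence, sentiment, notes) map naturally onto a flat record. The following Python sketch writes such records as CSV so they can be pasted into a spreadsheet; the field names, label vocabularies, and sample entries are assumptions for illustration.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class PromptLogEntry:
    prompt: str          # exact prompt text as entered
    engine: str          # e.g. "ChatGPT", "Perplexity"
    brand_visible: bool  # did the brand appear in the answer?
    citations: str       # cited URLs, separated by ";"
    prominence: str      # assumed labels: lead, body, footnote, absent
    sentiment: str       # Positive, Neutral, or Negative
    notes: str = ""      # follow-up tests, misstatements to fix


def to_csv(entries):
    """Serialize log entries to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(PromptLogEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buffer.getvalue()


# Hypothetical sample entries
log = [
    PromptLogEntry("best crm for startups", "ChatGPT", True,
                   "https://example.com/crm-guide", "lead", "Positive"),
    PromptLogEntry("what is crm software", "Perplexity", False,
                   "", "absent", "Neutral", "retest with follow-up question"),
]

csv_text = to_csv(log)
print(csv_text)
```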

Recommended Tools for Tracking

You can start with a spreadsheet and a routine, but specialized tools speed up tracking and benchmarking.

- CheckThat (Free + preloaded for B2B categories): CheckThat is an AI visibility platform built for B2B software buying categories. It comes preloaded with mapped categories, researched brands, and a shared, human-reviewed prompt library, so you avoid the usual “blank dashboard” setup. The free tier is genuinely useful for getting oriented fast; you can then upgrade if you want more custom prompt tracking and deeper historical trends.
- Wix AI Visibility Overview: Track mentions, citations, competitor comparisons, and AI traffic in one place; useful if your site runs on Wix. Their rundown of 13 tools also highlights options like Peec AI, Otterly.AI, Profound, and Semrush AI Toolkit for broader stacks.
- Superlines: Provides dashboards around AI Brand Visibility, AI SOV, and Citation Frequency with a measurement framework aligned to generative search.
- Community and practitioner roundups: Practitioner guides catalog tools and approaches across ChatGPT, Perplexity, Gemini, and Copilot. Use them to compare platforms and to shape your own prompt library.

If you’re not ready to buy software, replicate these capabilities with a prompt schedule and a logging template. You can expand to automation once you know which metrics drive results for your category.

Tie AI Visibility to Brand Health and Demand

Generative search is new, but the brand fundamentals still apply. Layer brand awareness metrics on top of your AI visibility score to show business impact:

- Unaided and aided awareness via recurring consumer surveys.
- Share of voice and share of impressions across channels.
- Branded search volume and referral traffic from cited sources.

This pairing lets you tell a clear story: we improved ABV and AI SOV, and we saw a lift in direct brand searches and unaided recall in the next wave of tracking.

What Great Measurement Uncovers (And How to Act on It)

1) You’re mentioned but not cited

Action: publish or update a citation‑worthy page. Add a clear, answer‑ready summary up top, reinforce claims with references, and use FAQ sections. Many AI visibility guides stress that well‑structured, factual pages increase the chance of being cited.

2) You’re cited, but low in the list

Action: increase your Prominence and CES by improving entity clarity and structured data (Organization, FAQ, Product, Review). Consistency across Wikidata, LinkedIn, Crunchbase, and your site helps models interpret your brand correctly.
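For the structured data piece, a small Python sketch can generate Organization markup as JSON-LD using schema.org's `name`, `url`, `description`, and `sameAs` properties. Every value below is a placeholder (including the profile URLs and the Wikidata ID), and whether a given AI engine actually reads this markup is not something the article confirms.

```python
import json

# Placeholder values: replace with your real brand details and profile URLs
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "YourBrand",
    "url": "https://www.example.com",
    "description": "One-sentence description that matches your other profiles.",
    "sameAs": [
        "https://www.wikidata.org/wiki/QXXXXXXX",
        "https://www.linkedin.com/company/yourbrand",
        "https://www.crunchbase.com/organization/yourbrand",
    ],
}

json_ld = json.dumps(organization, indent=2)

# Wrap the JSON-LD in the script tag that goes into the page <head>
script_tag = f'<script type="application/ld+json">\n{json_ld}\n</script>'
print(script_tag)
```

Keeping the `name` and `description` identical to what appears on the linked profiles is the point of the exercise: the `sameAs` links only help if the entities they point to tell the same story.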

3) You win on one engine but lose on another

Action: expand your footprint. Perplexity tends to surface research‑style sources, while AI Overviews blend quick summaries with links. Tailor assets to each environment and keep testing multi‑step prompts.

4) You’re absent on “best” and “top” queries

Action: ship comparison pages and buyer’s guides that map to how users ask AI for recommendations. Include concise verdicts and transparent criteria. This content repeatedly shows up in optimization advice for LLM‑driven discovery.

A 30‑Day Plan to Operationalize AI Visibility

Week 1: Baseline

- Pick 50 prompts and 4 engines.
- Run tests and log ABV, AI SOV, citations, prominence, and sentiment.
- Build your first 100‑point AI Visibility Score.

Week 2: Fix the leaks

- Identify pages that get mentions without citations.
- Add answer boxes, FAQ schema, and missing sources.
- Refresh any page older than 12 months that targets a key prompt.
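The refresh rule in the last step is easy to automate against a page inventory. This sketch approximates 12 months as 365 days and uses a fixed "today" so the result is reproducible; the page paths, dates, and the `stale_pages` helper are hypothetical.

```python
from datetime import date, timedelta

# Hypothetical inventory: (url, last_updated, targets_key_prompt)
pages = [
    ("/crm-guide", date(2024, 1, 15), True),
    ("/pricing", date(2025, 3, 1), True),
    ("/old-news", date(2023, 5, 1), False),
    ("/comparison", date(2024, 5, 31), True),
]


def stale_pages(pages, today, max_age_days=365):
    """Key-prompt pages last updated more than max_age_days ago."""
    cutoff = today - timedelta(days=max_age_days)
    return [url for url, updated, is_key in pages if is_key and updated < cutoff]


today = date(2025, 6, 1)  # fixed date for a reproducible example
to_refresh = stale_pages(pages, today)
print("Refresh queue:", to_refresh)
```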

Week 3: Expand coverage

- Add comparison and best‑of content for 5 high‑intent prompts.
- Claim and align your entity data across Wikidata, Google Business Profile (formerly GMB), LinkedIn, and Crunchbase.

Week 4: Track and report

- Re‑run the prompt set.
- Show movement in ABV, AI SOV, and CES by engine.
- Pair with brand awareness deltas where available to connect to pipeline.
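Showing movement by engine comes down to subtracting the Week 1 baseline from the Week 4 re-run. A minimal sketch, with hypothetical ABV values for both runs:

```python
# Hypothetical ABV per engine from the Week 1 baseline and the Week 4 re-run
baseline = {"Google AI Overviews": 0.40, "ChatGPT": 0.55, "Perplexity": 0.30, "Copilot": 0.25}
rerun = {"Google AI Overviews": 0.46, "ChatGPT": 0.55, "Perplexity": 0.38, "Copilot": 0.20}


def movement(before, after):
    """Change per engine, for engines present in both runs."""
    return {
        engine: round(after[engine] - before[engine], 4)
        for engine in before
        if engine in after
    }


deltas = movement(baseline, rerun)

for engine, delta in deltas.items():
    print(f"{engine}: {delta:+.0%}")
```

The same function works for AI SOV and CES as long as both runs used the same prompt set.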

Common Pitfalls to Avoid

- Over‑relying on traditional SEO KPIs. Clicks and CTR still matter, but they undercount brand exposure inside AI answers. Track answer‑level visibility and citations to close the gap.
- Measuring only one engine. Your audience may rely on a mix of Google, ChatGPT, Perplexity, and Copilot. Measure across the set.
- Ignoring structure and entities. LLMs parse clear headings, definitional content, and schema more reliably, which improves recall and citations.
- Letting content go stale. Fresh, accurate pages are more likely to be cited in dynamic answers.

Sessions end 26% of the time after an AI summary, compared with 16% without one, so focusing on CTR alone undercounts the exposure your brand earns inside the answer.

Your Living Dashboard: What to Show Leadership

Keep your dashboard lean and consistent:

- AI Visibility Score (out of 100) with a one‑line explanation.
- ABV and AI SOV by engine.
- Top 10 cited pages with CES and freshness date.
- New entity fixes shipped this month.
- Brand health overlays: unaided recall, branded search, and referral clicks from AI‑cited sources.

This view answers the two questions leaders care about: are we showing up, and does it move the market?

Final Word

The brands that win in generative search are the ones that measure the answer, not just the SERP. Put AI Brand Visibility, AI Share of Voice, and Citation Frequency at the heart of your reporting, then fix what the data reveals: structure your content for answers, tighten your entities, refresh your most cited pages, and expand your prompt coverage.

Google is expanding AI Overviews, and users are engaging with links inside those answers. That means the upside is real for teams that track and improve their presence. Build the scorecard, run the prompts, and iterate. That’s how you earn and hold the best AI Search Visibility in your category this year.